Image fusion method based on the nonsubsampled contourlet transform

A technique combining the contourlet transform with image fusion, applied in the field of image fusion


Embodiment Construction

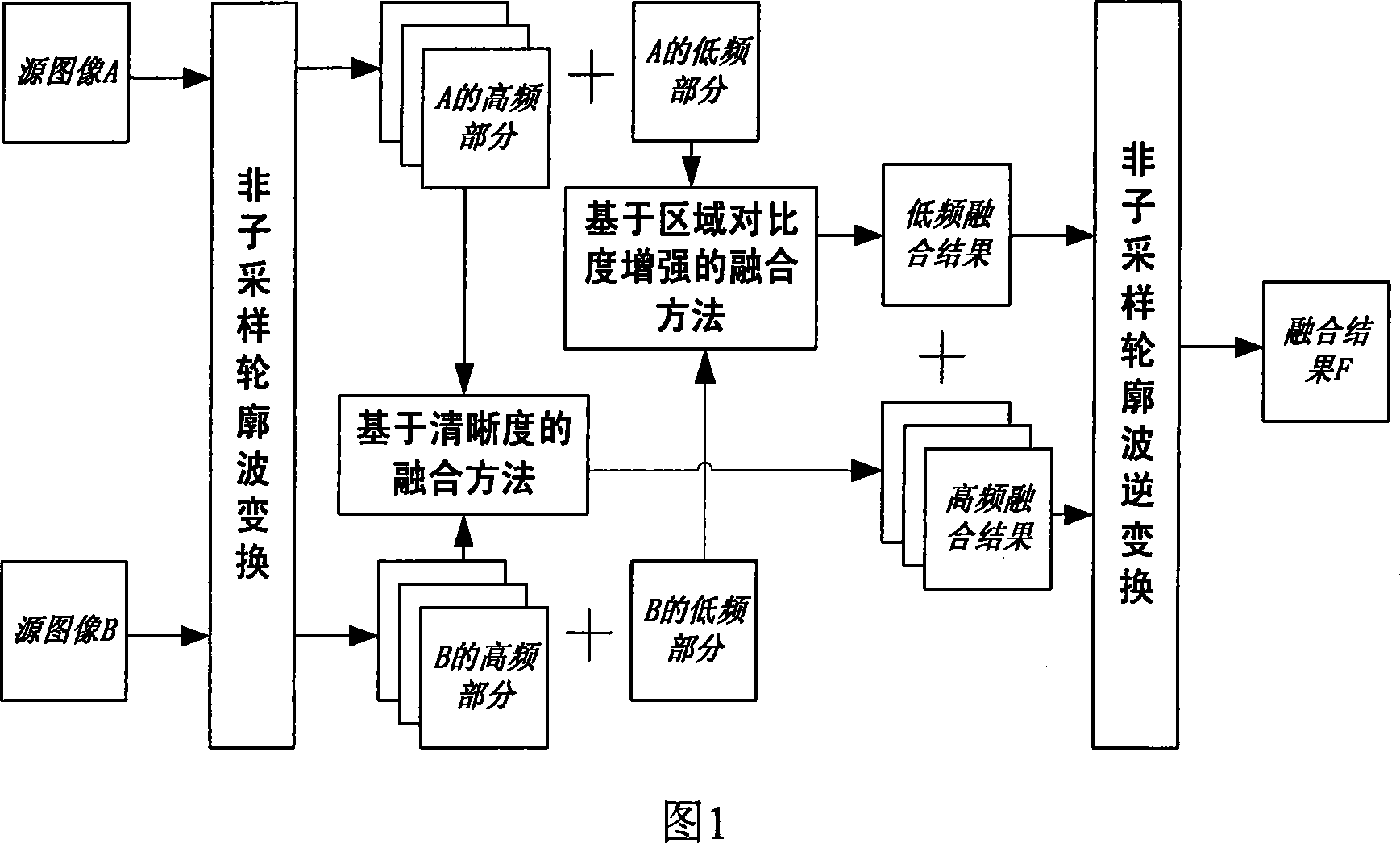

[0053] The present invention uses the nonsubsampled contourlet transform (NSCT) to decompose each image at multiple scales, and then applies a different fusion operation to each frequency band. The processing flow is shown in Figure 1:

[0054] (1) The source images A and B are each decomposed by the nonsubsampled contourlet transform into low-frequency sub-images $Y_0^A$, $Y_0^B$ and a series of high-frequency sub-images $Y_k^A$, $Y_k^B$, $k = 1, 2, \ldots, N$, where $N$ is the number of high-frequency sub-images.
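The decompose, fuse per band, reconstruct flow can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the patented method: `decompose` is a stand-in that produces one undecimated low-pass band and a single residual detail band (N = 1) rather than a real NSCT, and the two fusion rules (averaging the low-frequency band, keeping the larger-magnitude high-frequency coefficient) are placeholders, since the fusion rules themselves are not given in the text above. All function names are hypothetical.

```python
def box_blur(img):
    """3x3 mean filter with edge replication; output keeps the input size."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            total = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr = min(max(r + dr, 0), h - 1)  # clamp row index
                    cc = min(max(c + dc, 0), w - 1)  # clamp column index
                    total += img[rr][cc]
            out[r][c] = total / 9.0
    return out


def decompose(img):
    """Stand-in for NSCT (assumption): returns (Y_0, [Y_1]) with no subsampling."""
    low = box_blur(img)
    high = [[p - q for p, q in zip(row_i, row_l)] for row_i, row_l in zip(img, low)]
    return low, [high]


def reconstruct(low, highs):
    """Inverse of the stand-in decomposition: sum the low band and all detail bands."""
    out = [row[:] for row in low]
    for band in highs:
        for r, row in enumerate(band):
            for c, value in enumerate(row):
                out[r][c] += value
    return out


def fuse(img_a, img_b):
    """Decompose both sources, fuse each band with its own rule, then reconstruct."""
    low_a, highs_a = decompose(img_a)
    low_b, highs_b = decompose(img_b)
    # Placeholder low-frequency rule (assumption): pixel-wise average.
    low_f = [[(a + b) / 2.0 for a, b in zip(ra, rb)] for ra, rb in zip(low_a, low_b)]
    # Placeholder high-frequency rule (assumption): keep the larger magnitude.
    highs_f = [
        [[a if abs(a) >= abs(b) else b for a, b in zip(ra, rb)] for ra, rb in zip(ha, hb)]
        for ha, hb in zip(highs_a, highs_b)
    ]
    return reconstruct(low_f, highs_f)
```

Because the stand-in decomposition is not subsampled, every band has the same size as the source image, which is what lets the per-band rules compare coefficients pixel by pixel.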

[0055] The high-frequency part represents the detail component of the image, including the edge detail information of the source image. The number $N$ of high-frequency sub-images is determined by the number of pyramid decomposition stages in the nonsubsampled contourlet transform and by the number of directions in the directional filter bank decomposition. The low-frequency part represents the approximate component of the image, containing...
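As a small worked example of how N depends on these two quantities, the sketch below assumes the usual NSCT construction in which a directional filter bank with l decomposition levels at a given pyramid stage yields 2^l directional sub-images. The text above does not state this formula explicitly, so treat it as an assumption; the function name and the example level list are hypothetical.

```python
def count_high_frequency_subbands(direction_levels):
    """Return N for a given NSCT configuration.

    direction_levels[j] is the number of directional filter bank levels
    used at pyramid stage j. Assumption: l levels give 2**l directions.
    """
    return sum(2 ** levels for levels in direction_levels)


# Three pyramid stages with 4, 8 and 8 directions respectively.
n_subbands = count_high_frequency_subbands([2, 3, 3])
print(n_subbands)  # 20
```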
