Automatic frontal-view gait segmentation for abnormal gait quantification

A technology for automatic frontal-view gait segmentation and abnormal gait quantification, applied in fields such as image enhancement, instruments, and person identification. It addresses the reduced quality of life of affected individuals, the difficulty of human gait analysis and assessment, and the complexity of existing processes.


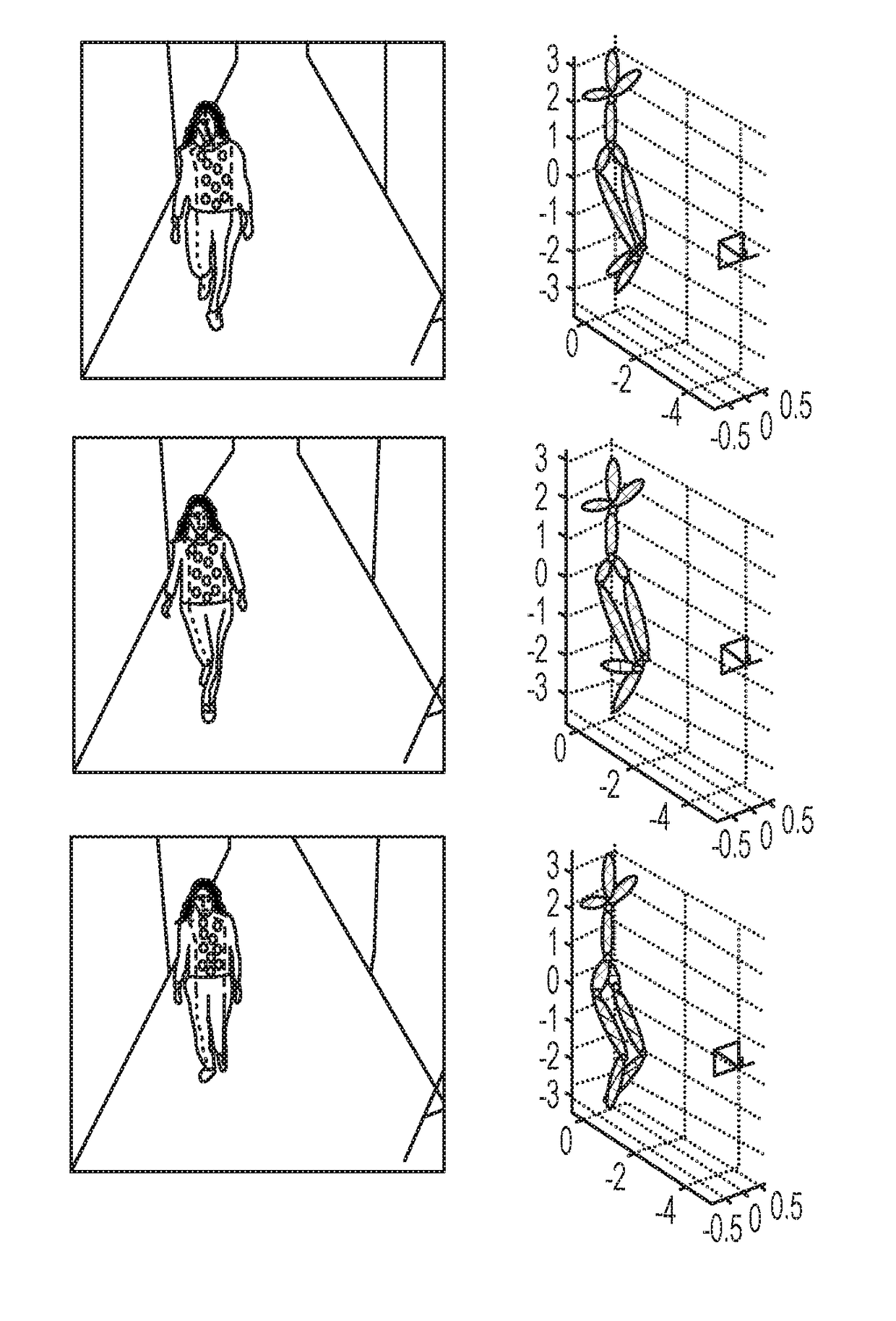


Embodiment Construction

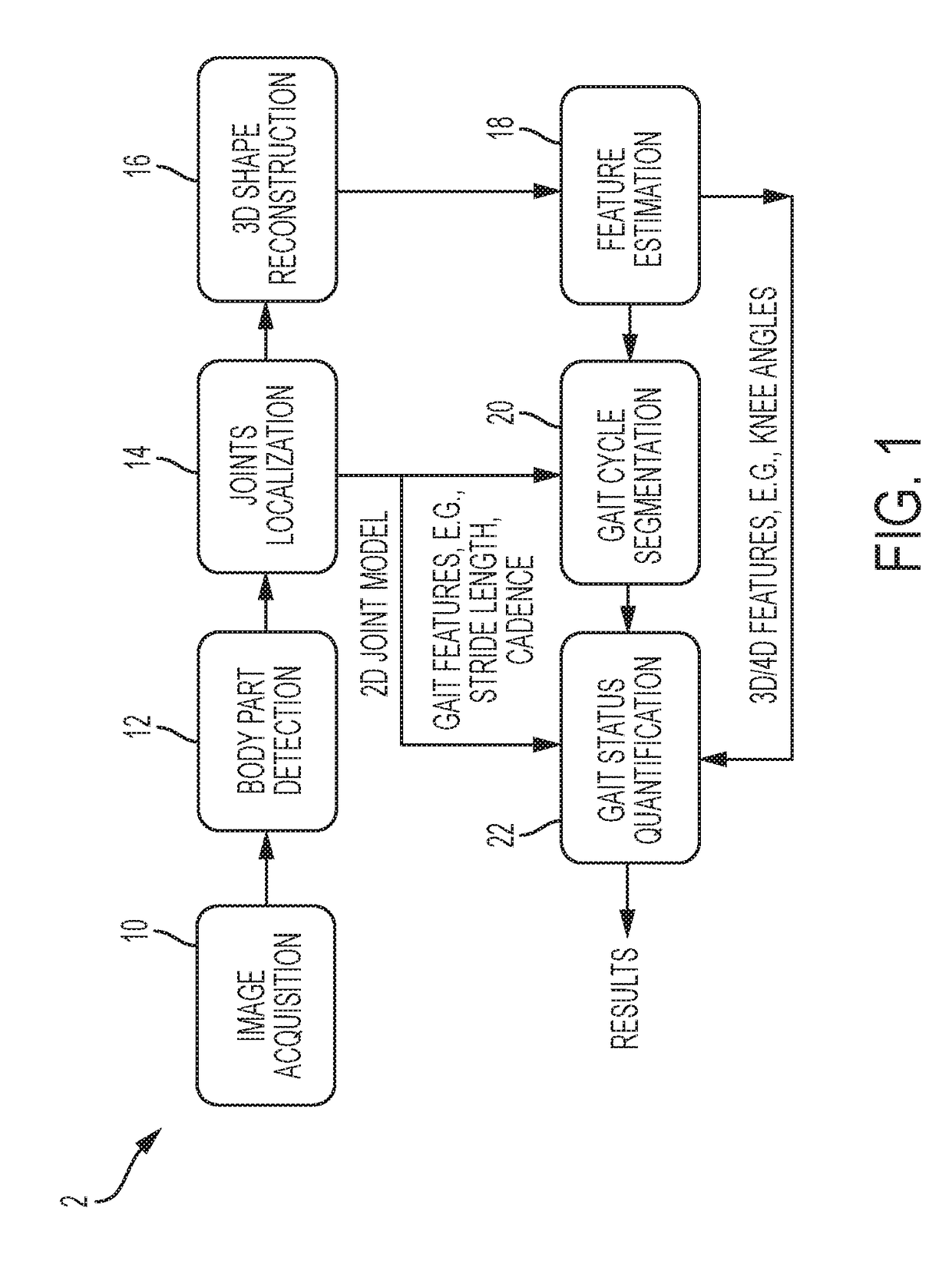

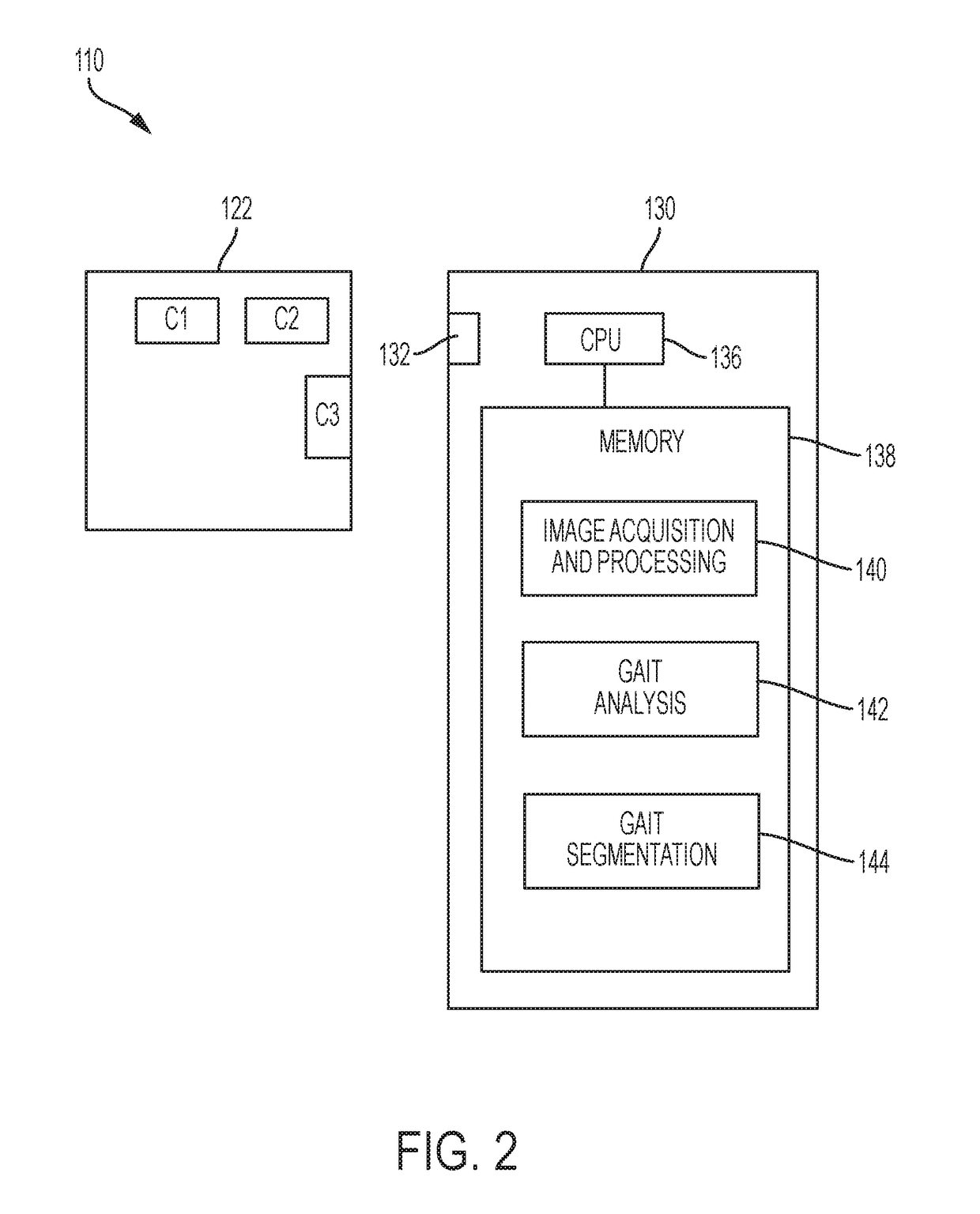

[0032] The present disclosure sets forth systems and methods for objectively evaluating gait parameters by applying computer vision techniques that can use existing monitoring systems without substantial additional cost or equipment. Aspects of the present disclosure can perform the assessment during a user's daily activity without requiring the user to wear a device (e.g., a sensor or the like) or special clothing (e.g., a uniform with distinct marks on certain joints of the person). Computer vision in accordance with the present disclosure can allow simultaneous, in-depth analysis of a greater number of parameters than current wearable systems. Unlike approaches that rely on wearable sensors, the present disclosure is not limited by the power consumption requirements of sensors. The present disclosure can provide a consistent, objective measurement of gait parameters, which reduces the error and variability incurred by subjective techniques. To achieve these goals...
