Surgical navigation system with one or more body borne components and method therefor

A surgical navigation system with body-borne components, applied in the field of computer-assisted surgery and surgical navigation, addresses the problems of binocular-based navigation systems, large and expensive medical equipment systems, and disruption of line-of-sight between cameras and objects.

Examples

Handheld Camera Embodiment

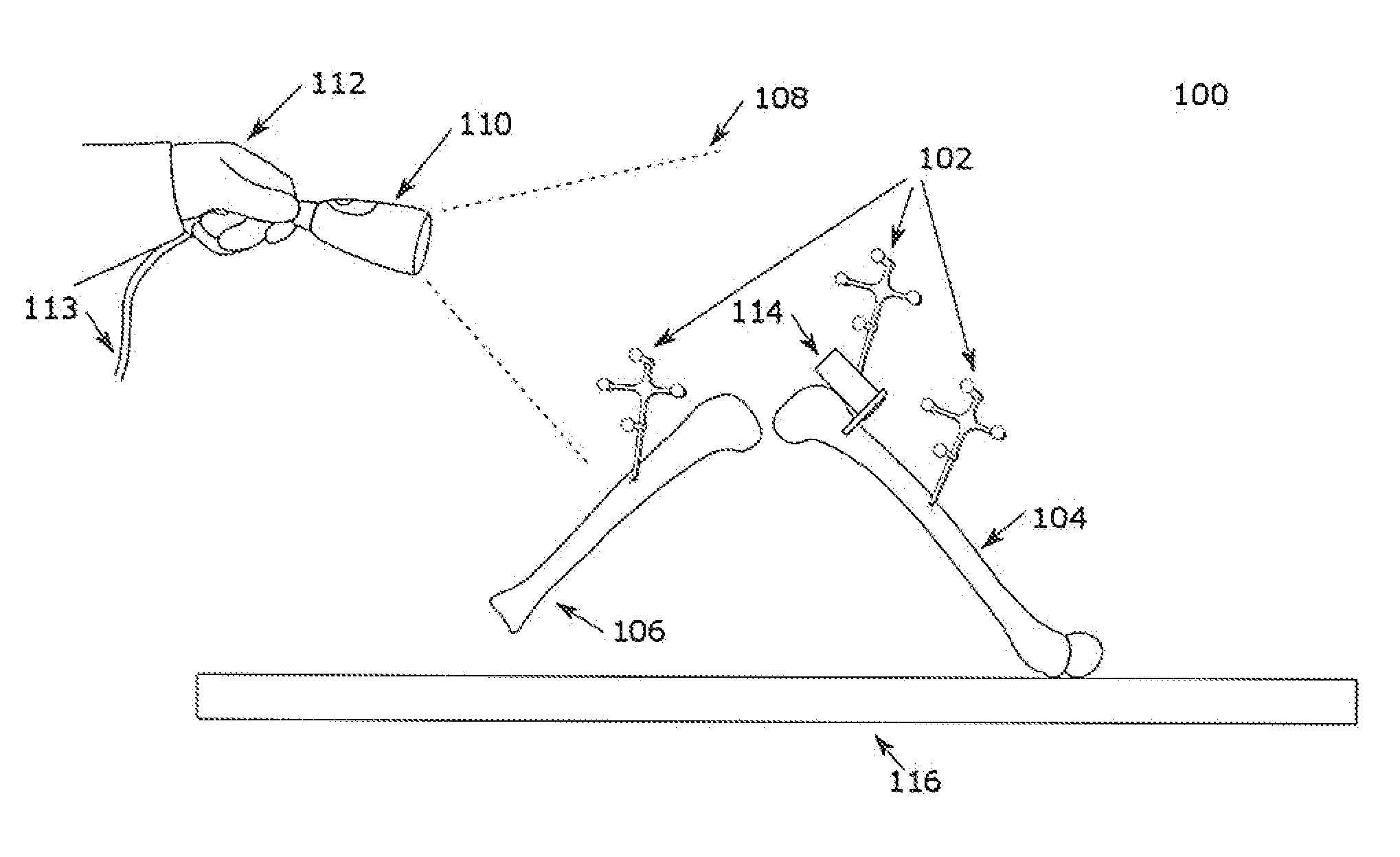

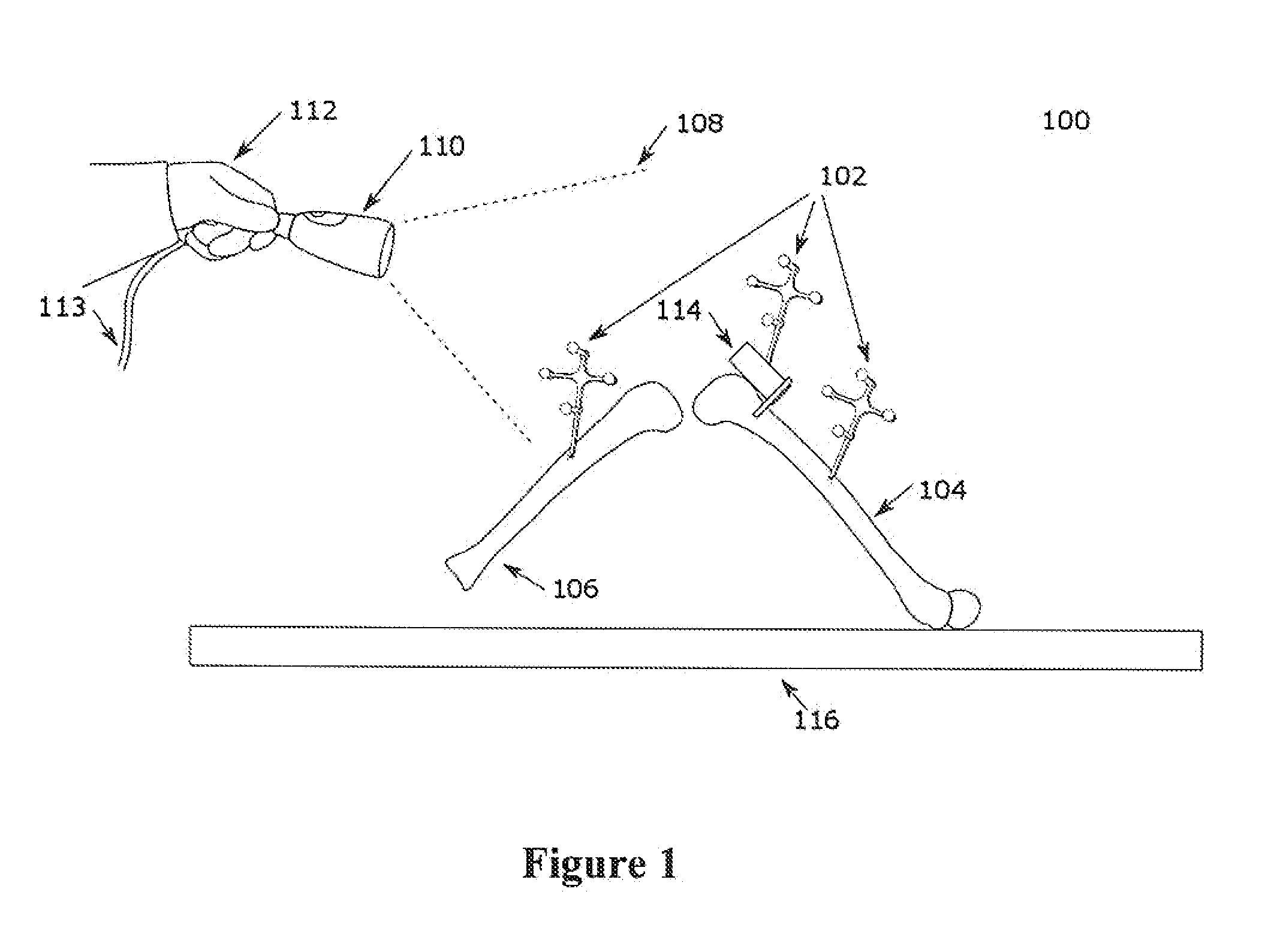

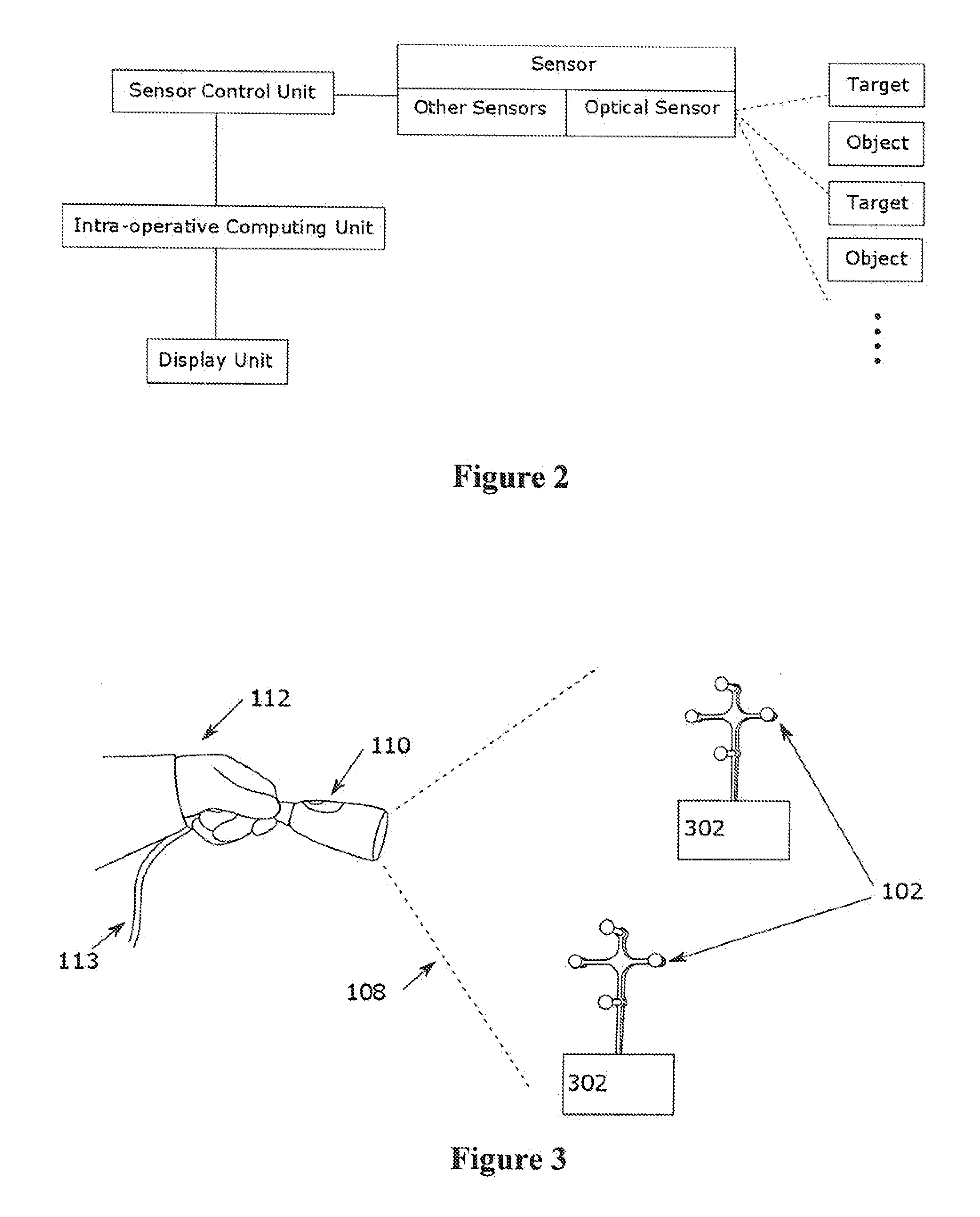

[0052] In one embodiment, a sensor is configured for handheld use. As depicted in FIG. 3, the sensor 110 may localize two or more targets 102 in order to compute a relative pose between the targets 102. The targets 102 are attached to objects 302, so the relative pose between the objects 302 can also be calculated. In navigated total knee arthroplasty (TKA), the objects could be the femur 104 and the tibia 106, or the femur 104 and the cutting guide 114.
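The relative-pose computation described above can be illustrated with a minimal sketch. It assumes the sensor reports each target's pose as a 4x4 homogeneous transform in the sensor frame; the helper names (`pose`, `invert`, `relative_pose`) and all numeric values are hypothetical and are not taken from the patent. The sketch also shows why handheld use is workable under this assumption: moving the sensor changes both measured poses by the same transform, which cancels in the relative pose.

```python
import math

def pose(yaw_deg, tx, ty, tz):
    """Build a 4x4 rigid transform: rotation about z by yaw_deg plus a translation."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    return [[c, -s, 0.0, tx],
            [s, c, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]

def matmul(a, b):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def invert(t):
    """Invert a rigid transform: the inverse of [R | p] is [R^T | -R^T p]."""
    rt = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [-sum(rt[i][k] * t[k][3] for k in range(3)) for i in range(3)]
    return [rt[0] + [p[0]], rt[1] + [p[1]], rt[2] + [p[2]],
            [0.0, 0.0, 0.0, 1.0]]

def relative_pose(sensor_T_a, sensor_T_b):
    """Pose of target b expressed in the frame of target a."""
    return matmul(invert(sensor_T_a), sensor_T_b)

# Hypothetical target poses measured in the sensor frame (units: degrees, mm).
sensor_T_femur = pose(30.0, 100.0, 0.0, 400.0)
sensor_T_tibia = pose(45.0, 120.0, -50.0, 420.0)
femur_T_tibia = relative_pose(sensor_T_femur, sensor_T_tibia)

# Simulate hand motion: both measurements are premultiplied by the same transform.
hand_motion = pose(-20.0, 5.0, 10.0, -15.0)
femur_T_tibia_moved = relative_pose(matmul(hand_motion, sensor_T_femur),
                                    matmul(hand_motion, sensor_T_tibia))

# Largest element-wise difference between the two relative poses.
max_diff = max(abs(femur_T_tibia[i][j] - femur_T_tibia_moved[i][j])
               for i in range(4) for j in range(4))
```

Here `max_diff` stays at floating-point noise level, since the sensor motion drops out of the product of the inverted and the direct transform. A real system would additionally have to handle measurement noise and targets that are not localized in the same frame, which this sketch ignores.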

[0053] Furthermore, both handheld and non-handheld modes may be supported for use of the sensor within the same surgery. This is illustrated in FIG. 4a and FIG. 4b. For example, during a TKA, it may be preferable to leave the sensor 110 mounted to a fixed structure 402 for the majority of the procedure with the use of a releasable mechanical connection 404, such as the one described in U.S. 20140275940 titled “System and method for intra-operative leg position measurement”, the entire contents of which are incorporated her...

Body Mounted Embodiment

[0055] As illustrated in FIG. 5, in addition to handheld configurations, the sensor 110 may be mounted onto a surgeon 502 (or other user) using a sensor mounting structure 504. The sensor mounting structure 504 may allow the sensor to be attached to a surgeon's forehead (by way of example, shown as a headband). The sensor 110 is preferably placed on the sensor mounting structure 504 such that the working volume 408 of the sensor 110 and the surgeon's visual field of view 506 are substantially aligned, i.e. the majority of the working volume 408 overlaps with the field of view 108 of the sensor 110. In such a configuration, if the surgeon 502 can see the targets 102, the sensor 110 (while mounted on the sensor mounting structure 504) will likely be able to do so as well. This configuration allows the surgeon to rapidly and intuitively overcome line-of-sight disruptions between the sensor 110 and the targets 102. In this configuration, it is desirable that the op...
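The alignment argument in this paragraph can be sketched with a simple geometric model. The following is an illustration only: it assumes that both the surgeon's visual field and the sensor's working volume can be approximated as cones with a shared apex at the head, and the function `in_cone`, the half-angles, and the range limits are all hypothetical values chosen for the example rather than figures from the patent.

```python
import math

def in_cone(point, apex, axis, half_angle_deg, min_range, max_range):
    """Return True if point lies inside a cone given by apex, axis, half-angle and range."""
    v = [p - a for p, a in zip(point, apex)]
    dist = math.sqrt(sum(c * c for c in v))
    if dist == 0.0 or not (min_range <= dist <= max_range):
        return False
    axis_len = math.sqrt(sum(c * c for c in axis))
    cos_angle = sum(vc * ac for vc, ac in zip(v, axis)) / (dist * axis_len)
    return cos_angle >= math.cos(math.radians(half_angle_deg))

head = (0.0, 0.0, 0.0)            # shared apex (assumption), metres
gaze = (0.0, 0.0, 1.0)            # surgeon's viewing direction
target = (0.1, -0.1, 0.5)         # hypothetical target position

# Surgeon's visual field: assumed 30 degree half-angle, up to 2 m.
surgeon_sees = in_cone(target, head, gaze, 30.0, 0.0, 2.0)

# Sensor aligned with the gaze: assumed 25 degree half-angle, 0.2 m to 1.0 m.
sensor_aligned_sees = in_cone(target, head, gaze, 25.0, 0.2, 1.0)

# Sensor tilted 40 degrees away from the gaze direction.
tilt = math.radians(40.0)
tilted_axis = (0.0, math.sin(tilt), math.cos(tilt))
sensor_tilted_sees = in_cone(target, head, tilted_axis, 25.0, 0.2, 1.0)
```

With these assumed numbers, the target is inside both the surgeon's cone and the aligned sensor cone, but falls outside the sensor cone once the sensor is tilted away from the gaze direction. In this simplified model, aligning the two volumes means that a target the surgeon can see is also likely to be within the sensor's working volume.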
