System and method for tracking and recognizing people

The technology relates to systems and methods for tracking and recognizing people and addresses problems such as the difficulty of accurately tracking multiple people in real-world scenarios.

Embodiment Construction

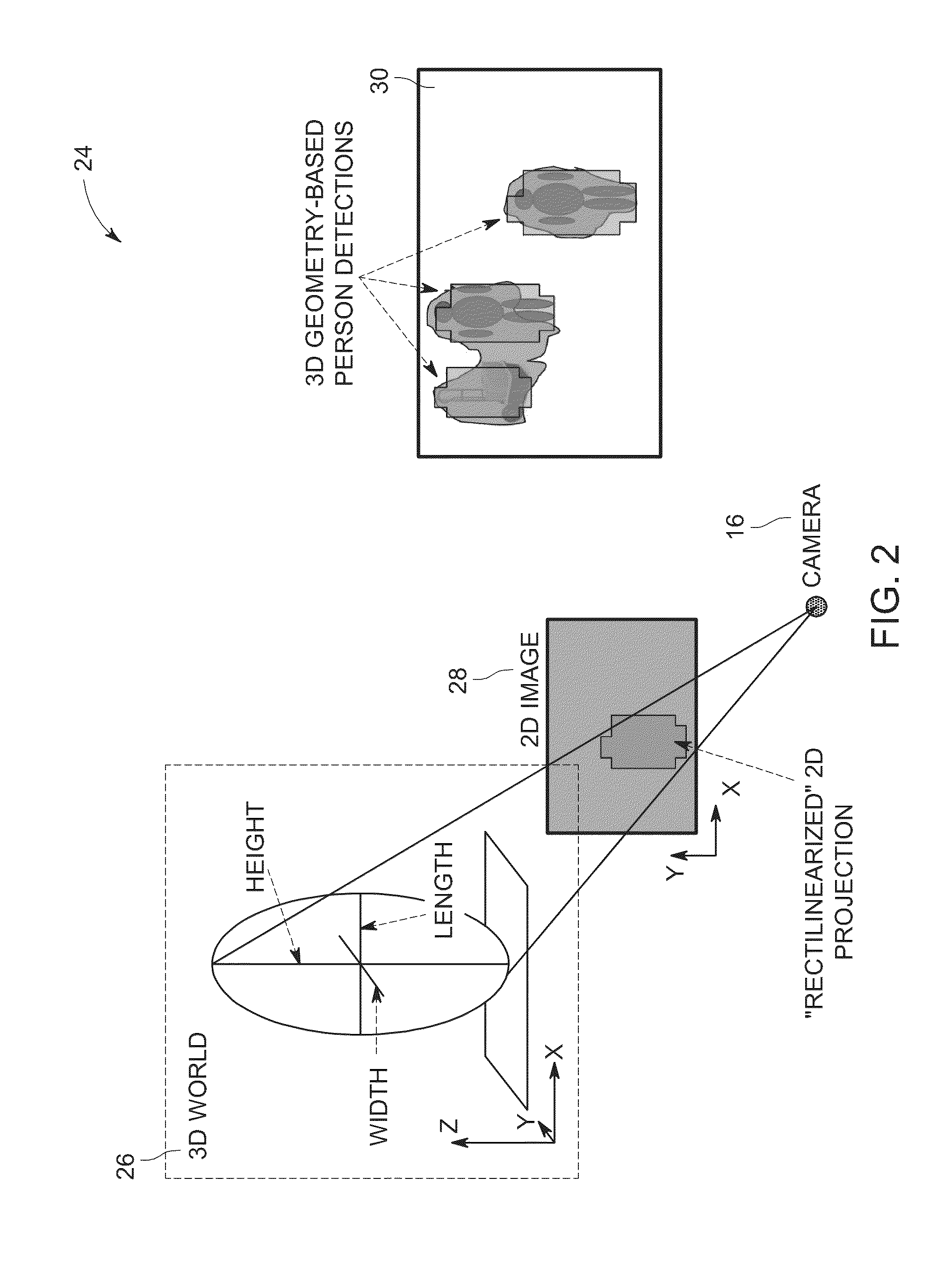

[0013] In the following paragraphs, various aspects of identifying and tracking multiple people are explained in detail, by way of example only, with reference to the figures. The present techniques for identifying and tracking multiple people will generally be described with reference to an exemplary tracking and recognition system (e.g., a trajectory-based tracking and recognition system) designated by numeral 10.

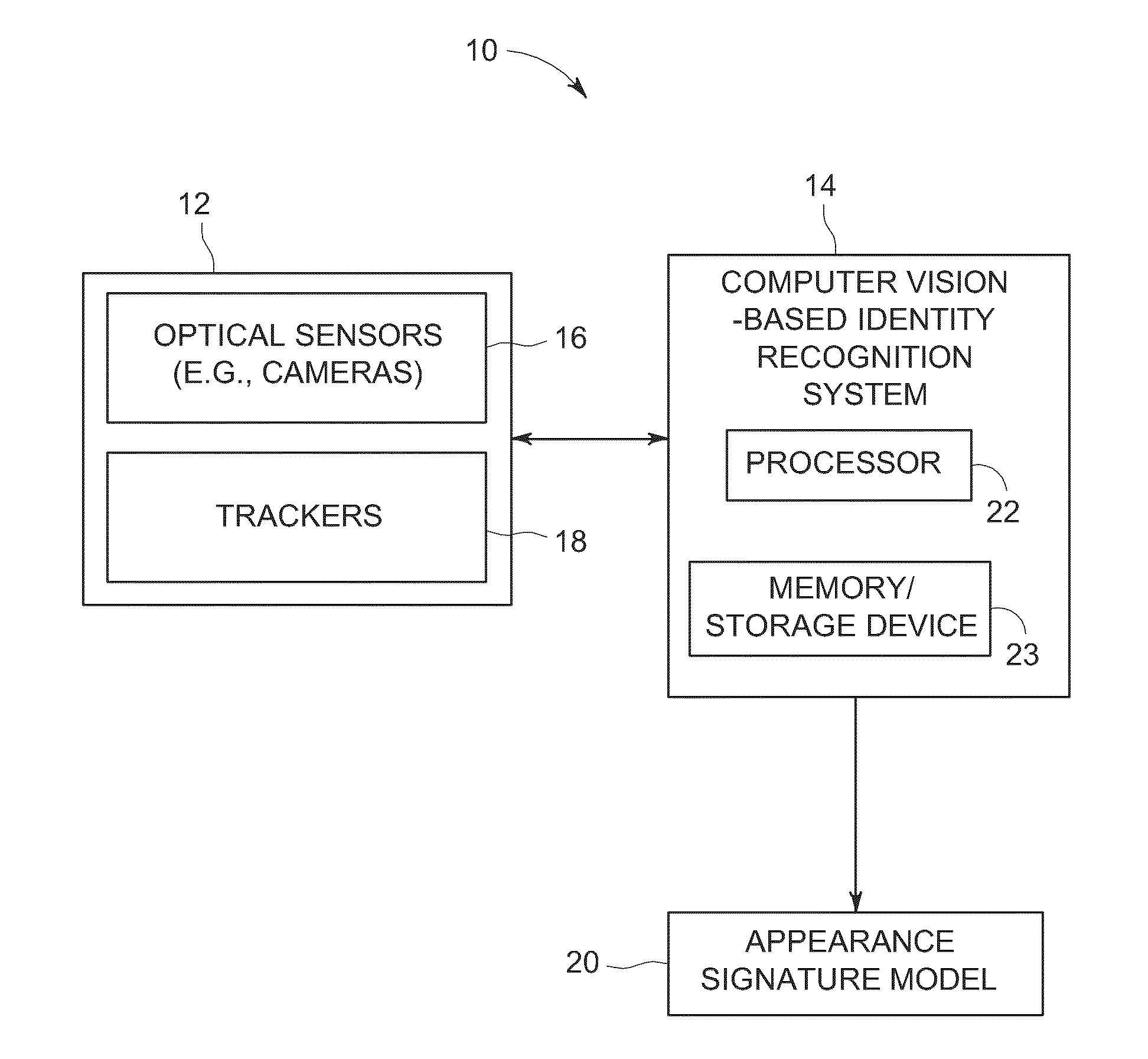

[0014] The tracking and recognition system 10 depicted in FIG. 1 is configured to track people despite tracking errors (e.g., temporary trajectory losses and/or identity switches) that may occur. These tracking errors may result in noisy data or samples that include spatiotemporal gaps. The tracking and recognition system 10 is configured to handle such noisy data so that multiple people can be recognized and tracked. The tracking and recognition system 10 includes a tracking subsystem 12 and a compu...
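The passage does not state how system 10 detects or represents these spatiotemporal gaps. As a minimal illustration only, assuming each trajectory is a time-ordered list of timestamped ground-plane positions, the sketch below splits a trajectory into tracklets wherever a temporal gap (a possible temporary trajectory loss) or an implausibly large spatial jump (a possible identity switch) appears. The `Observation` type, the `split_at_gaps` function and the thresholds `max_dt` and `max_jump` are hypothetical names and values chosen for illustration, not taken from the patent.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Observation:
    """One tracked sample (hypothetical format): time in s, position in m."""
    t: float
    x: float
    y: float


def split_at_gaps(track: List[Observation],
                  max_dt: float = 1.0,
                  max_jump: float = 2.0) -> List[List[Observation]]:
    """Split a time-ordered trajectory into tracklets.

    A new tracklet starts when the time since the previous sample exceeds
    max_dt (assumed to indicate a temporary trajectory loss) or when the
    position jumps by more than max_jump (assumed to indicate a possible
    identity switch). Both thresholds are illustrative assumptions.
    """
    tracklets: List[List[Observation]] = []
    current: List[Observation] = []
    for obs in track:
        if current:
            prev = current[-1]
            dt = obs.t - prev.t
            dist = math.hypot(obs.x - prev.x, obs.y - prev.y)
            if dt > max_dt or dist > max_jump:
                # Close the current tracklet at the gap or jump.
                tracklets.append(current)
                current = []
        current.append(obs)
    if current:
        tracklets.append(current)
    return tracklets


if __name__ == "__main__":
    track = [
        Observation(0.0, 0.0, 0.0),
        Observation(0.5, 0.3, 0.0),
        Observation(3.0, 0.6, 0.0),  # 2.5 s after the previous sample: temporal gap
        Observation(3.5, 6.0, 0.0),  # 5.4 m jump in 0.5 s: possible identity switch
    ]
    print([len(t) for t in split_at_gaps(track)])  # [2, 1, 1]
```

Splitting at suspicious points, rather than interpolating across them, keeps each fragment internally consistent; a later recognition stage could then decide which fragments belong to the same person.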
