Highly robust attitude control method for unmanned aerial vehicles based on deep reinforcement learning

A highly robust technology based on reinforcement learning, applied in the fields of attitude control, vehicle position/route/altitude control, non-electric variable control, etc. It addresses problems such as heavy computational load, controller chattering or even divergence, and complex theory, and it improves adaptability and response speed, weakens the controller quantization problem, and improves generalization ability.


Embodiment Construction

[0046] The present invention will be further described in detail below in conjunction with the examples, which are explanations of the present invention rather than limitations.

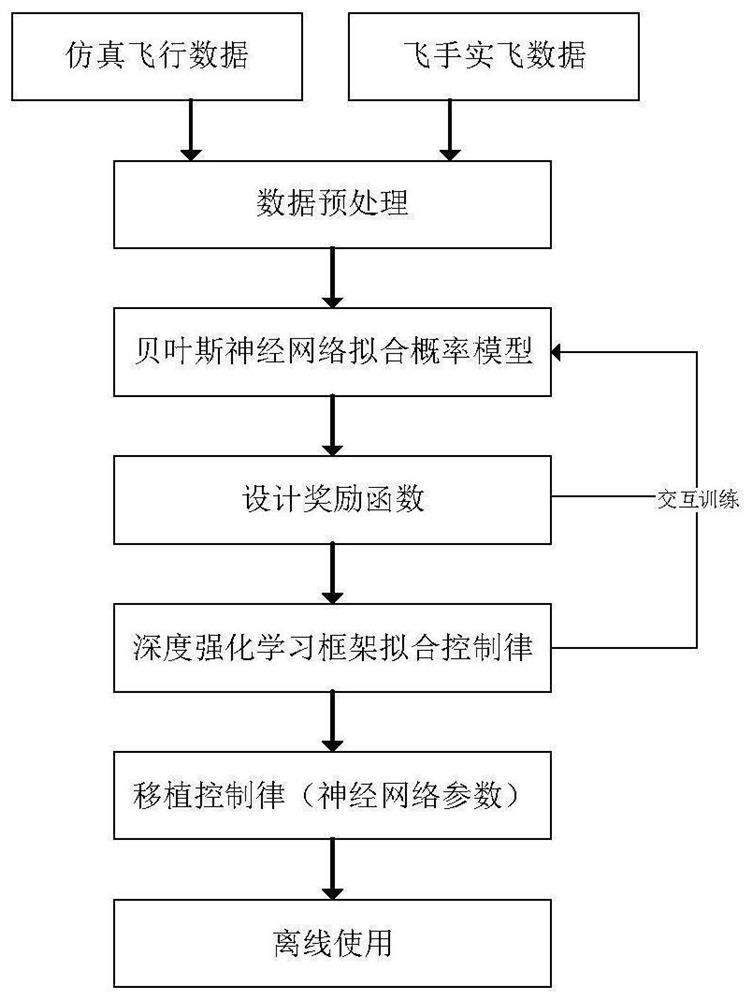

[0047] The present invention provides a highly robust attitude control method for UAVs based on deep reinforcement learning, comprising the following operations:

[0048] 1) Collect real flight data and simulated flight data of the aircraft, in the form of a data stream of the aircraft state $s_t$, the action $a_t$, and the corresponding next state $s_{t+1}$;
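A minimal sketch of how the $(s_t, a_t, s_{t+1})$ data stream might be organized, assuming each real or simulated flight is logged as a sequence of states plus the actions applied between them. The names `Transition` and `to_transitions`, and the contents of the state and action vectors, are hypothetical illustrations; the patent excerpt does not specify them.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

# Hypothetical vector types: e.g. attitude angles/rates and control commands.
State = Tuple[float, ...]
Action = Tuple[float, ...]


@dataclass(frozen=True)
class Transition:
    """One (s_t, a_t, s_{t+1}) sample from a flight log or a simulator run."""
    s_t: State
    a_t: Action
    s_next: State


def to_transitions(states: Sequence[State], actions: Sequence[Action]) -> List[Transition]:
    """Pair each state and action with the state that followed it.

    `states` must hold exactly one more entry than `actions`:
    states[t + 1] is the state reached after applying actions[t] in states[t].
    """
    if len(states) != len(actions) + 1:
        raise ValueError("need exactly one more state than actions")
    return [Transition(states[t], actions[t], states[t + 1]) for t in range(len(actions))]
```

The same function can be applied unchanged to real logs and to simulator rollouts, so both sources end up in one uniform format before they are weighted and merged.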

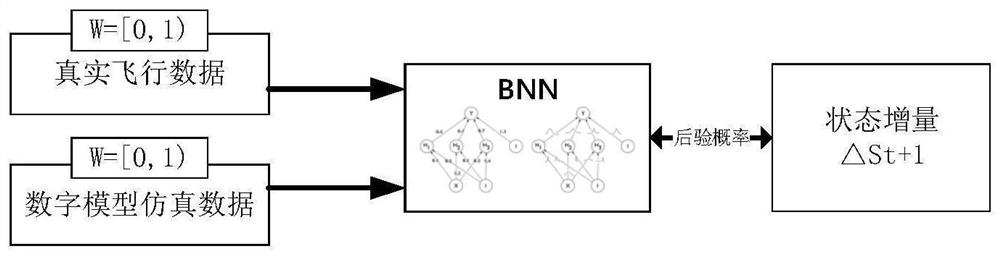

[0049] Assign preset weights to the real flight data and the simulated flight data and combine them to form a digital model of the aircraft;
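The excerpt does not say how the preset weights are applied. One plausible reading, sketched here purely as an assumption, is to give every transition a sampling weight so that real and simulated data contribute fixed shares of each training batch. The function names and the 0.7/0.3 split are hypothetical placeholders, not values from the patent.

```python
import random
from typing import List, Sequence, Tuple

# One (s_t, a_t, s_{t+1}) transition; each element is a tuple of floats.
Sample = Tuple[Tuple[float, ...], Tuple[float, ...], Tuple[float, ...]]
WeightedSample = Tuple[Sample, float]


def build_weighted_dataset(real: Sequence[Sample], sim: Sequence[Sample],
                           w_real: float = 0.7, w_sim: float = 0.3) -> List[WeightedSample]:
    """Attach a per-sample weight so the real and simulated sources carry
    w_real and w_sim of the total weight, regardless of how many samples each has.

    The 0.7 / 0.3 defaults are illustrative placeholders only.
    """
    if not real or not sim:
        raise ValueError("both real and simulated data are required")
    dataset = [(s, w_real / len(real)) for s in real]
    dataset += [(s, w_sim / len(sim)) for s in sim]
    return dataset


def sample_batch(dataset: Sequence[WeightedSample], k: int, rng: random.Random) -> List[Sample]:
    """Draw k transitions with replacement, proportionally to their weights."""
    samples = [s for s, _ in dataset]
    weights = [w for _, w in dataset]
    return rng.choices(samples, weights=weights, k=k)
```

Dividing each source weight by that source's size keeps a large volume of cheap simulated data from swamping the scarcer real flight data; only the ratio `w_real : w_sim` decides how often each source is drawn.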

[0050] Then, as preprocessing, normalize each state quantity of the aircraft in the digital model to a dimensionless value between 0 and 1;
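The patent does not name the normalization scheme. Min-max scaling, $x' = (x - x_{\min}) / (x_{\max} - x_{\min})$, is assumed here because it yields exactly the dimensionless [0, 1] range described. The helpers `fit_min_max`, `normalize`, and `denormalize` are hypothetical and work column-wise, one column per state quantity.

```python
from typing import List, Sequence, Tuple

Row = Sequence[float]


def fit_min_max(rows: Sequence[Row]) -> Tuple[List[float], List[float]]:
    """Per-state-quantity minimum and maximum over the whole dataset."""
    columns = list(zip(*rows))
    return [min(c) for c in columns], [max(c) for c in columns]


def normalize(rows: Sequence[Row], lo: List[float], hi: List[float]) -> List[List[float]]:
    """Map each quantity to [0, 1] with x' = (x - min) / (max - min).

    A quantity that never varies (max == min) is mapped to 0.0 to avoid dividing by zero.
    """
    return [[(x - l) / (h - l) if h > l else 0.0 for x, l, h in zip(row, lo, hi)]
            for row in rows]


def denormalize(rows: Sequence[Row], lo: List[float], hi: List[float]) -> List[List[float]]:
    """Invert normalize(), e.g. to turn a predicted normalized state back into physical units."""
    return [[l + x * (h - l) for x, l, h in zip(row, lo, hi)] for row in rows]
```

In this sketch the bounds would be fitted once on the merged real and simulated data and then reused unchanged at run time; states outside the fitted range would then map slightly outside [0, 1] unless they are clipped.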

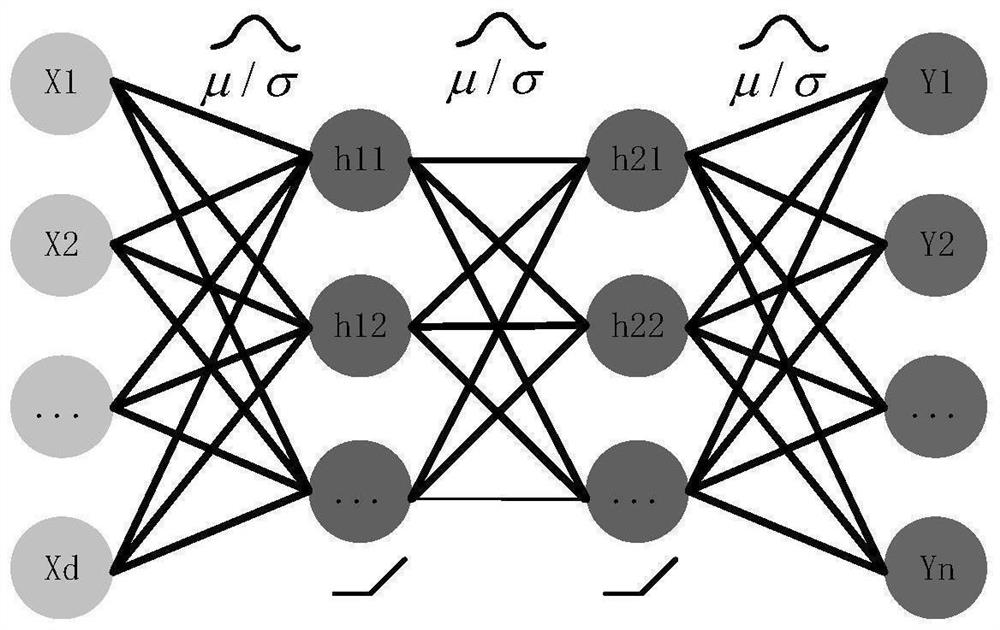

[0051] 2) The preprocessed digital model of the aircraft is used as the input to a Bayesian neural network, the network weight distribution is randomly initialized, and the aircraft dynamics model introduced by the...
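The excerpt breaks off before the network details, so the following only illustrates what a Bayesian neural network with a randomly initialized weight distribution can look like; it is not the patented design. It assumes a mean-field Gaussian distribution over every weight, parameterized by a mean and a softplus-transformed spread (as in the Bayes-by-Backprop approach), with weights drawn via the reparameterization trick. The class names, layer sizes, and initial values are hypothetical, and the training step (e.g. maximizing an evidence lower bound) is omitted.

```python
import math
import random
from typing import List, Sequence, Tuple


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x)); keeps every standard deviation positive."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


class BayesianLinear:
    """Dense layer whose weights and biases are independent Gaussians
    N(mu, softplus(rho)^2) instead of single point values (mean-field assumption)."""

    def __init__(self, n_in: int, n_out: int, rng: random.Random,
                 init_std: float = 0.1, init_rho: float = -3.0) -> None:
        self.rng = rng
        # Random initialization of the weight *distribution*: random means,
        # small identical spreads (softplus(-3) is roughly 0.049).
        self.w_mu = [[rng.gauss(0.0, init_std) for _ in range(n_in)] for _ in range(n_out)]
        self.w_rho = [[init_rho] * n_in for _ in range(n_out)]
        self.b_mu = [0.0] * n_out
        self.b_rho = [init_rho] * n_out

    def _draw(self, mu: float, rho: float) -> float:
        # Reparameterization: w = mu + sigma * eps with eps ~ N(0, 1).
        return mu + softplus(rho) * self.rng.gauss(0.0, 1.0)

    def forward(self, x: Sequence[float]) -> List[float]:
        """One stochastic pass: a fresh weight sample is drawn on every call."""
        out = []
        for w_mu, w_rho, b_mu, b_rho in zip(self.w_mu, self.w_rho, self.b_mu, self.b_rho):
            acc = self._draw(b_mu, b_rho)
            for xi, mu, rho in zip(x, w_mu, w_rho):
                acc += xi * self._draw(mu, rho)
            out.append(acc)
        return out


class BayesianDynamicsModel:
    """Hypothetical one-hidden-layer BNN mapping [s_t, a_t] to a predicted s_{t+1}."""

    def __init__(self, state_dim: int, action_dim: int, hidden: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.hidden = BayesianLinear(state_dim + action_dim, hidden, rng)
        self.output = BayesianLinear(hidden, state_dim, rng)

    def sample_prediction(self, s: Sequence[float], a: Sequence[float]) -> List[float]:
        h = [math.tanh(v) for v in self.hidden.forward(list(s) + list(a))]
        return self.output.forward(h)

    def predict(self, s: Sequence[float], a: Sequence[float],
                n_samples: int = 20) -> Tuple[List[float], List[float]]:
        """Monte Carlo mean and standard deviation over weight samples;
        the spread reflects the model's uncertainty about the dynamics."""
        draws = [self.sample_prediction(s, a) for _ in range(n_samples)]
        mean = [sum(col) / n_samples for col in zip(*draws)]
        std = [math.sqrt(sum((v - m) ** 2 for v in col) / n_samples)
               for col, m in zip(zip(*draws), mean)]
        return mean, std
```

Averaging several stochastic passes gives both a mean next-state prediction and a spread; in a setup like this, a large spread marks state-action regions that the real and simulated data cover poorly.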

