Twin network target tracking method based on different measurement criteria

A twin (Siamese) network target tracking technology, applied in biological neural network models, image data processing, image enhancement and related fields. It can solve problems such as tracking failure, interference from similar targets, and poor robustness to changes in target appearance, and it achieves robustness to tracking drift and target appearance changes.

AI Technical Summary

Problems solved by technology

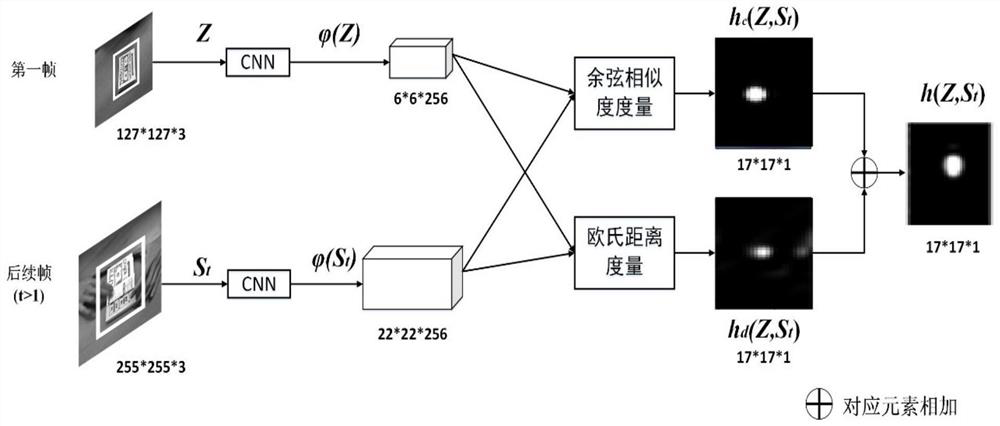

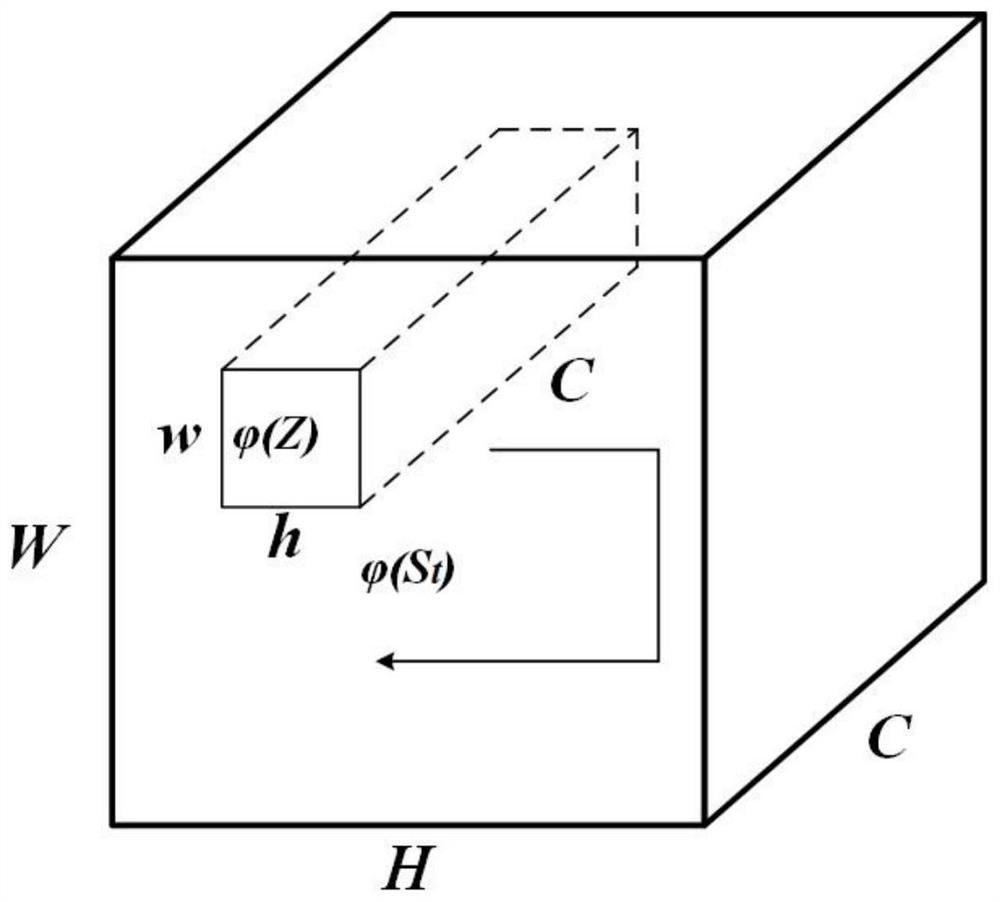

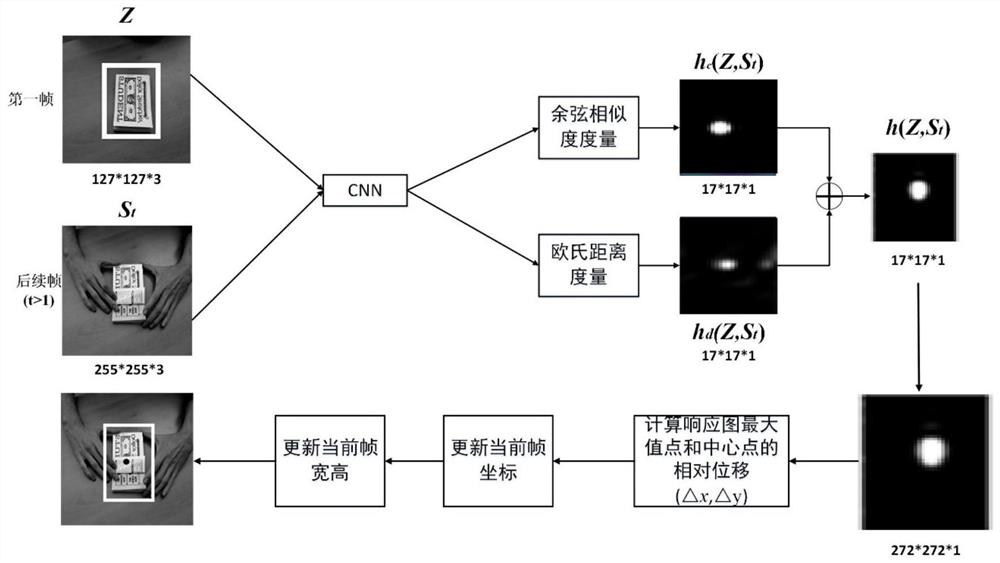

Method used

Image

Examples

Embodiment 1

[0102] Step 1: select the AlexNet network pre-trained on the ImageNet dataset as the feature extraction network of the twin network.

[0103] Table 1 Feature extraction network parameter table

[0104]

[0105]

[0106] The parameters of the feature extraction network are shown in Table 1. The network consists of 5 convolutional layers and 2 pooling layers. The first two convolutional layers are each followed by a max pooling layer. A dropout (random deactivation) layer and a ReLU nonlinear activation function are added after each of the first 4 convolutional layers.
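The layer ordering described in [0106] can be sketched as a small feature-map size calculation. The contents of Table 1 are not available here, so the kernel sizes and strides below are assumptions borrowed from a common AlexNet-style configuration used in Siamese trackers. They are not the patent's own values, and the name `output_size` is hypothetical. Dropout and ReLU do not change the spatial size, so they are omitted.

```python
# Assumed (kind, kernel, stride) per layer; Table 1 is not available,
# so these numbers are illustrative only.
LAYERS = [
    ("conv", 11, 2), ("pool", 3, 2),   # conv1 followed by max pooling
    ("conv", 5, 1), ("pool", 3, 2),    # conv2 followed by max pooling
    ("conv", 3, 1),                    # conv3
    ("conv", 3, 1),                    # conv4
    ("conv", 3, 1),                    # conv5 (no dropout/ReLU after it)
]


def output_size(input_size, layers=LAYERS):
    """Spatial side length of the feature map, assuming no padding."""
    size = input_size
    for _kind, kernel, stride in layers:
        # Standard unpadded convolution/pooling size formula.
        size = (size - kernel) // stride + 1
    return size


if __name__ == "__main__":
    # 127 and 255 are assumed input sizes, chosen only for illustration.
    for side in (127, 255):
        print(side, "->", output_size(side))
```

With these assumed parameters, a smaller template crop and a larger search crop give feature maps of different sizes, which is what lets the template features be compared against every location in the search features.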

[0107] Step 2: obtain the tracking video and manually select the region containing the target in the first frame. Let (x, y) be the coordinates of the target's center point in the first frame, and let m and n be the width and height of the target region, respectively. Crop a square region with side length z_sz centered on the point (x, y). The calculation formula of z_sz is a...
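The cropping in Step 2 can be illustrated with a minimal sketch. The formula for z_sz is cut off in the text above, so z_sz is simply passed in as an argument here. The function name `crop_square`, the row-major list-of-lists image layout, and the choice to fill out-of-frame pixels with a constant are all assumptions made for illustration.

```python
def crop_square(image, x, y, z_sz, fill=0):
    """Return a z_sz-by-z_sz window of `image` centered on (x, y).

    `image` is a list of rows (image[row][col]); x is the column and
    y is the row of the target center. Pixels that fall outside the
    frame are set to `fill` (an assumed padding policy).
    """
    height = len(image)
    width = len(image[0])
    half = z_sz // 2
    top = int(round(y)) - half
    left = int(round(x)) - half

    patch = []
    for r in range(top, top + z_sz):
        row = []
        for c in range(left, left + z_sz):
            inside = 0 <= r < height and 0 <= c < width
            row.append(image[r][c] if inside else fill)
        patch.append(row)
    return patch


if __name__ == "__main__":
    # Toy 5x5 "frame" whose pixel value encodes its position.
    frame = [[10 * r + c for c in range(5)] for r in range(5)]
    for line in crop_square(frame, x=2, y=2, z_sz=3):
        print(line)
```

Padding matters when the target sits near the frame border, because the square window can then extend past the image edge.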
