Human body action classification method and device, terminal equipment and storage medium

A human body action classification method, applied in the field of image analysis, that can solve the problems of low efficiency in obtaining human action classification results, slow calculation speed, and high computational complexity.

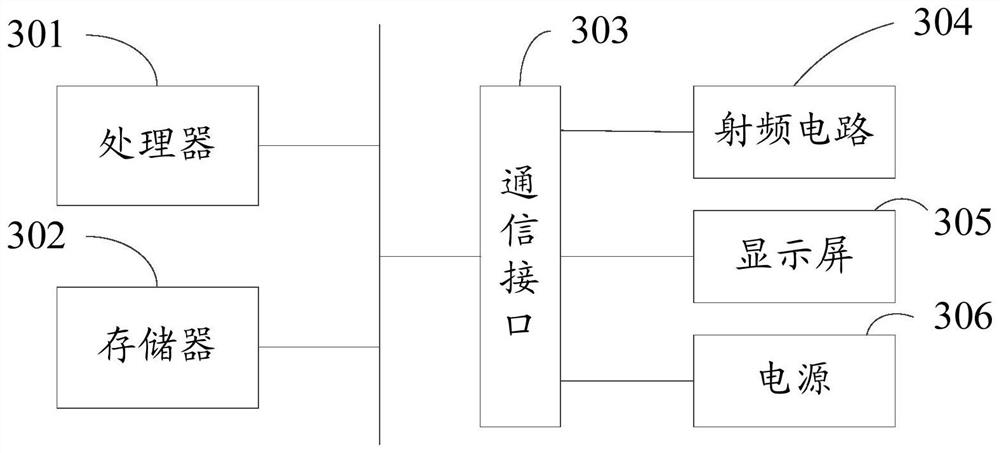

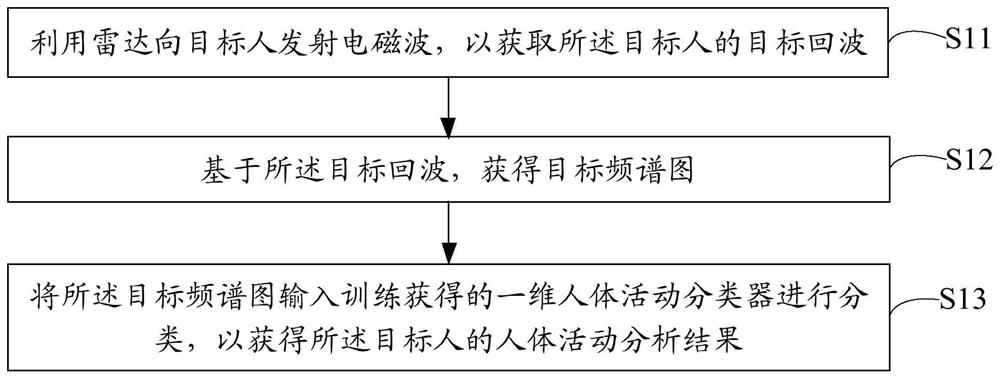

Detailed Description of the Embodiments

[0060] The technical solutions in the embodiments of the present invention are described clearly and completely below with reference to the accompanying drawings. The described embodiments are only some of the embodiments of the present invention, not all of them. All other embodiments obtained by persons of ordinary skill in the art on the basis of these embodiments, without creative effort, fall within the protection scope of the present invention.

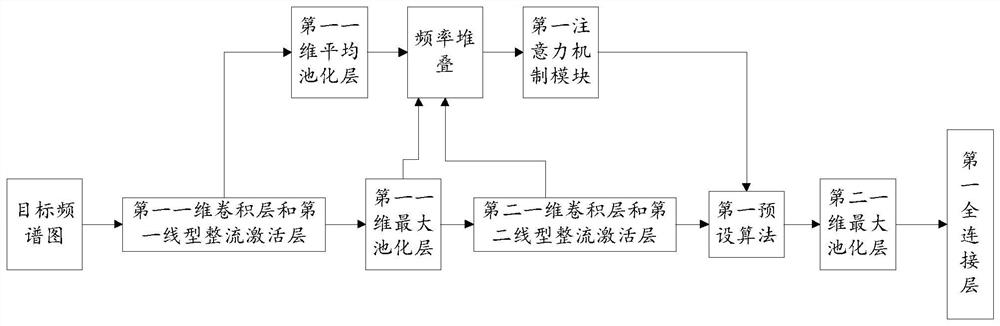

[0061] With the development of the GPU (Graphics Processing Unit), the deep convolutional neural network (DCNN), with its powerful feature extraction ability, has come to play an important role in radar-based (especially micro-Doppler radar-based) human action classification. Before a radar signal is fed to the DCNN, it is usually preprocessed to make it more expressive, and the most common preprocessing method is to convert the radar signal into...
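
The paragraph above is cut off before it names the target representation, so the following is not a reconstruction of the patent's method. Purely as an illustration of what a preprocessing step ahead of a DCNN can look like, the sketch below assumes a time-frequency representation (a spectrogram computed with a short-time Fourier transform), which is widely used in micro-Doppler analysis. The function name `stft_magnitude`, the window and hop sizes, and the synthetic test tone are all hypothetical choices made for this example.

```python
import cmath
import math


def stft_magnitude(signal, window_size=32, hop=16):
    """Return a list of frames, each a list of DFT magnitudes (a spectrogram).

    Hypothetical helper for illustration; not taken from the patent text.
    """
    # A Hann window tapers each frame to reduce spectral leakage.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / (window_size - 1))
              for n in range(window_size)]
    frames = []
    for start in range(0, len(signal) - window_size + 1, hop):
        frame = [signal[start + n] * window[n] for n in range(window_size)]
        # Naive O(N^2) DFT, adequate for a sketch; an FFT would be used in practice.
        spectrum = [
            abs(sum(frame[n] * cmath.exp(-2j * math.pi * k * n / window_size)
                    for n in range(window_size)))
            for k in range(window_size)
        ]
        frames.append(spectrum)
    return frames


# Synthetic complex tone centred on DFT bin 4 (made-up data, not a radar recording).
signal = [cmath.exp(2j * math.pi * 4 * n / 32) for n in range(128)]
spec = stft_magnitude(signal)

# Index of the strongest frequency bin in each time frame.
peak_bins = [max(range(len(frame)), key=frame.__getitem__) for frame in spec]
```

Each row of `spec` is one time frame and each column one frequency bin, so the result can be treated as a single-channel image by a convolutional network. Whether the patent uses this representation or a different one cannot be determined from the truncated text.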
