A human-computer interaction testing method and system for mobile terminals

This mobile-terminal human-computer interaction technology is applied in fields such as input/output for user-computer interaction, mechanical mode conversion, and computer components. It addresses problems such as reduced test accuracy, the lack of a unified standard, and degraded picture clarity, and it improves analysis accuracy, comfort of use, and test effectiveness.


Examples

Specific Embodiment 1

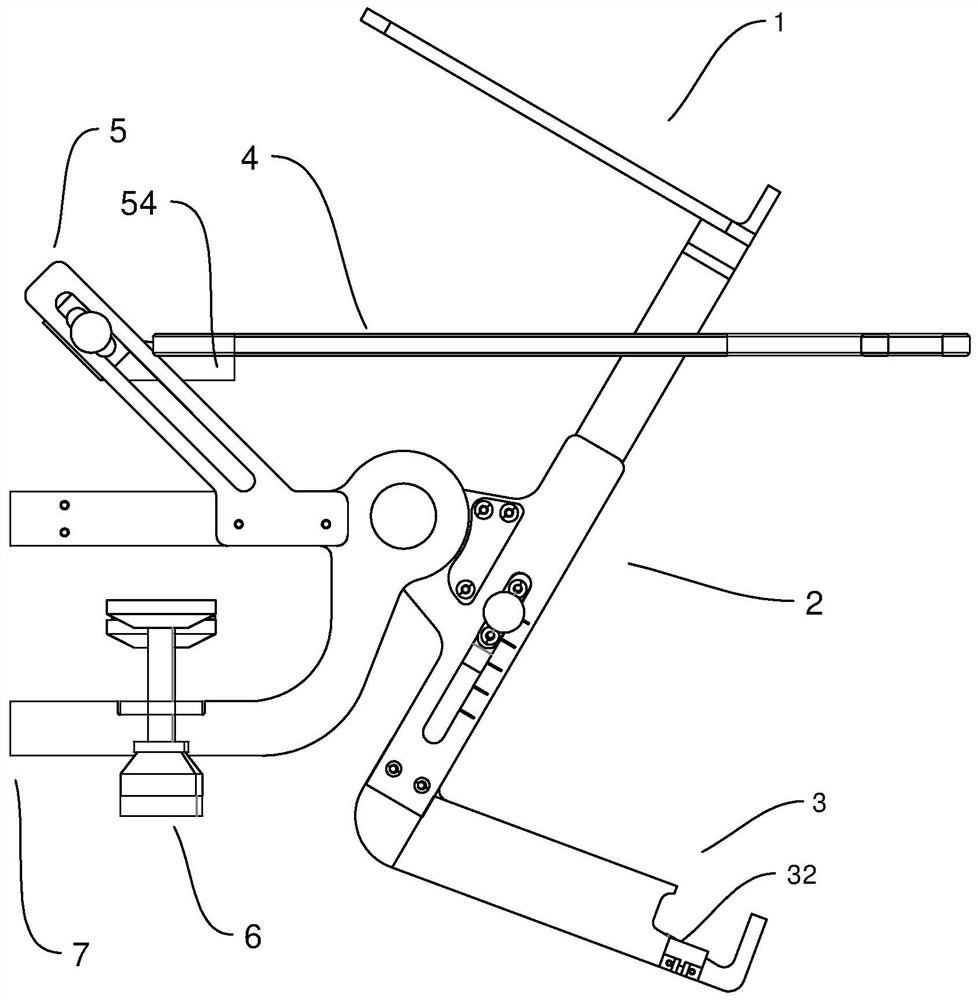

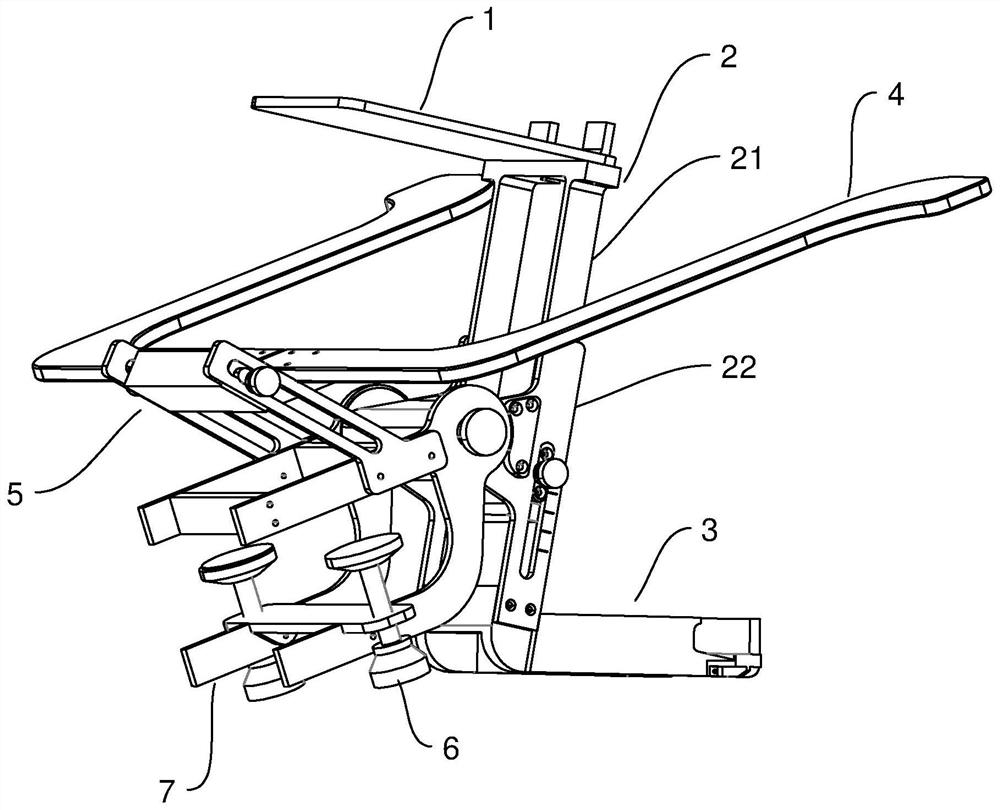

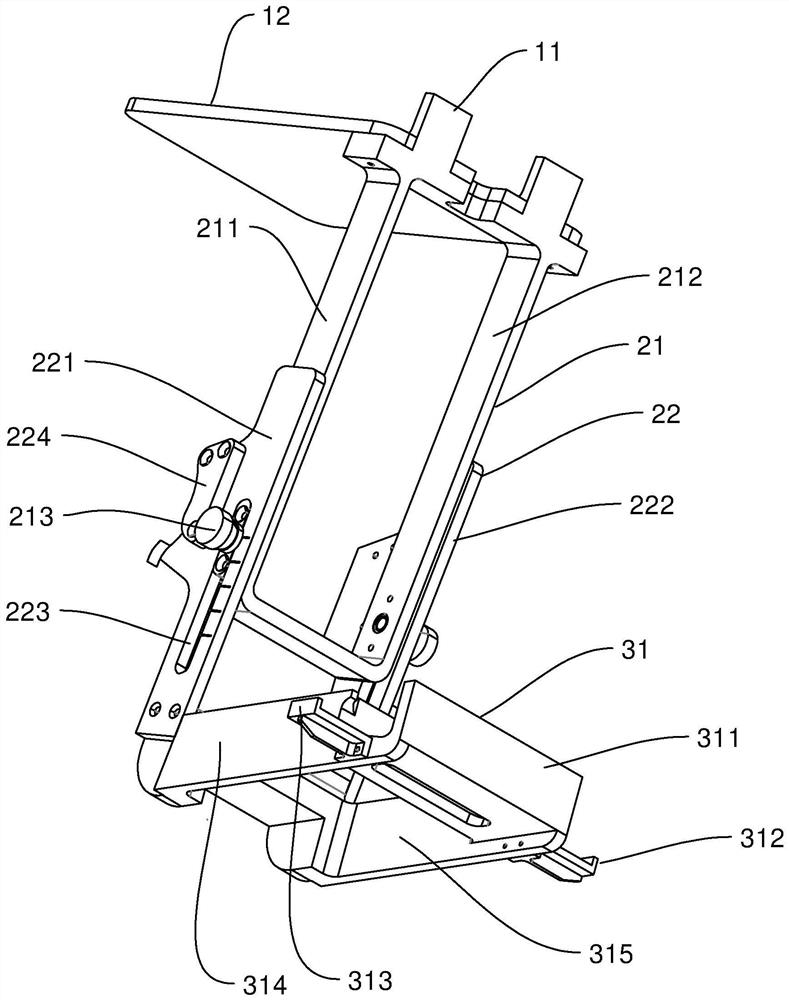

[0032] A human-computer interaction test system for mobile terminals according to the present application, as shown in Figures 1 and 2, includes a mobile terminal sub-device 1, a support base 2, an eye-tracking sub-device 3, a booster device, and an arm support sub-device. The arm support sub-device includes a support plate 4 and an arm support frame 5; the booster device includes a clamping device 6 and a support frame 7.

[0033] The mobile terminal bracket sub-device 1 is fixed to one end of the support base 2, and the eye-tracking sub-device 3 is fixed to the other end. The two sub-devices are thus arranged on opposite sides of the support base 2, and each is fixedly connected to it.

[0034] The eye-tracking sub-device 3 collects the convergence point of the user's gaze on the mobile terminal, and the mobile terminal sub-device 1 supports the mobile terminal.

[0035] Specifically, t...

Specific Embodiment 2

[0074] The human-computer interaction test system for a mobile terminal of this embodiment differs from Embodiment 1 in that it also includes an electric adjustment sub-device. Stepper motors are installed at the joint of the support base 2, at the joint between the eye-tracking sub-device 3 and the support base 2, at the joint between the arm support frame 5 and the support frame 7, and at the joint between the arm support frame 5 and the arm support fixed platform 54. By controlling the stepping state of each motor, the relative position and angle between the components can be controlled.
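As a rough illustration of how a stepping state could translate into a joint angle, the sketch below converts a desired angle change into a signed step count for each motor. The joint names, the function names, and the 1.8° step angle are assumptions made for illustration; the source does not specify motor parameters or control logic.

```python
def steps_for_angle(current_deg, target_deg, step_angle_deg=1.8):
    """Return the signed number of whole steps needed to rotate a joint.

    step_angle_deg is an assumed motor parameter (1.8 degrees per full step);
    positive results mean one rotation direction, negative the other.
    """
    return round((target_deg - current_deg) / step_angle_deg)


def plan_adjustment(current, target, step_angle_deg=1.8):
    """Compute a step count per joint from current and target angles.

    Both arguments are dicts keyed by hypothetical joint names.
    """
    return {
        joint: steps_for_angle(current[joint], target[joint], step_angle_deg)
        for joint in target
    }


# Hypothetical joints: the eye tracker tilts up by 90 degrees,
# the arm support tilts down by 45 degrees.
plan = plan_adjustment(
    current={"eye_tracker": 0.0, "arm_support": 45.0},
    target={"eye_tracker": 90.0, "arm_support": 0.0},
)
```

Rounding to whole steps means the reachable angles are quantized to multiples of the step angle, so a real controller would need to account for that residual error.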

[0075] The electric adjustment sub-device adjusts the eye-tracking system according to the position information of the eye-tracking system and adjusts the mobile terminal according to the position information of the mobile terminal, thereby automatically adjusting the positions of both; it adjusts the arm support according to the height...

Specific Embodiment 3

[0078] A human-computer interaction test method for a mobile terminal according to the present application is based on a human-computer interaction test device for a mobile terminal. The method includes: exporting the screen information of the mobile terminal and converting it into screen video information; obtaining the trajectory information of the gaze convergence point of the human eye and converting it into eye-track video information; and collecting the screen video information and the eye-track video information and then, through coordinate transformation, superimposing the two for the same moment on the same screen. This yields the trajectory of the gaze convergence point on the screen of the mobile terminal, from which the human-computer interaction test results are obtained.
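The superposition step can be sketched as follows, assuming the coordinate transformation is a simple per-axis scale and offset and that the two streams are aligned by nearest timestamp. Both choices, along with the `GazeSample` type and all function and parameter names, are hypothetical; the source does not state which transformation or synchronization scheme is used.

```python
from bisect import bisect_left
from dataclasses import dataclass


@dataclass
class GazeSample:
    t: float  # timestamp in seconds
    x: float  # gaze x in the eye tracker's own coordinate frame
    y: float  # gaze y in the eye tracker's own coordinate frame


def to_screen(sample, scale_x, scale_y, offset_x, offset_y):
    """Map a gaze point into screen pixels (assumed scale-and-offset transform)."""
    return (sample.x * scale_x + offset_x, sample.y * scale_y + offset_y)


def nearest_sample(samples, t):
    """Return the sample closest in time to t; samples must be sorted by t and non-empty."""
    times = [s.t for s in samples]
    i = bisect_left(times, t)
    candidates = samples[max(i - 1, 0):i + 1]
    return min(candidates, key=lambda s: abs(s.t - t))


def overlay_trajectory(frame_times, samples, scale_x, scale_y, offset_x, offset_y):
    """Pair each screen-video frame time with the gaze point in screen pixels."""
    return [
        (t, to_screen(nearest_sample(samples, t), scale_x, scale_y, offset_x, offset_y))
        for t in frame_times
    ]


# Example with normalized gaze coordinates mapped onto an assumed 1080x1920 screen.
samples = [GazeSample(0.0, 0.0, 0.0), GazeSample(0.1, 0.5, 0.5), GazeSample(0.2, 1.0, 1.0)]
trajectory = overlay_trajectory([0.0, 0.09, 0.2], samples, 1080, 1920, 0, 0)
```

The resulting list of timestamped screen points is the gaze trajectory on the screen; drawing each point onto the matching video frame would produce the superimposed view.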

[0079] The screen information of the mobile terminal includes screen image information and touch screen information, and the touch screen information includes touch screen orientation inf...

