SLAM method based on fusion of monocular vision feature method and direct method

A monocular-vision SLAM technique that fuses the feature method and the direct method, applied in surveying and mapping, navigation, photo interpretation, image data processing, etc. It addresses problems such as failure and achieves the effect of eliminating accumulated errors.

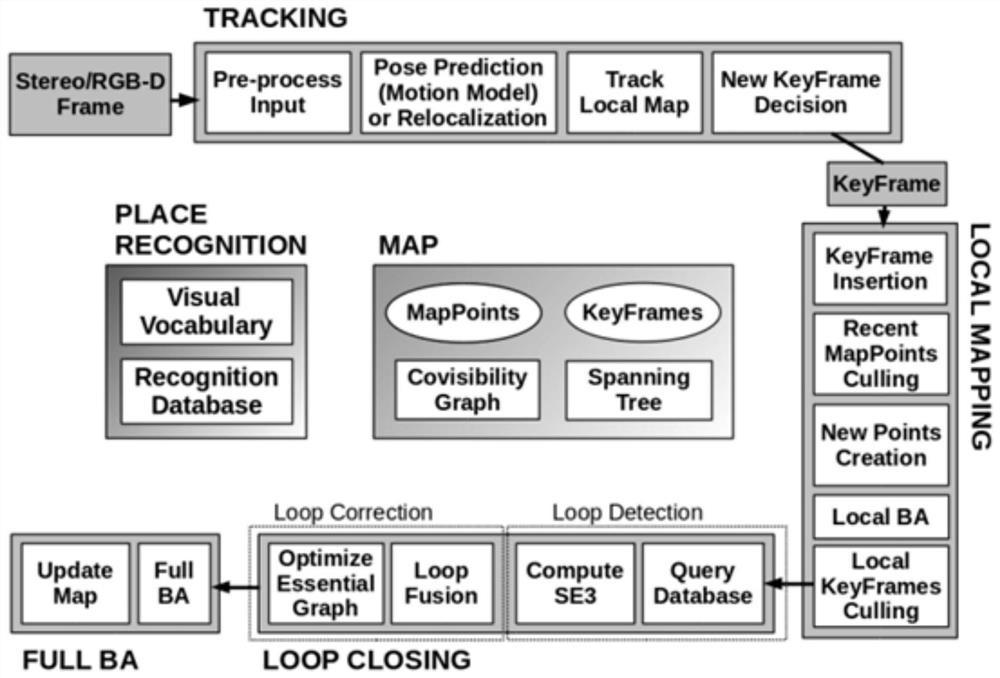

Examples

Embodiment 1

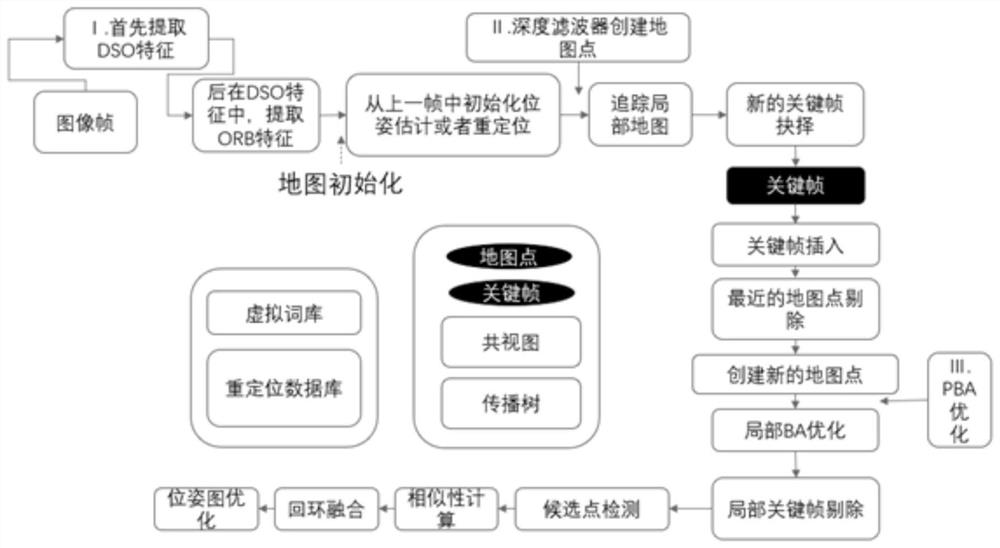

[0030] An embodiment of the present invention provides a monocular-vision SLAM method that fuses the feature method and the direct method. The method includes the following steps:

[0031] 101: An initial image is acquired from the monocular camera sensor and converted into a grayscale image using an algorithm provided by OpenCV; DSO features are then extracted from the grayscale image;

[0032] In a specific implementation, extracting DSO features requires only the gray-level gradients present in the grayscale image, so DSO features are easier to extract than ORB features, and the set of DSO features contains the ORB features.

[0033] OpenCV is a programming function library for real-time computer vision. It is well known to those skilled in the art and is not described in detail in this embodiment of the present invention.
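
Step 101 can be illustrated with a minimal sketch, given here under stated assumptions rather than as the patented procedure. The source says only that DSO features depend on the gray gradient, so the selection rule below (keep the strongest-gradient pixel in each block if it exceeds a threshold) is an assumption in the spirit of DSO point selection. The names `to_gray`, `select_gradient_points`, `block`, and `min_grad` are hypothetical. The grayscale conversion is written in NumPy with the standard luma weights; in an OpenCV pipeline the equivalent call would be `cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)`.

```python
import numpy as np


def to_gray(bgr):
    """Convert a BGR image (H x W x 3) to a float grayscale image.

    Uses the standard luma weights (0.299 R, 0.587 G, 0.114 B).
    """
    b, g, r = bgr[..., 0], bgr[..., 1], bgr[..., 2]
    return 0.114 * b + 0.587 * g + 0.299 * r


def select_gradient_points(gray, block=4, min_grad=10.0):
    """Pick at most one high-gradient pixel per block (assumed rule).

    Returns a list of (x, y) pixel coordinates whose gradient
    magnitude is the largest in their block and at least min_grad.
    """
    gy, gx = np.gradient(gray.astype(np.float64))  # d/dy, d/dx
    mag = np.hypot(gx, gy)
    h, w = gray.shape
    points = []
    for y0 in range(0, h, block):
        for x0 in range(0, w, block):
            cell = mag[y0:y0 + block, x0:x0 + block]
            iy, ix = np.unravel_index(np.argmax(cell), cell.shape)
            if cell[iy, ix] >= min_grad:
                points.append((x0 + ix, y0 + iy))
    return points


if __name__ == "__main__":
    # Synthetic image with a vertical step edge between columns 3 and 4.
    img = np.zeros((8, 8, 3), dtype=np.uint8)
    img[:, 4:] = 255
    print(select_gradient_points(to_gray(img)))
```

Because the rule needs only image gradients, it selects points on edges as well as corners, which is consistent with the statement in [0032] that DSO features are easier to extract than ORB features.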

[0034] 102: Extract ORB features from the DSO features, and perform feature-pair matching on the DSO features and the ORB features, specifically: ...
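
The details of step 102 are not available in this text, so the following is only a generic illustration of how binary descriptors such as ORB's are commonly matched, not the matching procedure of the invention. It assumes descriptors are packed as rows of `uint8` bytes (32 bytes for a 256-bit descriptor) and pairs them by Hamming distance with a mutual-nearest-neighbour (cross-check) test. The names `hamming_matrix`, `cross_check_match`, and `max_dist` are hypothetical.

```python
import numpy as np


def hamming_matrix(desc_a, desc_b):
    """Pairwise Hamming distances between packed binary descriptors.

    desc_a: (Na, B) uint8, desc_b: (Nb, B) uint8. Returns (Na, Nb) ints.
    """
    xor = desc_a[:, None, :] ^ desc_b[None, :, :]
    return np.unpackbits(xor, axis=2).sum(axis=2)


def cross_check_match(desc_a, desc_b, max_dist=64):
    """Return (i, j) pairs that are mutual nearest neighbours.

    A pair is kept only if j is the closest descriptor to i, i is the
    closest descriptor to j, and their distance is at most max_dist.
    """
    d = hamming_matrix(desc_a, desc_b)
    best_b = d.argmin(axis=1)  # nearest b for every a
    best_a = d.argmin(axis=0)  # nearest a for every b
    return [
        (i, int(j))
        for i, j in enumerate(best_b)
        if best_a[j] == i and d[i, j] <= max_dist
    ]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    a = rng.integers(0, 256, size=(5, 32), dtype=np.uint8)
    b = a[::-1].copy()  # same descriptors in reverse order
    b[:, 0] ^= 1        # flip one bit in each to simulate noise
    print(cross_check_match(a, b))
```

The cross-check discards one-sided matches, a common way to reduce false pairs before pose estimation.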
