Multi-modal emotion interaction method, intelligent equipment, system, electronic equipment and medium

A multi-modal interaction method, applied in the field of human-computer emotional interaction, that addresses the problems of inconvenient upgrading and maintenance for users, limited nursing functions, and the inability to correctly understand the user's emotional state.

Embodiment Construction

[0077] The present invention will be further described in detail below in conjunction with the accompanying drawings and embodiments.

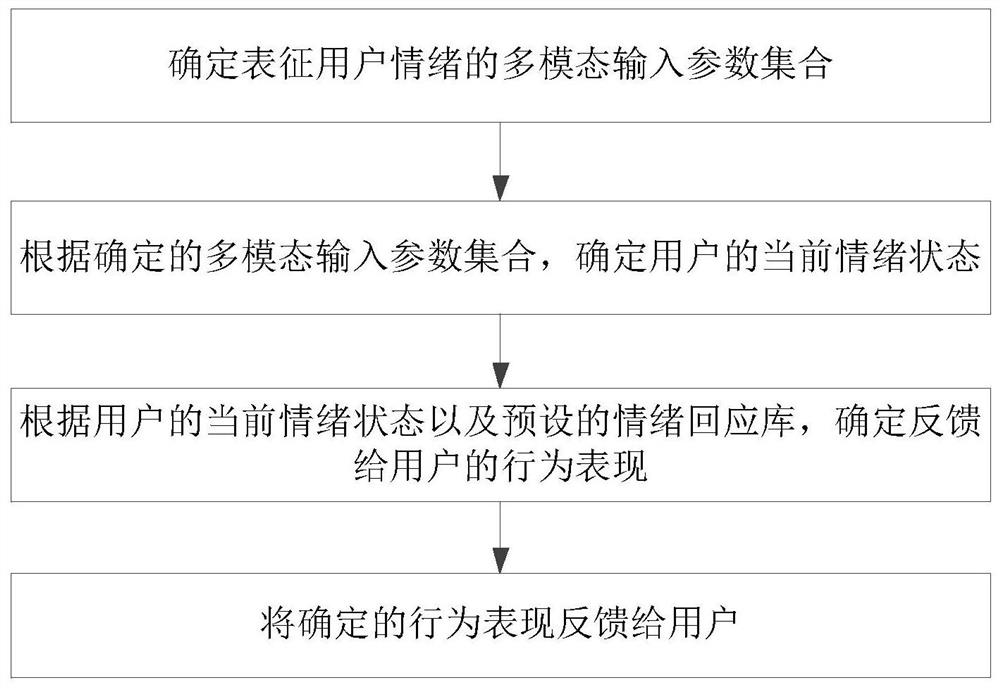

[0078] As shown in Figure 1, this embodiment provides a multi-modal emotional interaction method for realizing emotional interaction with users. Specifically, the multi-modal emotional interaction method of this embodiment includes the following Steps 1 to 4:

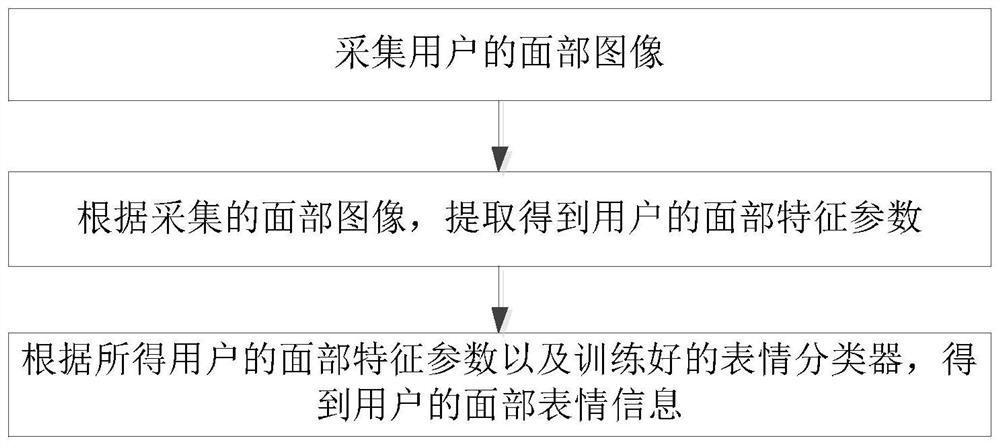

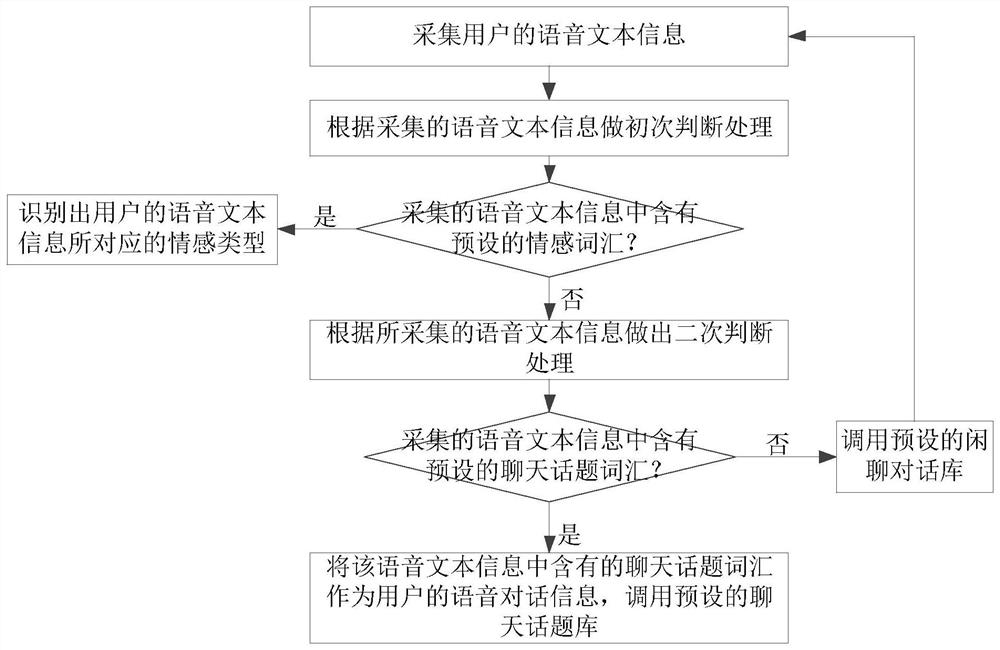

[0079] Step 1: determine the multi-modal input parameter set that characterizes the user's emotion. The multi-modal input parameter set includes at least facial expression information, voice dialogue text information, and body movement information that characterize the user's emotion. In this embodiment, the facial expression information characterizing the user's emotion covers six facial expressions: joy, fear, surprise, sadness, disgust, and anger; the voice dialogue text information characterizing the user's emotion can be the voice containing emotional vocabulary or the voice con...
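The input parameter set described in Step 1 can be pictured as a small record with one field per modality. The following is only a minimal sketch under stated assumptions: the class names, field names, the `parse_expression` helper, and the sample values are hypothetical and do not come from the patent text; only the three modalities and the six facial expressions are taken from the description above.

```python
from dataclasses import dataclass
from enum import Enum


class FacialExpression(Enum):
    """The six facial expressions named in Step 1."""
    JOY = "joy"
    FEAR = "fear"
    SURPRISE = "surprise"
    SADNESS = "sadness"
    DISGUST = "disgust"
    ANGER = "anger"


@dataclass(frozen=True)
class MultiModalInput:
    """Hypothetical container for the multi-modal input parameter set."""
    facial_expression: FacialExpression
    dialogue_text: str  # text obtained from the user's voice dialogue
    body_movement: str  # free-form label; an assumption, not enumerated in the source


def parse_expression(label: str) -> FacialExpression:
    """Map a recognizer label to one of the six expressions.

    Raises ValueError for any label outside the six listed expressions.
    """
    return FacialExpression(label.strip().lower())


# Illustrative values only; they are not taken from the patent.
sample = MultiModalInput(
    facial_expression=parse_expression("Sadness"),
    dialogue_text="I feel a bit down today",
    body_movement="head lowered",
)
```

Restricting the facial expression field to an enumeration means that a label outside the six listed expressions is rejected when the record is built, rather than being passed on to later steps.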

