Dual-view push-grasp collaborative sorting network, and sorting method and system thereof

A dual-view network technology, applied in sorting, biological neural network models, instruments, etc. It addresses problems such as poor generalization ability and a low grasp success rate, and achieves a high grasp success rate, improved perception, and avoidance of missing object information.

Examples

Embodiment 1

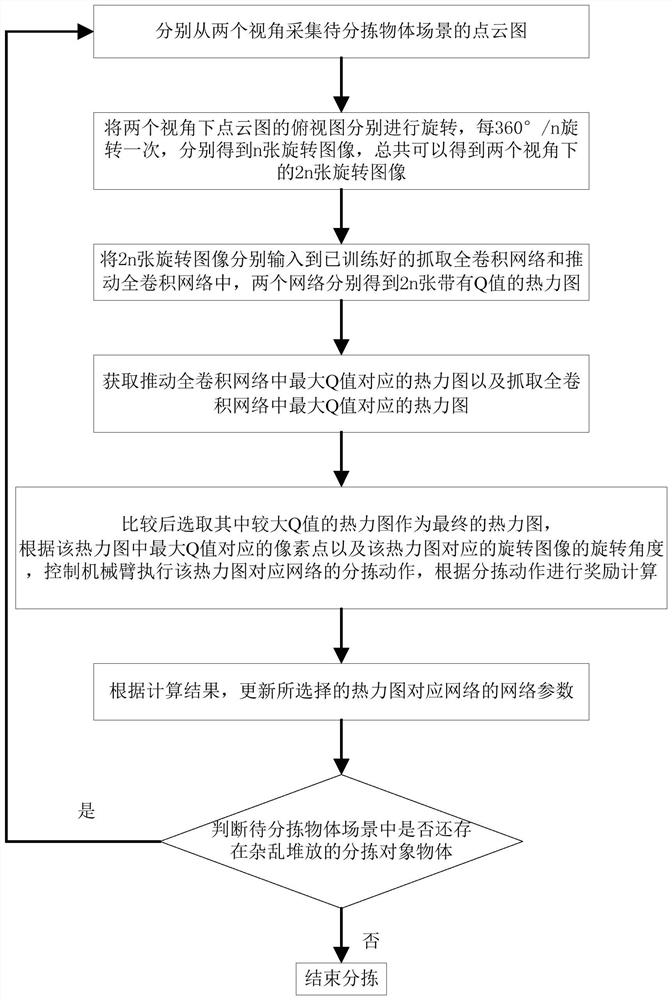

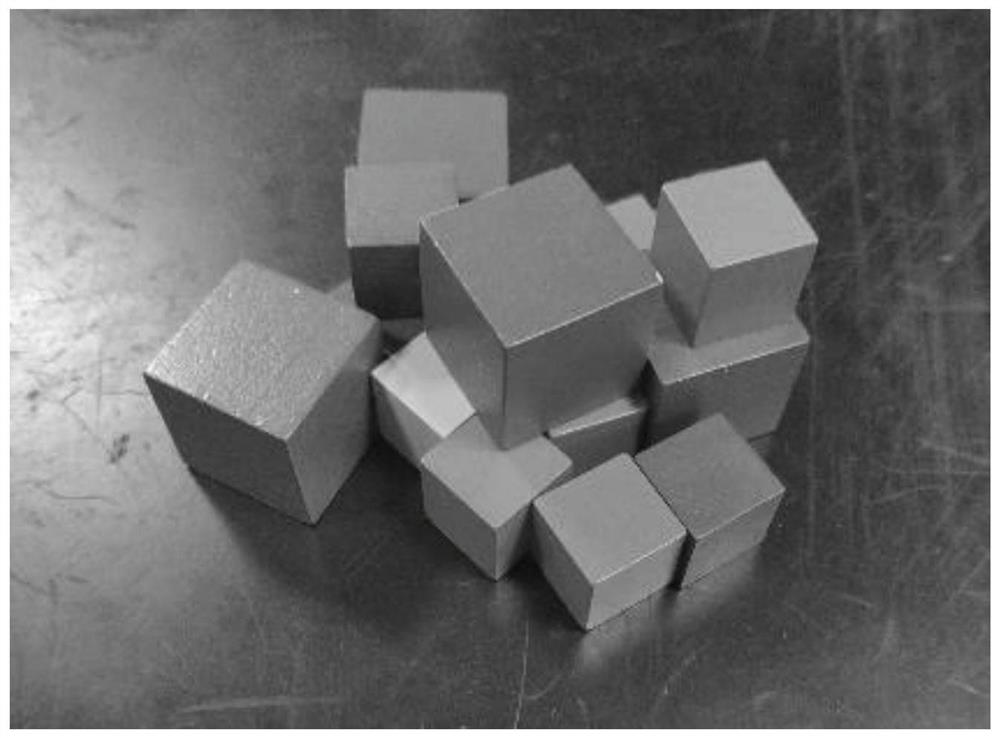

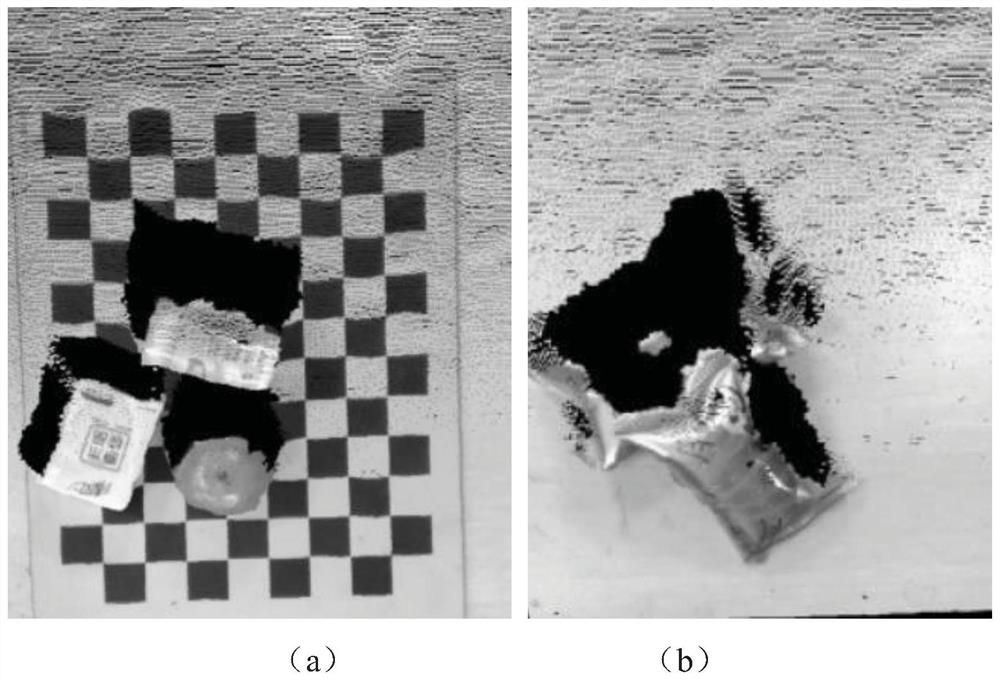

[0074] Step 1. For a cluttered stacking scene such as the one shown in Figure 2, two binocular cameras capture point cloud images of the scene containing the objects to be sorted, from two viewing angles. A point cloud image captured from a single viewing angle misses some object information, for example neighboring objects seen from a single viewing angle (Figure 3(a)) and stacked objects seen from a single viewing angle (Figure 3(b)). The present invention therefore uses two viewing angles, which yields more complete object information.
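The text does not state how the two cameras' point clouds are related to each other or to the table. A common approach, assumed here rather than taken from the patent, is to express each camera's points in a shared workspace frame (z pointing up from the table) using calibrated extrinsics, so that a later top-view projection is meaningful for both views. The helper names `make_pose` and `to_workspace` are hypothetical, and the yaw-only pose is a simplification for illustration; real extrinsics would also include pitch and roll.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def make_pose(yaw_deg: float, translation: Tuple[float, float, float]) -> List[List[float]]:
    """Hypothetical helper: 4x4 camera-to-workspace transform (yaw about z plus translation)."""
    t = math.radians(yaw_deg)
    c, s = math.cos(t), math.sin(t)
    tx, ty, tz = translation
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def to_workspace(points: Sequence[Point], cam_to_world: Sequence[Sequence[float]]) -> List[Point]:
    """Map camera-frame points into the shared workspace frame with a homogeneous transform."""
    out: List[Point] = []
    for x, y, z in points:
        ph = (x, y, z, 1.0)  # homogeneous coordinates
        wx, wy, wz = (sum(cam_to_world[i][j] * ph[j] for j in range(4)) for i in range(3))
        out.append((wx, wy, wz))
    return out


# Usage: each camera keeps its own cloud, but both are now in workspace coordinates.
view_a = to_workspace([(0.1, 0.0, 0.5)], make_pose(0.0, (0.0, 0.0, 0.0)))
view_b = to_workspace([(0.1, 0.0, 0.5)], make_pose(90.0, (0.2, 0.0, 0.0)))
```

Keeping the two views separate after the transform matches the next step, which produces one top view per viewing angle rather than a single fused cloud.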

[0075] Step 2. Project the point cloud images obtained in Step 1 into top views, giving one top view for each of the two viewing angles, as shown in Figures 4(a) and 4(b).
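The patent does not specify how the top-view projection is computed. A minimal sketch, assuming an orthographic projection onto a grid over the workspace in which each cell keeps the highest point that falls into it (a "heightmap"), is shown below. The workspace bounds, the cell `resolution`, and the function name `top_view_heightmap` are illustrative assumptions, not values from the source.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def top_view_heightmap(
    points: Sequence[Point],
    x_range: Tuple[float, float],
    y_range: Tuple[float, float],
    resolution: float,
) -> List[List[float]]:
    """Project workspace-frame points straight down onto a 2D grid (assumed heightmap form).

    Each cell stores the maximum z of the points inside it; empty cells stay at 0.0,
    which here stands for the table surface.
    """
    x_min, x_max = x_range
    y_min, y_max = y_range
    width = int(round((x_max - x_min) / resolution))
    height = int(round((y_max - y_min) / resolution))
    grid = [[0.0] * width for _ in range(height)]
    for x, y, z in points:
        # Ignore points outside the sorting workspace.
        if not (x_min <= x < x_max and y_min <= y < y_max):
            continue
        col = int((x - x_min) / resolution)
        row = int((y - y_min) / resolution)
        if row < height and col < width:  # guard against floating-point edge cases
            grid[row][col] = max(grid[row][col], z)
    return grid


# Usage: two points share cell (0, 0), one lands in cell (0, 1), one is outside the workspace.
hm = top_view_heightmap(
    [(0.005, 0.005, 0.1), (0.006, 0.004, 0.2), (0.015, 0.005, 0.05), (0.03, 0.0, 1.0)],
    x_range=(0.0, 0.02),
    y_range=(0.0, 0.01),
    resolution=0.01,
)
```

Keeping the maximum height per cell preserves the top surface of stacked objects, which is what a camera looking down would see; this choice is an assumption of the sketch.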

[0076] Step 3. Rotate each of the two top views obtained in Step 2 in increments of 22.5°, which yields 16 rotated images per view (360° / 22.5° = 16) and a total of 32 rotated images for the two viewing angles...
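The rotation step can be sketched as follows, assuming each top view is a 2D grid rotated about its centre with nearest-neighbour sampling and that pixels rotated in from outside the image are filled with 0.0. The interpolation method, fill value, and the names `rotate_nearest` and `rotated_views` are assumptions for illustration; only the 22.5° step, 16 rotations per view, and 32 images in total come from the text.

```python
import math
from typing import List

Grid = List[List[float]]


def rotate_nearest(grid: Grid, angle_deg: float) -> Grid:
    """Rotate a 2D grid about its centre by angle_deg using nearest-neighbour inverse mapping.

    The visual direction (clockwise or counter-clockwise) depends on the image axis convention.
    """
    h, w = len(grid), len(grid[0])
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    t = math.radians(angle_deg)
    cos_t, sin_t = math.cos(t), math.sin(t)
    out = [[0.0] * w for _ in range(h)]  # assumed fill value for uncovered pixels
    for r in range(h):
        for c in range(w):
            # Inverse rotation: find the source pixel that lands on output pixel (r, c).
            dx, dy = c - cx, r - cy
            sx = cos_t * dx + sin_t * dy + cx
            sy = -sin_t * dx + cos_t * dy + cy
            sr, sc = int(round(sy)), int(round(sx))
            if 0 <= sr < h and 0 <= sc < w:
                out[r][c] = grid[sr][sc]
    return out


def rotated_views(top_view: Grid, num_rotations: int = 16) -> List[Grid]:
    """Return num_rotations copies of top_view rotated in equal steps (16 -> 22.5 degrees)."""
    step = 360.0 / num_rotations
    return [rotate_nearest(top_view, i * step) for i in range(num_rotations)]


# Usage: 16 rotations for each of the two viewing angles gives 32 images in total.
view_a = [[0.0, 0.1], [0.2, 0.3]]
view_b = [[0.3, 0.2], [0.1, 0.0]]
all_rotations = rotated_views(view_a) + rotated_views(view_b)
```

Rotating the input rather than the network lets a single set of network weights score actions at 16 discrete orientations, which is a common design in push-grasp pipelines; whether the patent uses the rotations this way is not stated in this excerpt.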