Mobile robot localization method and system based on 3D point cloud and vision fusion

The invention applies three-dimensional point cloud technology to mobile robot localization, in fields such as radio wave measurement systems, instruments, and electromagnetic wave re-radiation. It addresses the problem of difficult positioning and avoids localization failure in outdoor scenes with sparse features.

Embodiment Construction

[0055] The present invention will be described in detail below with reference to specific embodiments. The following examples will help those skilled in the art to further understand the present invention, but do not limit it in any form. It should be noted that those skilled in the art can make several changes and improvements without departing from the inventive concept. All such changes and improvements fall within the protection scope of the present invention.

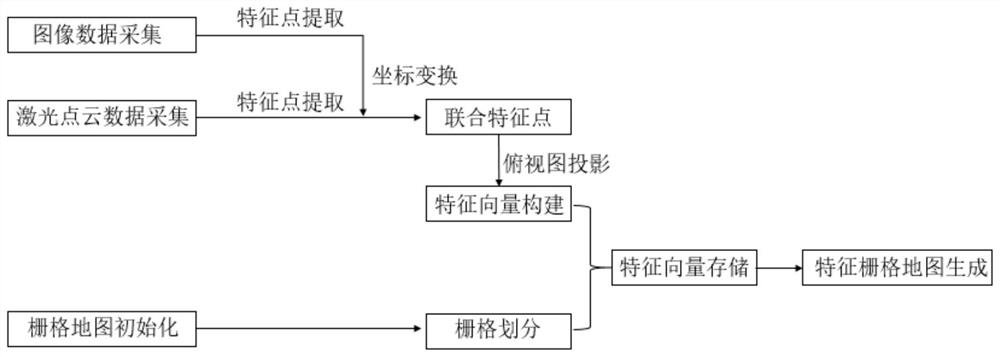

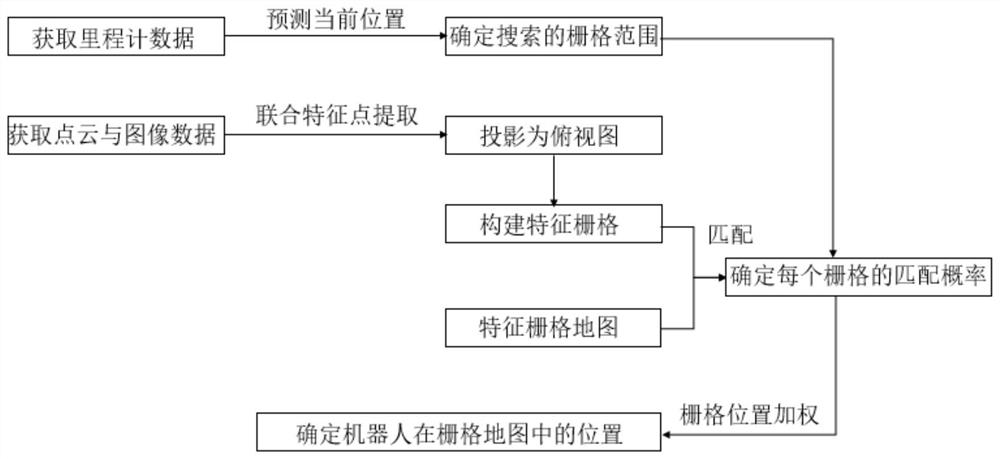

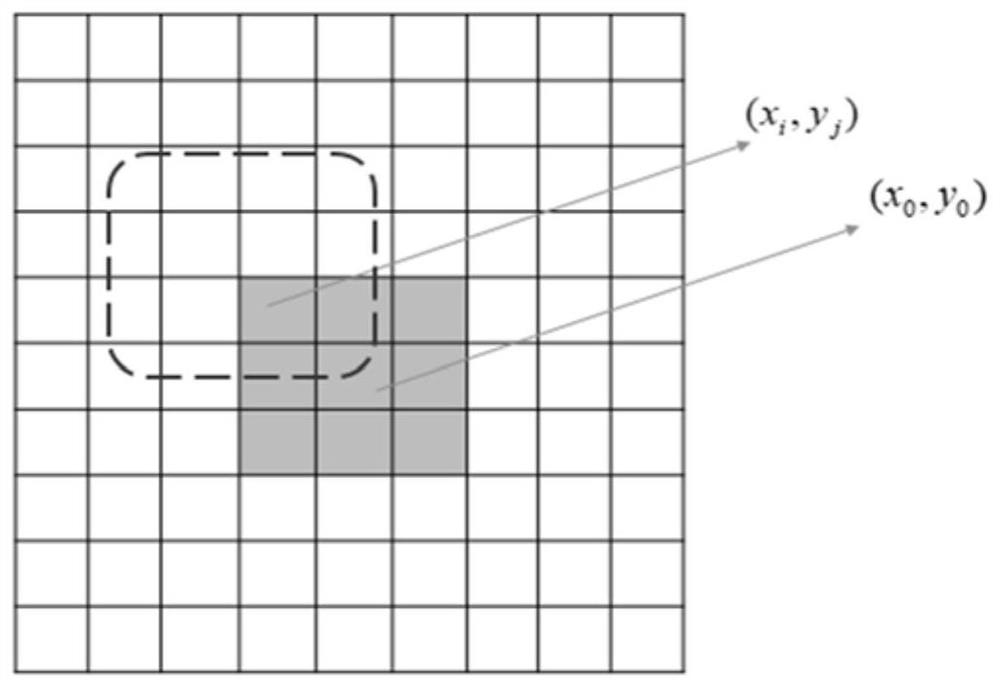

[0056] In view of the defects in the prior art, the present invention provides a method that combines laser point cloud features and image features into a joint feature and matches that joint feature against a feature grid map for positioning. The method involves three parts: establishing an environment map, fusing point cloud and visual features, and matching against the map. The environment map is a feature grid map of the environment, and each grid in the feature grid map stores a point set composed of feature points extr...
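As an illustration of the feature grid map idea only, the following minimal Python sketch stores, for each grid cell, the set of feature points that fall inside it. The class name `FeatureGridMap`, the 2D indexing by x and y, the `cell_size` parameter, and the sample points are all assumptions made for this example; the source text is truncated and does not specify how the grid is indexed or how the feature points are extracted.

```python
from collections import defaultdict
from math import floor


class FeatureGridMap:
    """Hypothetical sketch: each grid cell stores the feature points inside it."""

    def __init__(self, cell_size=1.0):
        # Assumed cell edge length (e.g. in metres); not specified by the source.
        self.cell_size = cell_size
        # Maps a cell index (ix, iy) to a list of (x, y, z) feature points.
        self.cells = defaultdict(list)

    def cell_index(self, x, y):
        # floor() keeps negative coordinates in the correct cell.
        return (floor(x / self.cell_size), floor(y / self.cell_size))

    def add_point(self, point):
        # Index the point by its horizontal position; z is stored but not indexed.
        x, y, _z = point
        self.cells[self.cell_index(x, y)].append(point)

    def points_in_cell(self, x, y):
        # Return a copy of the point set for the cell containing (x, y).
        return list(self.cells.get(self.cell_index(x, y), []))


# Made-up sample points, for illustration only.
grid_map = FeatureGridMap(cell_size=2.0)
for p in [(0.5, 0.5, 1.0), (1.9, 0.1, 0.3), (2.1, 0.1, 0.2), (-0.1, 0.0, 0.0)]:
    grid_map.add_point(p)
```

A matching step could then look up the cell for each observed joint feature with `points_in_cell` and compare it against the stored point set; how that comparison is done is not recoverable from the truncated text.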
