A visual robotic arm grasping method and device applied to parametric parts

A robotic arm technology for parametric parts, applied in the field of visual grasping. It addresses two problems: object models are difficult to apply across different part families, and the workload is large.


Embodiment Construction

[0049] Embodiments of the present invention will be described in detail below. It should be emphasized that the following description is only exemplary and not intended to limit the scope of the invention and its application.

[0050] On an automatic assembly line, various industrial parts must be grasped from the material box under visual guidance and placed on the part pose adjuster in a roughly correct posture. Real-world industrial parts are basically parametric: they share a unified part template, but their specific dimension values differ. The embodiment of the present invention realizes visual robotic arm grasping of parametric parts based on deep learning.
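The idea of a parametric part (one shared template, different dimension values) can be sketched as a small data structure. This is only an illustration under stated assumptions: the class names `PartTemplate` and `PartInstance`, the hex bolt family, and its parameter names are hypothetical and do not come from the patent.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class PartTemplate:
    """Hypothetical part family: one shared shape with named size parameters."""
    name: str
    parameter_names: tuple[str, ...]

    def instantiate(self, **values: float) -> PartInstance:
        # Each instance must supply exactly the parameters the template names.
        if set(values) != set(self.parameter_names):
            raise ValueError(f"expected parameters {self.parameter_names}")
        return PartInstance(self, dict(values))


@dataclass(frozen=True)
class PartInstance:
    """One concrete part: a template plus specific dimension values."""
    template: PartTemplate
    values: dict[str, float]


# Hypothetical family and dimensions, chosen only for illustration.
bolt = PartTemplate("hex_bolt", ("shank_length_mm", "thread_diameter_mm"))
small_bolt = bolt.instantiate(shank_length_mm=30.0, thread_diameter_mm=6.0)
large_bolt = bolt.instantiate(shank_length_mm=50.0, thread_diameter_mm=8.0)
```

Both instances share the same template object and differ only in their dimension values, which is the property that lets one grasping method serve a whole part family.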

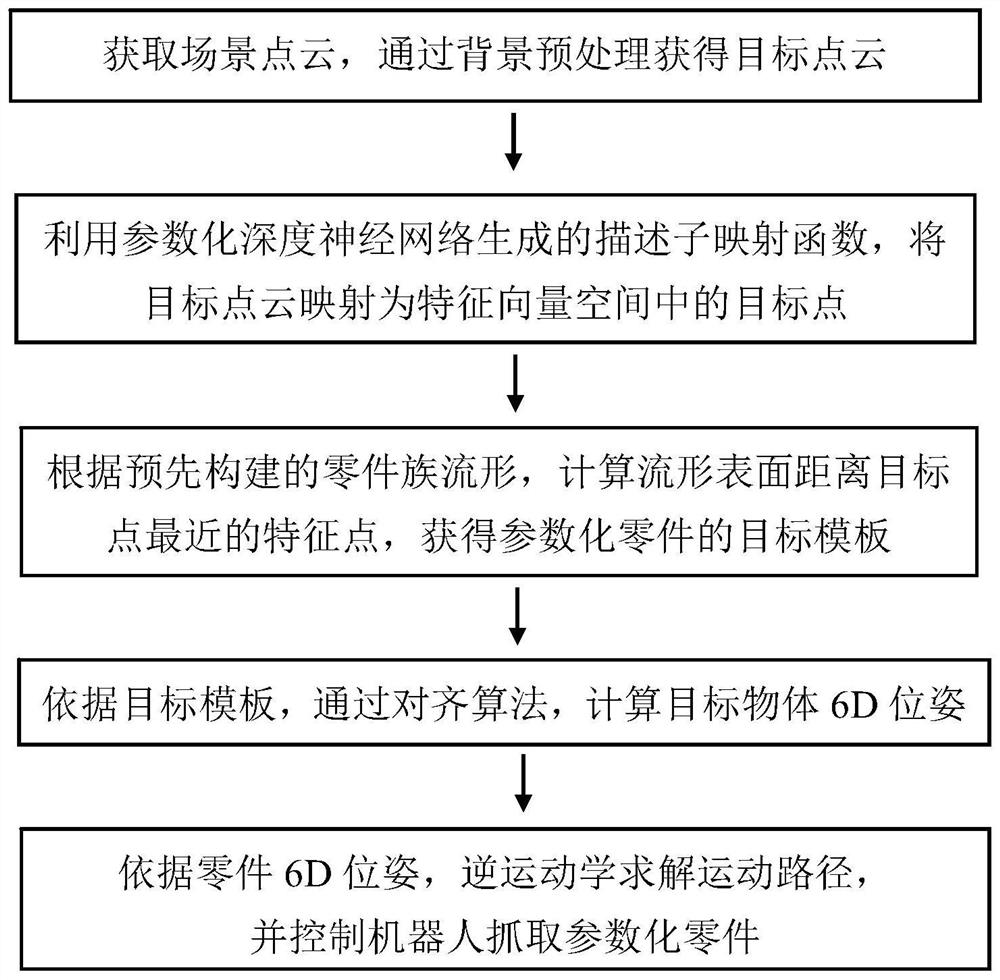

[0051] Figure 1 is a flow chart of a visual robotic arm grasping method applied to parametric parts according to an embodiment of the present invention. The visual robotic arm grasping method provided by the embodiment of the present invention can realize the grasping task of parametric parts...

