Visual context fused image description method

An image description technology that fuses visual context, applied in instrumentation, biological neural network models, and character and pattern recognition, which can solve problems that affect test performance.


Embodiment

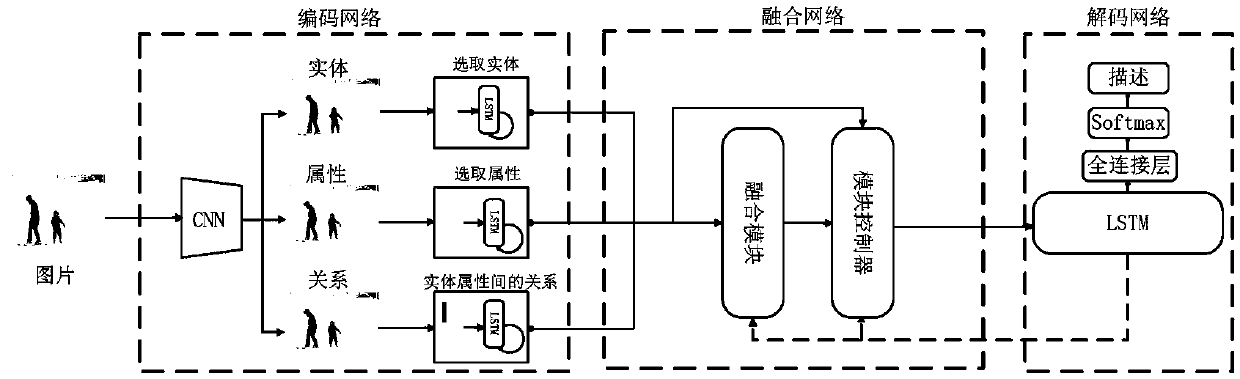

[0058] Referring to Figure 1, an image description method fusing visual context comprises the following steps:

[0059] 1) Divide the images in the MS-COCO image description dataset into a training set and a test set at a ratio of 7:3. Apply horizontal flipping and a luminance transformation to the training images, and finally normalize each image so that the values of all its pixels have a mean of 0 and a variance of 1. The test images are resized to a fixed 512×512 pixels and receive no further processing;
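Step 1 can be sketched in plain Python as below, assuming grayscale images stored as nested lists of pixel values. The shuffle seed, the 50% flip probability, the luminance factor range of 0.8 to 1.2, and nearest-neighbour resizing for the 512×512 test images are illustrative assumptions, not details stated in the patent, and every helper name is hypothetical.

```python
import random
import statistics
from typing import List, Tuple

Image = List[List[float]]  # H x W grayscale image (assumption; real data is RGB)


def split_dataset(ids, train_ratio: float = 0.7, seed: int = 0) -> Tuple[list, list]:
    """Shuffle image ids and split them 7:3 into train/test (seed is an assumption)."""
    ids = list(ids)
    random.Random(seed).shuffle(ids)
    cut = round(len(ids) * train_ratio)
    return ids[:cut], ids[cut:]


def horizontal_flip(img: Image) -> Image:
    """Mirror each row left-to-right."""
    return [row[::-1] for row in img]


def scale_luminance(img: Image, factor: float) -> Image:
    """Simple brightness transform: multiply every pixel by `factor` (assumed form)."""
    return [[p * factor for p in row] for row in img]


def standardize(img: Image) -> Image:
    """Shift and scale pixels so the image has mean 0 and (population) variance 1."""
    pixels = [p for row in img for p in row]
    mean = statistics.fmean(pixels)
    std = statistics.pstdev(pixels) or 1.0  # guard against constant images
    return [[(p - mean) / std for p in row] for row in img]


def preprocess_train(img: Image, rng: random.Random) -> Image:
    """Training path: random flip, random luminance change, then per-image normalization."""
    if rng.random() < 0.5:  # hypothetical flip probability
        img = horizontal_flip(img)
    img = scale_luminance(img, rng.uniform(0.8, 1.2))  # hypothetical factor range
    return standardize(img)


def resize_nearest(img: Image, size: int = 512) -> Image:
    """Test path: nearest-neighbour resize to size x size, no other processing."""
    h, w = len(img), len(img[0])
    return [[img[i * h // size][j * w // size] for j in range(size)] for i in range(size)]
```

Only the training images pass through `preprocess_train`; test images go through `resize_nearest` alone, mirroring the split between the two paths described in step 1.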

[0060] 2) Preprocess the image description tags: the 5 sentences corresponding to each image in the MS-COCO image description dataset are used as its image description tags, and the description of each image is set to a length of 16 words, with sentences filled out with tokens to that length. Words that appear fewer than 5 times are filtered out and discarded, yielding a vocabulary of 10369 words, where the description tag corresponding to the image is a fixed val...
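The caption preprocessing of step 2 can be sketched as follows, assuming a simple lowercase regex tokenizer and hypothetical `<pad>` and `<unk>` special tokens. The patent only says that rare words are discarded from the vocabulary; mapping them to `<unk>` when encoding, and truncating captions longer than 16 words, are assumptions made here for illustration.

```python
import re
from collections import Counter
from typing import Dict, Iterable, List

MAX_LEN = 16    # description length stated in step 2
MIN_COUNT = 5   # words seen fewer than 5 times are discarded
PAD, UNK = "<pad>", "<unk>"  # hypothetical special tokens


def tokenize(sentence: str) -> List[str]:
    """Lowercase and split into word tokens (assumed tokenizer)."""
    return re.findall(r"[a-z0-9']+", sentence.lower())


def build_vocab(captions: Iterable[str], min_count: int = MIN_COUNT) -> Dict[str, int]:
    """Count words over all captions and keep those occurring at least min_count times."""
    counts = Counter(w for c in captions for w in tokenize(c))
    kept = sorted(w for w, n in counts.items() if n >= min_count)
    itos = [PAD, UNK] + kept
    return {w: i for i, w in enumerate(itos)}


def encode(caption: str, vocab: Dict[str, int], max_len: int = MAX_LEN) -> List[int]:
    """Map words to ids, truncate to max_len, and pad with PAD up to max_len."""
    ids = [vocab.get(w, vocab[UNK]) for w in tokenize(caption)][:max_len]
    return ids + [vocab[PAD]] * (max_len - len(ids))
```

Run over all MS-COCO captions, the patent reports a vocabulary of 10369 words; whether that figure includes special tokens is not stated.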
