Cross-domain variational adversarial self-coding method

An autoencoder technology applied in the field of cross-domain variational adversarial autoencoding, to achieve good results

Embodiment Construction

[0038] The present invention will be further described below in conjunction with specific examples.

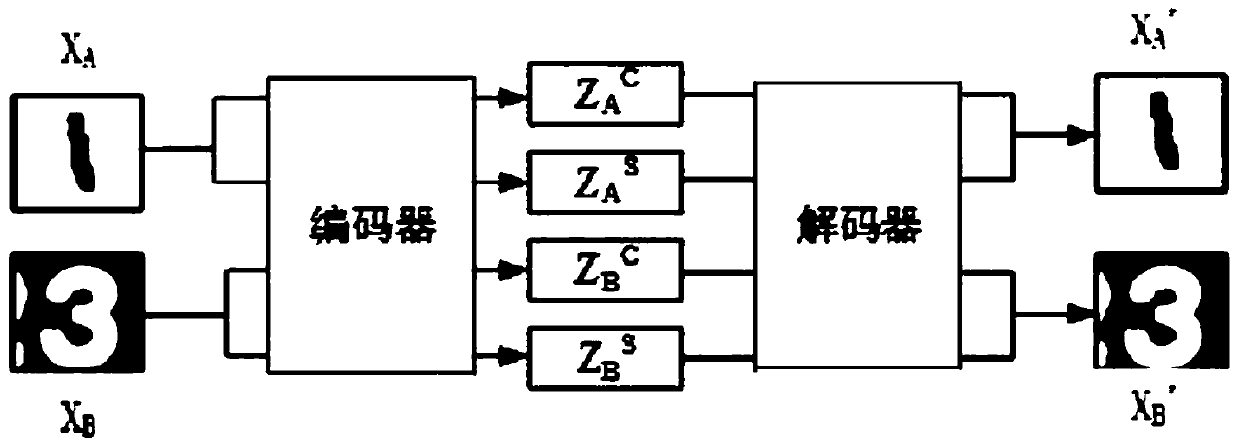

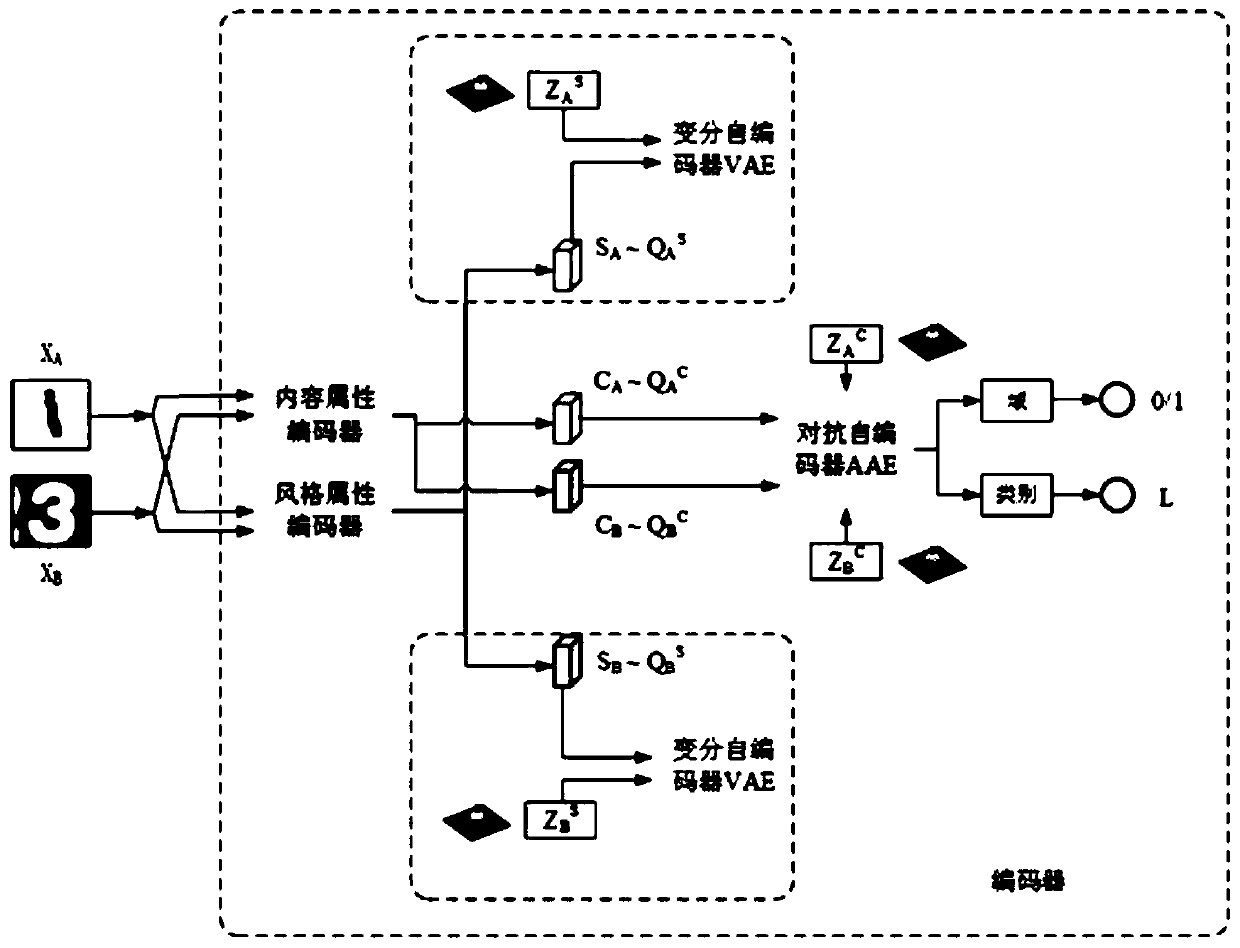

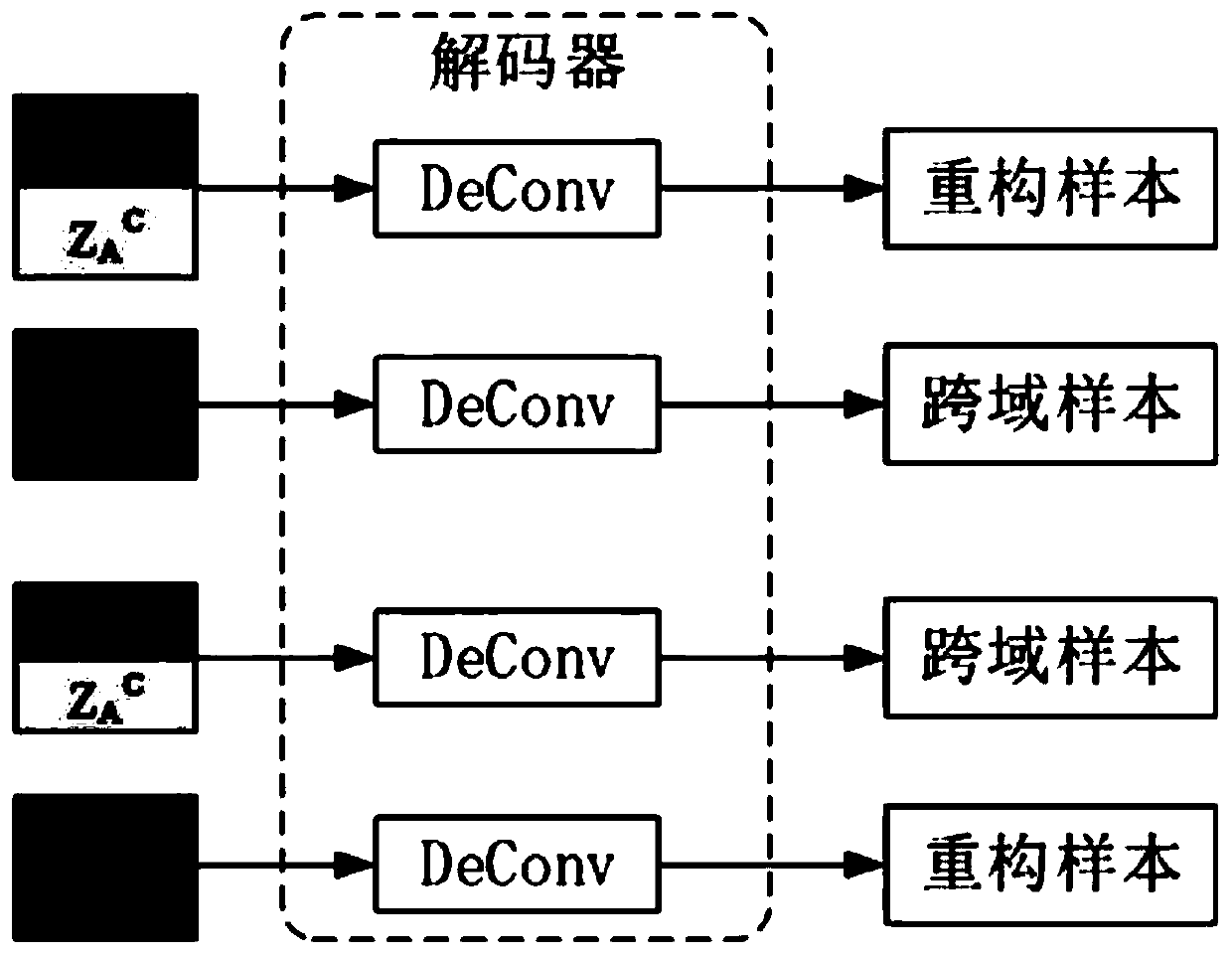

[0039] The cross-domain variational adversarial autoencoding method provided in this embodiment achieves one-to-many, continuous translation of cross-domain images without requiring any paired data. Figure 1 shows the overall network framework. The encoder decomposes each sample into a content code and a style code. The content code is trained adversarially, and the style code is trained variationally. The decoder concatenates the content code and the style code to generate an image. The method includes the following steps:

[0040] 1) Use the encoder to disentangle the content code and the style code of the cross-domain data.
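The encoder/decoder split described in [0039] and step 1 can be illustrated with a minimal NumPy sketch. It is not the patented networks. The sizes, the random linear maps standing in for deep networks, the shared encoder, and the N(0, I) style prior are all hypothetical assumptions. The sketch shows how decoupling lets one content code be paired with many style codes (one-to-many) or with interpolated style codes (continuous translation).

```python
import numpy as np

# Hypothetical sizes; the patent text does not give any dimensions.
IMG_DIM, CONTENT_DIM, STYLE_DIM = 64, 8, 4
rng = np.random.default_rng(0)

# Stand-in linear maps (assumption); a real encoder/decoder would be a deep network.
W_content = rng.normal(size=(IMG_DIM, CONTENT_DIM))
W_style = rng.normal(size=(IMG_DIM, STYLE_DIM))
W_dec = rng.normal(size=(CONTENT_DIM + STYLE_DIM, IMG_DIM))

def encode(x):
    """Decompose a batch of flattened images into (content code, style code)."""
    return x @ W_content, x @ W_style

def decode(content, style):
    """Concatenate the content and style codes, then map back to image space."""
    return np.tanh(np.concatenate([content, style], axis=-1) @ W_dec)

def translate(x_a, styles):
    """Keep the content of a single image x_a and render it with each given style code."""
    c, _ = encode(x_a[None, :])              # content code, shape (1, CONTENT_DIM)
    c = np.repeat(c, len(styles), axis=0)    # reuse the same content for every style
    return decode(c, styles)

x_a = rng.normal(size=IMG_DIM)               # toy "image" from domain A
# One-to-many: style codes drawn from an assumed N(0, I) style prior.
many = translate(x_a, rng.standard_normal((5, STYLE_DIM)))
# Continuous: linear interpolation between two (hypothetical) style codes.
alphas = np.linspace(0.0, 1.0, 7)[:, None]
path = translate(x_a, (1 - alphas) * np.zeros(STYLE_DIM) + alphas * np.ones(STYLE_DIM))
print(many.shape, path.shape)  # (5, 64) (7, 64)
```

Concatenating the two codes at the decoder input follows [0039]. Sampling and interpolating only the style code, while the content code stays fixed, is one plausible reading of "one-to-many continuous transformation" and is not confirmed by this excerpt.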

[0041] First, the encoder decomposes the image into its content code and style code and obtains the corresponding posterior distributions. For the content code, an adversarial autoencoder (AAE) is introduced; for style encod...
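Paragraph [0041] is truncated, but [0039] states that the content code is handled adversarially (via an AAE) and the style code variationally. The NumPy sketch below shows the two corresponding regularizers under stated assumptions. The style code gets a standard VAE treatment: the reparameterization trick plus the closed-form KL divergence to N(0, I). The content code is pushed toward an assumed N(0, I) prior by a hypothetical linear discriminator. The actual priors, networks, and loss weights are not given in this excerpt.

```python
import numpy as np

rng = np.random.default_rng(2)
CONTENT_DIM, STYLE_DIM, BATCH = 8, 4, 16  # hypothetical sizes

def reparameterize(mu, log_var):
    """VAE reparameterization trick: s = mu + sigma * eps with eps ~ N(0, I)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL(N(mu, sigma^2) || N(0, I)), summed over dims, averaged over batch."""
    kl = -0.5 * np.sum(1 + log_var - mu**2 - np.exp(log_var), axis=-1)
    return kl.mean()

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

def bce(p, target):
    """Binary cross-entropy averaged over the batch; eps guards against log(0)."""
    eps = 1e-12
    return -np.mean(target * np.log(p + eps) + (1 - target) * np.log(1 - p + eps))

def aae_losses(content_codes, prior_samples, w_disc):
    """AAE-style adversarial losses that push encoded content codes toward the prior.

    The (hypothetical) linear discriminator should output 1 for prior samples and
    0 for encoder outputs, while the encoder tries to make its codes score as 1.
    """
    p_prior = sigmoid(prior_samples @ w_disc)
    p_enc = sigmoid(content_codes @ w_disc)
    d_loss = bce(p_prior, 1.0) + bce(p_enc, 0.0)  # discriminator loss
    g_loss = bce(p_enc, 1.0)                      # encoder's adversarial loss
    return d_loss, g_loss

# Toy encoder outputs standing in for real network predictions.
mu = rng.normal(size=(BATCH, STYLE_DIM))
log_var = rng.normal(scale=0.1, size=(BATCH, STYLE_DIM))
style = reparameterize(mu, log_var)
kl = kl_to_standard_normal(mu, log_var)

content = rng.normal(loc=2.0, size=(BATCH, CONTENT_DIM))  # codes not yet matching the prior
prior = rng.standard_normal((BATCH, CONTENT_DIM))          # assumed N(0, I) content prior
d_loss, g_loss = aae_losses(content, prior, w_disc=rng.normal(size=CONTENT_DIM))
print(style.shape, round(kl, 3), round(d_loss, 3), round(g_loss, 3))
```

In training, these terms would be combined with a reconstruction loss on the decoder output. The discriminator and the encoder would be updated alternately, as in a standard GAN.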