A binocular vision indoor positioning and mapping method and device

Binocular vision indoor positioning technology, applied in the field of positioning and navigation. It addresses poor navigation performance, poor relocalization ability, and high requirements on scene texture, and achieves good accuracy and robustness.

Embodiment Construction

[0037] To make the purposes, technical solutions, and advantages of the embodiments of the present invention clearer, the technical solutions of the present invention are described below with reference to the accompanying drawings. The described embodiments are only some, not all, of the embodiments of the present invention.

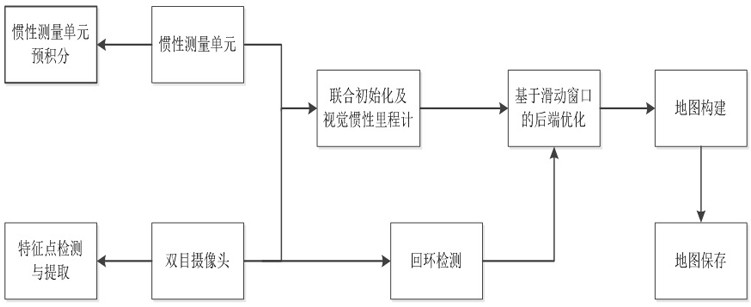

[0038] As shown in Figure 1, the present invention provides a binocular vision indoor positioning and mapping method that performs multi-sensor fusion positioning and mapping and realizes positioning, mapping, and autonomous navigation in complex scenes. The method includes the following steps:

[0039] Step 1. Collect left and right images in real time with the binocular vision sensor, and calculate the initial pose of the camera from the left and right images.
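The patent text does not say how depth is recovered from the image pair in Step 1. A common building block for stereo pose initialization is triangulating matched features from a rectified pair with the relation Z = f·B/d. The sketch below assumes rectified images and a known calibration; the names `StereoIntrinsics` and `triangulate`, and all parameter values, are hypothetical and are not taken from the patent. Estimating the full camera pose would further require matching features across frames and solving for the rigid motion, which is not shown here.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class StereoIntrinsics:
    """Hypothetical calibration of a rectified stereo pair."""
    fx: float        # focal length along x, in pixels
    fy: float        # focal length along y, in pixels
    cx: float        # principal point x, in pixels
    cy: float        # principal point y, in pixels
    baseline: float  # distance between the two camera centres, in metres


def triangulate(cam: StereoIntrinsics, u_left: float, v_left: float,
                u_right: float, min_disparity: float = 1e-6
                ) -> Optional[Tuple[float, float, float]]:
    """Back-project a matched pixel pair into the left-camera frame.

    Assumes rectified images, so the match lies on the same row and only
    the horizontal disparity d = u_left - u_right carries depth.
    Returns None when the disparity is too small to give a finite depth.
    """
    d = u_left - u_right
    if d <= min_disparity:
        return None
    z = cam.fx * cam.baseline / d          # depth from disparity: Z = f * B / d
    x = (u_left - cam.cx) * z / cam.fx     # pinhole back-projection in x
    y = (v_left - cam.cy) * z / cam.fy     # pinhole back-projection in y
    return (x, y, z)


if __name__ == "__main__":
    cam = StereoIntrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0, baseline=0.12)
    print(triangulate(cam, u_left=420.0, v_left=240.0, u_right=400.0))
    # -> (0.6, 0.0, 3.0)
```

With a 20-pixel disparity, a 500-pixel focal length, and a 0.12 m baseline, the point lies 3 m in front of the left camera. Returning `None` for near-zero disparity avoids dividing by zero for distant or mismatched points.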

[0040] First, for the left and right images obtained by the binocular vision sensor, if the image brightness is too hi...
