Method, device and system for automatically labeling target object in image

A technology for automatically labeling target objects in images, applied in the field of image processing. It addresses problems such as reduced generality, accuracy that cannot be guaranteed, and the difficulty of obtaining CAD models of target objects, and achieves improved generality and easier access.


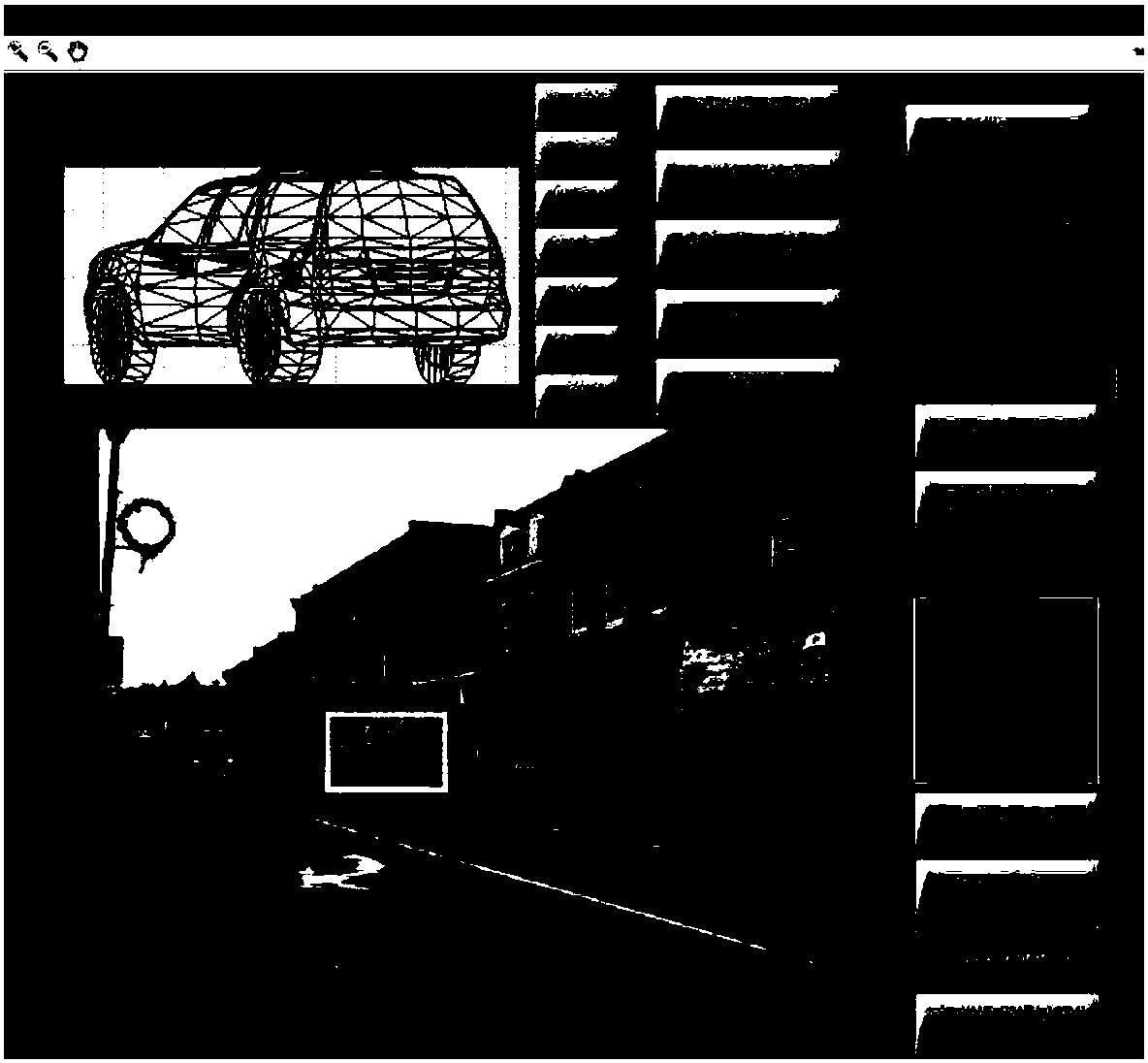


Examples

Embodiment 1

[0068] Referring to Figure 6, this embodiment of the present application provides a method for automatically labeling a target object in an image. The method may specifically include:

[0069] S601: Obtain an image training sample comprising multiple images, where each image is obtained by photographing the same target object and adjacent images share the same environmental feature points;

[0070] The image training sample may be obtained from a target video file, or from other files such as a set of photos taken in advance. For example, the target video file may be recorded in advance. Specifically, to perform machine learning on the features of a certain target object so that the object can later be recognized in scenarios such as AR, images of the target object can be collected in advance. Each picture obtained by this image acquisition is then used as an image training sample, and a specific target image is marked from eac...
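The requirement in S601 that adjacent images share environmental feature points can be illustrated with a minimal sketch. It assumes, purely for illustration, that each image has already been reduced to a set of feature-point identifiers. The function name `adjacent_images_share_features` and the `min_shared` threshold are hypothetical and do not come from the application.

```python
from typing import Hashable, Sequence, Set


def adjacent_images_share_features(
    images: Sequence[Set[Hashable]], min_shared: int = 1
) -> bool:
    """Return True if every pair of adjacent images shares feature points.

    Each element of `images` is a set of feature-point identifiers detected
    in one image (a hypothetical representation). `min_shared` is an assumed
    threshold for how many points two neighbours must have in common.
    """
    # Pair each image with its successor and compare their feature sets.
    for current, following in zip(images, images[1:]):
        if len(current & following) < min_shared:
            return False
    return True


# Three consecutive images; neighbours overlap in at least one feature point.
sample = [{"a", "b", "c"}, {"b", "c", "d"}, {"d", "e"}]
usable = adjacent_images_share_features(sample)
```

A sequence that fails this check would presumably need additional images captured between the non-overlapping neighbours before it could serve as a training sample.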

Embodiment 2

[0086] The second embodiment is an application of the automatic labeling method provided in the first embodiment: once the target object has been automatically labeled in the image training samples, the samples can be used to create a target object recognition model. Specifically, Embodiment 2 of the present application provides a method for establishing a target object recognition model. Referring to Figure 7, the method may specifically include:

[0087] S701: Obtain an image training sample comprising multiple images, where each image is obtained by photographing the same target object and adjacent images share the same environmental feature points; each image also includes annotation information for the position of the target object, the annotation information being obtained by using one of the images as a reference image, creating a three-dimensional space model based on the reference three-dimensional coordinate system, and determining ...

Embodiment 3

[0091] The third embodiment builds on the second embodiment and further provides a method for providing augmented reality (AR) information. Referring to Figure 8, the method may specifically include:

[0092] S801: Collect a real-scene image, and use a pre-established target object recognition model to identify the position information of the target object in the real-scene image, wherein the target object recognition model is established by the method in the second embodiment above;

[0093] S802: Determine a display position of an associated virtual image according to the position information of the target object in the real-scene image, and display the virtual image.

[0094] In a specific implementation, when the position of the target object in the real-scene image changes, the position of the virtual image follows the change in the position of the target object.
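Steps S801 and S802 can be sketched as follows. This is a minimal illustration that assumes the recognition model reports the target object's position as an axis-aligned bounding box in pixel coordinates, and that the virtual image is anchored at the box centre plus a fixed offset. The names `Box` and `virtual_image_position` and the anchoring rule are hypothetical and are not specified in the application.

```python
from typing import NamedTuple, Tuple


class Box(NamedTuple):
    """Hypothetical position report for the target object (pixels)."""
    x: float       # left edge
    y: float       # top edge
    width: float
    height: float


def virtual_image_position(
    target: Box, offset: Tuple[float, float] = (0.0, 0.0)
) -> Tuple[float, float]:
    """Return the display position of the virtual image for one frame.

    Assumed rule: anchor the virtual image at the centre of the target's
    bounding box, shifted by a fixed (dx, dy) offset.
    """
    center_x = target.x + target.width / 2
    center_y = target.y + target.height / 2
    return (center_x + offset[0], center_y + offset[1])


# The target moves 20 pixels to the right between two frames,
# and the virtual image position moves with it.
first = virtual_image_position(Box(10, 20, 100, 50))
second = virtual_image_position(Box(30, 20, 100, 50))
```

Because the display position is recomputed from the recognized position in every frame, the virtual image follows the target object whenever its position in the real-scene image changes.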

[0095] However, in the prior art, it often occurs that the positions of the virtual image and...
