Depth estimation method and apparatus of monocular video, terminal, and storage medium

A monocular video depth estimation technology, applied in the field of image processing, that addresses problems such as low depth map accuracy and the inability to estimate and output an uncertainty distribution map.

Examples

Embodiment 1

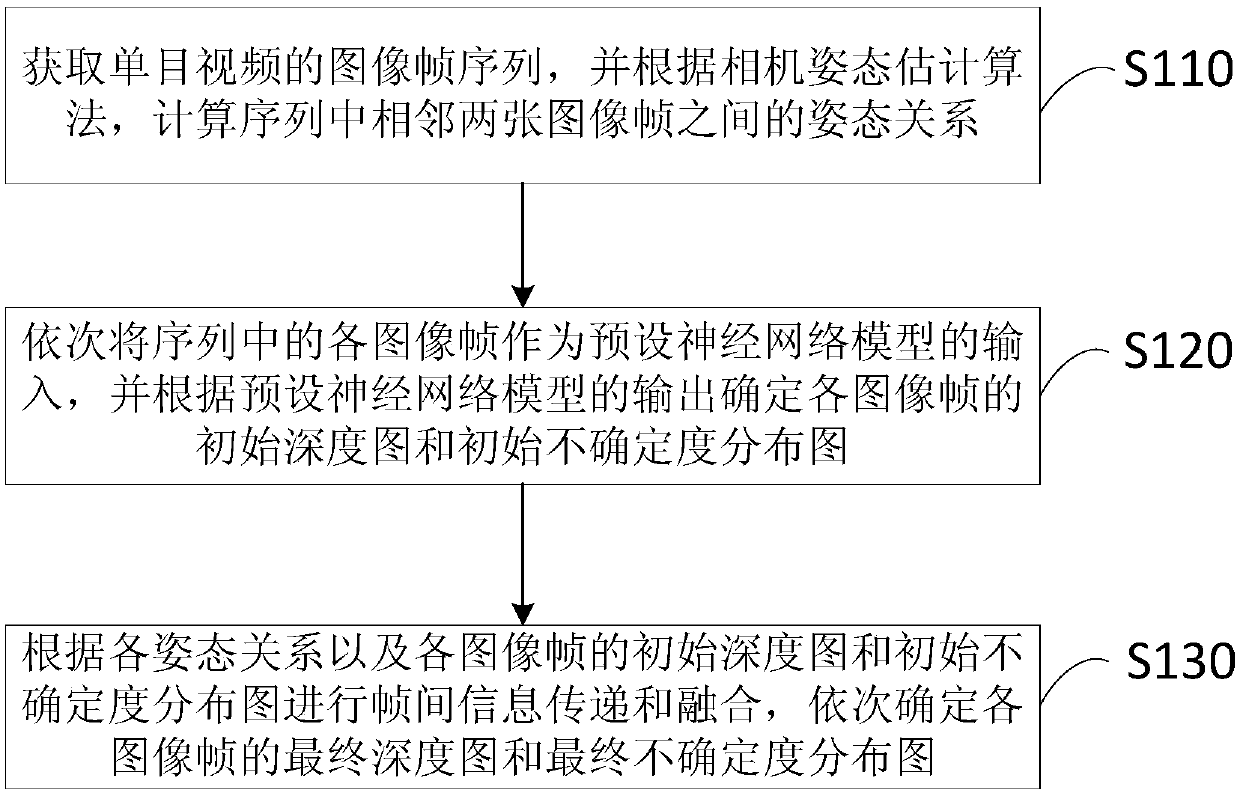

[0029] Figure 1 is a flow chart of a monocular video depth estimation method provided by Embodiment 1 of the present invention. This embodiment is applicable to monocular depth estimation for each image frame in a sequence of video frames, especially in drones, robots, autonomous driving, or augmented reality, where depth estimation is performed on the image frames of a monocular video so that the distance between objects can be determined from the estimated depth map. It can also be used in other application scenarios that require depth estimation of monocular video. The method can be performed by a monocular video depth estimation device, which can be implemented in software and/or hardware and integrated in a terminal that needs to estimate depth, such as a drone or a robot. The method specifically includes the following steps:

[0030] S110. Acquire the image frame sequence of the monocular video, and calculat...
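The pose-relationship part of S110 can be sketched as follows. This is a minimal illustration, assuming each frame already has an absolute 4x4 camera-to-world pose matrix produced by some camera pose estimation algorithm; the source does not say which algorithm or which pose representation is used. The function name `relative_poses` and the matrix convention are hypothetical.

```python
import numpy as np


def relative_poses(poses):
    """Return the relative transform between each pair of adjacent frames.

    poses: sequence of 4x4 camera-to-world matrices (assumed convention,
    not taken from the patent text).
    result[i] maps points from frame i+1's camera coordinates into
    frame i's camera coordinates.
    """
    poses = np.asarray(poses, dtype=float)
    if poses.ndim != 3 or poses.shape[1:] != (4, 4):
        raise ValueError("expected an array of shape (N, 4, 4)")
    # p_i = inv(T_i) @ T_{i+1} @ p_{i+1}
    return [np.linalg.inv(poses[i]) @ poses[i + 1]
            for i in range(len(poses) - 1)]
```

For a sequence of N frames this yields N - 1 relative transforms, one per adjacent pair.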

Embodiment 2

[0090] Figure 6 is a schematic structural diagram of a monocular video depth estimation device provided by Embodiment 2 of the present invention. This embodiment is applicable to monocular depth estimation for each image frame in a sequence of video frames. The device includes a pose relationship determination module 210, an initial depth information determination module 220, and a final depth information determination module 230.

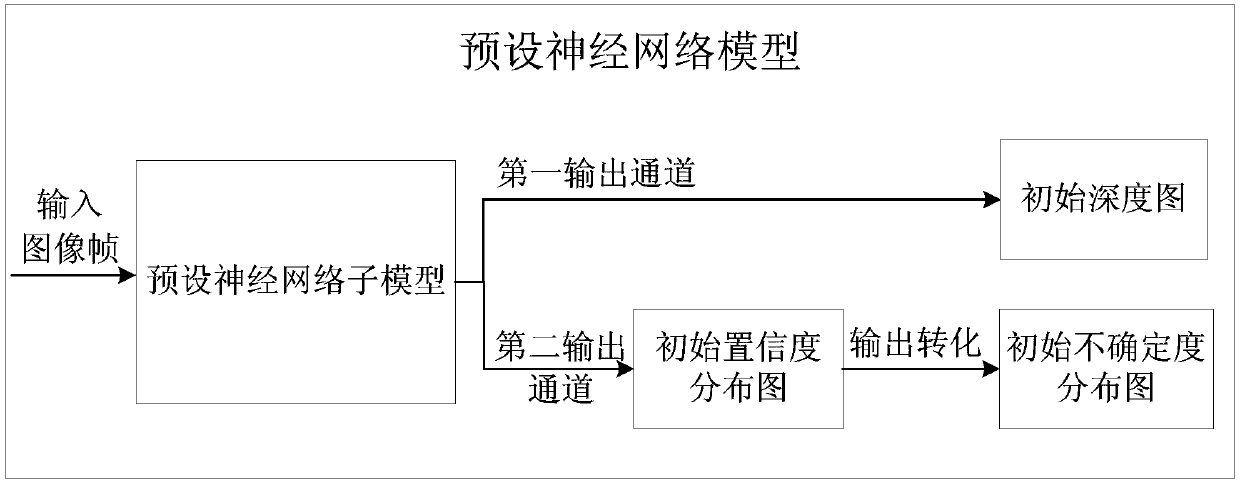

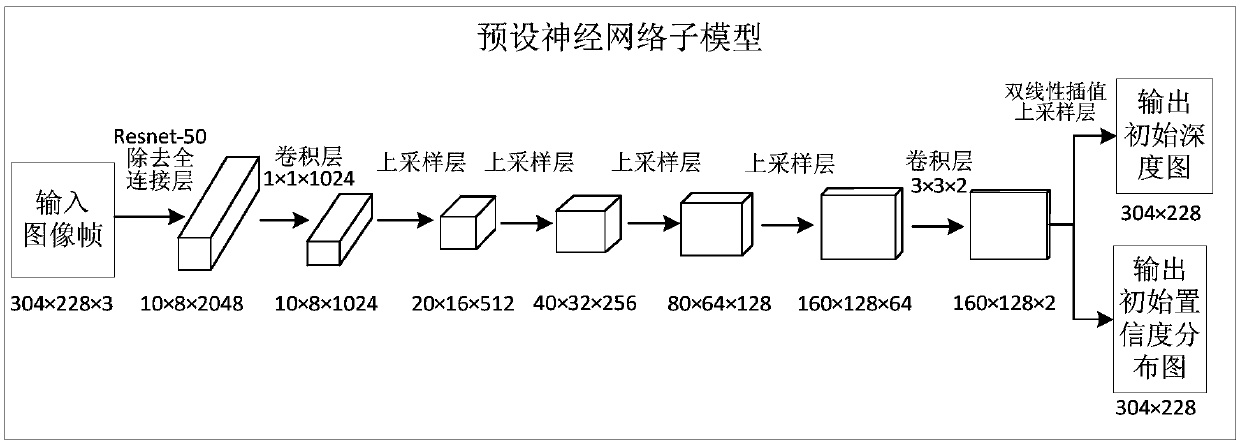

[0091] The pose relationship determination module 210 is used to acquire the image frame sequence of the monocular video and calculate the pose relationship between two adjacent image frames in the sequence according to a camera pose estimation algorithm; the initial depth information determination module 220 is used to take each image frame in the sequence in turn as the input of a preset neural network model, and the initial depth map and the initial uncertainty distribution map of each image frame...
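The three-module structure of the device can be sketched as a small pipeline. This is only an illustrative skeleton: the excerpt is truncated before it describes module 230, so the inputs and behavior of `refine` are placeholders, and all class, field, and type names here are hypothetical. Each module is supplied by the caller as a function.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Sequence, Tuple

Frame = Any                  # an image frame, e.g. an H x W x 3 array
Pose = Any                   # pose relationship between two adjacent frames
DepthInfo = Tuple[Any, Any]  # (depth map, uncertainty distribution map)


@dataclass
class DepthEstimationDevice:
    # Module 210: pose relationship between two adjacent frames.
    estimate_pose: Callable[[Frame, Frame], Pose]
    # Module 220: preset neural network model applied to one frame.
    predict_initial: Callable[[Frame], DepthInfo]
    # Module 230: final depth information (signature is an assumption).
    refine: Callable[[List[DepthInfo], List[Pose]], List[DepthInfo]]

    def run(self, frames: Sequence[Frame]) -> List[DepthInfo]:
        # One pose relationship per adjacent pair of frames.
        poses = [self.estimate_pose(a, b)
                 for a, b in zip(frames, frames[1:])]
        # Each frame is fed to the model in turn.
        initial = [self.predict_initial(f) for f in frames]
        return self.refine(initial, poses)
```

Keeping the modules as injected callables mirrors the statement that the device can be implemented in software and/or hardware, since any of the three can be swapped without touching the others.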

Embodiment 3

[0130] Figure 7 is a schematic structural diagram of a terminal provided in Embodiment 3 of the present invention. Referring to Figure 7, the terminal includes:

[0131] one or more processors 310;

[0132] memory 320, for storing one or more programs;

[0133] When one or more programs are executed by one or more processors 310, the one or more processors 310 implement the method for estimating the depth of a monocular video as proposed in any one of the above embodiments.

[0134] Figure 7 takes one processor 310 as an example. The processor 310 and the memory 320 in the terminal can be connected through a bus or by other means; Figure 7 takes connection via a bus as an example.

[0135] The memory 320, as a computer-readable storage medium, can be used to store software programs, computer-executable programs, and modules, such as the program instructions/modules corresponding to the monocular video depth estimation method in the embodiments of the present invention (for example,...
