Text topic classification model and classification method based on multi-source-domain integrated transfer learning

Applied to text technology, this text topic classification model and classification method based on multi-source-domain integrated transfer learning addresses negative transfer, high resource consumption, and the inability to judge the accuracy of pseudo-labeled source-domain data. It achieves high accuracy and strong resistance to interference, and avoids negative transfer.

Examples

Embodiment 1

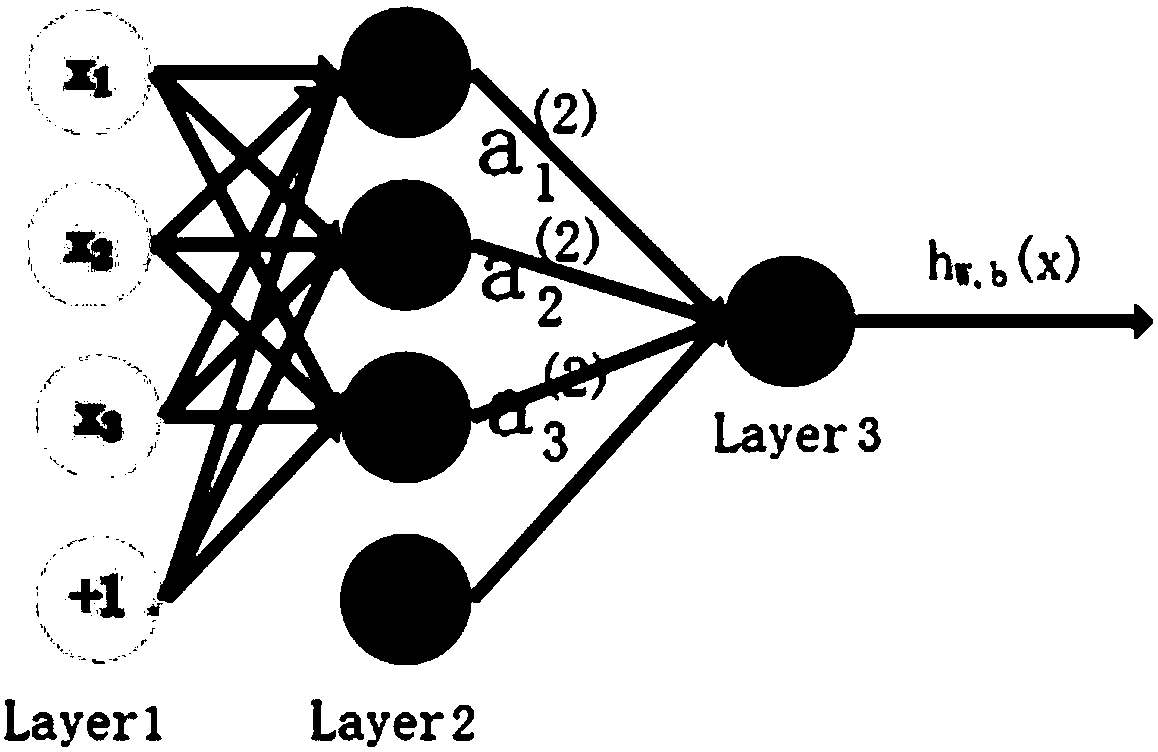

[0053] Step 1. Classify with all three classifiers to obtain data carrying pseudo-labels for the different text topics. When the target domain is C, the data of source domains S, R, and T are classified with three classifiers: an NN classifier, a CNN classifier, and a Softmax classifier.
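The pseudo-labeling in Step 1 can be sketched roughly as follows. This is a minimal illustration, not the patent's implementation: `pseudo_label_sources`, `keyword_rule`, and the sample texts are hypothetical, and the three classifiers are replaced by trivial keyword rules so the block runs without any training.

```python
from typing import Callable, Dict, List, Tuple

# A classifier is modeled as any function from a text to a topic label.
Classifier = Callable[[str], str]


def pseudo_label_sources(
    sources: Dict[str, List[str]],
    classifiers: Dict[str, Classifier],
) -> Dict[Tuple[str, str], List[Tuple[str, str]]]:
    """Label every source-domain text with every classifier.

    Returns a mapping (domain, classifier name) -> list of (text, pseudo-label).
    """
    labeled: Dict[Tuple[str, str], List[Tuple[str, str]]] = {}
    for domain, texts in sources.items():
        for clf_name, clf in classifiers.items():
            labeled[(domain, clf_name)] = [(text, clf(text)) for text in texts]
    return labeled


def keyword_rule(keyword: str) -> Classifier:
    """Hypothetical stand-in for a trained NN / CNN / Softmax classifier."""
    return lambda text: "sports" if keyword in text.lower() else "other"


# Assumption: toy data for source domains S, R, and T (target domain C).
classifiers = {
    "NN": keyword_rule("match"),
    "CNN": keyword_rule("goal"),
    "Softmax": keyword_rule("team"),
}
sources = {
    "S": ["The team won the match"],
    "R": ["Stocks fell today"],
    "T": ["A late goal decided it"],
}
pseudo = pseudo_label_sources(sources, classifiers)
```

Keeping one pseudo-labeled set per (source domain, classifier) pair leaves open how the sets are later combined, which the text shown here does not specify.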

[0054] Step 2. Run the following experiments and record the accuracy of each: 100% of the C target-domain data with the Softmax classifier; 1% of the C target-domain data with the Softmax classifier; 1% of the C target-domain data with the NN classifier; 1% of the C target-domain data with the CNN classifier; 1% of the C data plus the added data of source domain S, with the Softmax classifier; 1% of the C data plus the added data of source domain R, with the Softmax classifier; us...
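The bookkeeping behind these runs (drawing 1% of the target-domain data, optionally adding source-domain data, and recording the "correct rate", i.e. accuracy) might look like the sketch below. The helper names `subsample`, `build_training_set`, and `accuracy`, the fixed random seed, and the toy data are all assumptions made for illustration; the classifier training itself is left out.

```python
import random
from typing import List, Sequence, Tuple

Example = Tuple[str, str]  # (text, topic label)


def subsample(data: Sequence[Example], fraction: float, seed: int = 0) -> List[Example]:
    """Draw a random fraction of the data (at least one example)."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    k = max(1, round(len(data) * fraction))
    return rng.sample(list(data), k)


def build_training_set(
    target: Sequence[Example],
    fraction: float,
    added_source: Sequence[Example] = (),
) -> List[Example]:
    """Combine a fraction of the target data with added source-domain data."""
    return subsample(target, fraction) + list(added_source)


def accuracy(predicted: Sequence[str], gold: Sequence[str]) -> float:
    """Share of predictions that match the gold labels."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must have the same length")
    if not gold:
        raise ValueError("cannot compute accuracy on an empty set")
    return sum(p == g for p, g in zip(predicted, gold)) / len(gold)


# Assumption: toy target-domain C data and pseudo-labeled source-domain S data.
target_c = [(f"c-text-{i}", "topic") for i in range(200)]
source_s = [(f"s-text-{i}", "topic") for i in range(5)]

baseline_set = build_training_set(target_c, 0.01)
augmented_set = build_training_set(target_c, 0.01, source_s)
```

Comparing a classifier trained on `baseline_set` with one trained on `augmented_set` is the kind of comparison that would show whether the added source data helps or causes negative transfer.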

Embodiment 2

[0056] Step 1. Classify with all three classifiers to obtain data carrying pseudo-labels for the different text topics. When the target domain is S, the data of source domains C, R, and T are classified with three classifiers: an NN classifier, a CNN classifier, and a Softmax classifier.

[0057] Step 2. Run the following experiments and record the accuracy of each: 100% of the S target-domain data with the Softmax classifier; 1% of the S target-domain data with the Softmax classifier; 1% of the S target-domain data with the NN classifier; 1% of the S target-domain data with the CNN classifier; 1% of the S data plus the added data of source domain C, with the Softmax classifier; 1% of the S data plus the added data of source domain R, with the Softmax classifier; use 1% ...

Embodiment 3

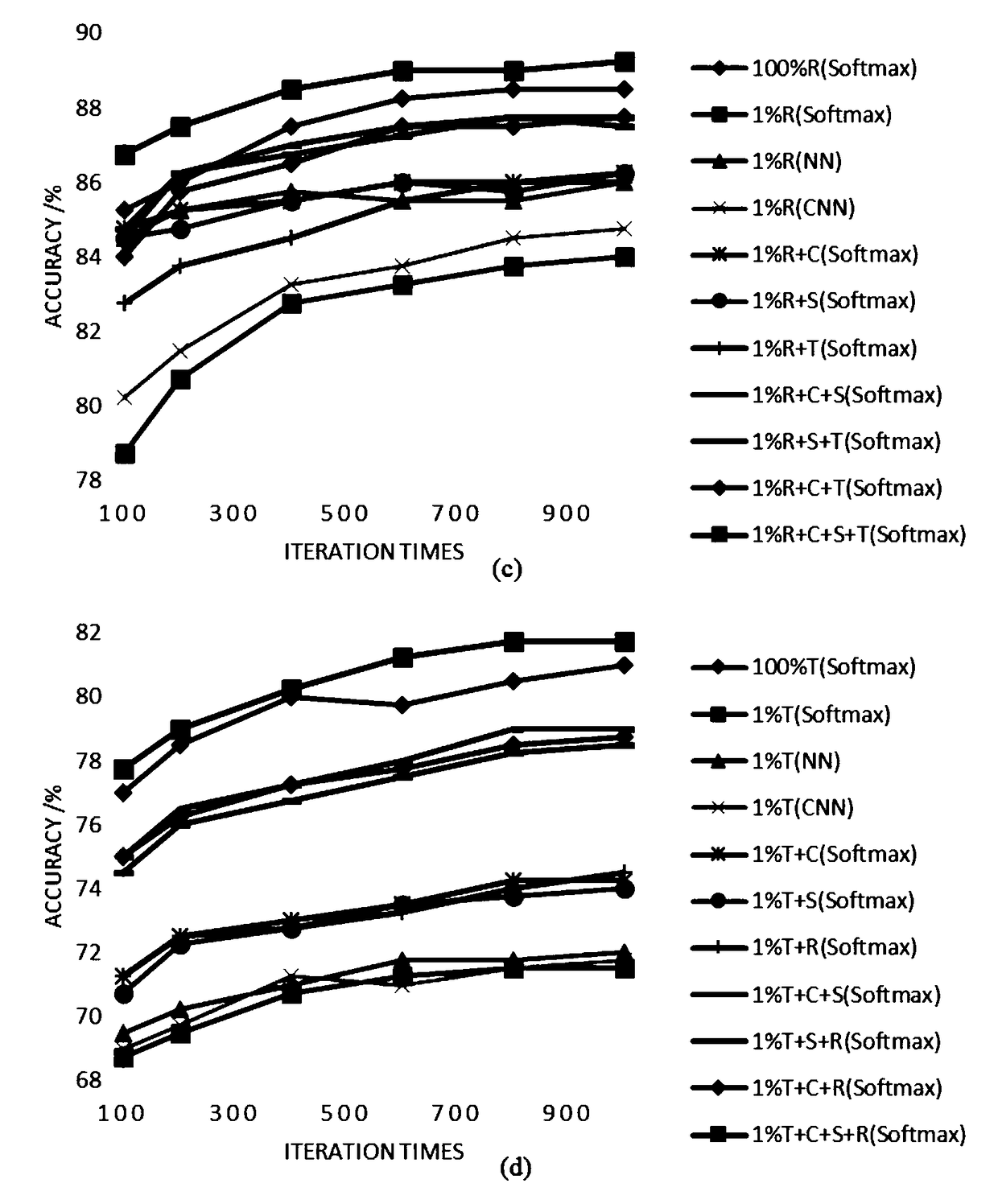

[0059] Step 1. Classify with all three classifiers to obtain data carrying pseudo-labels for the different text topics. When the target domain is R, the data of source domains C, S, and T are classified with three classifiers: an NN classifier, a CNN classifier, and a Softmax classifier.

[0060] Step 2. Run the following experiments and record the accuracy of each: 100% of the R target-domain data with the Softmax classifier; 1% of the R target-domain data with the Softmax classifier; 1% of the R target-domain data with the NN classifier; 1% of the R target-domain data with the CNN classifier; 1% of the R data plus the added data of source domain C, with the Softmax classifier; 1% of the R data plus the added data of source domain S, with the Softmax classifier...
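Across the three embodiments, one domain serves as the target and the remaining three serve as sources. A small sketch of that rotation, assuming the four domain names C, S, R, and T used above (the function name `source_domains_for` is hypothetical):

```python
from typing import List, Sequence

DOMAINS = ["C", "S", "R", "T"]  # domain names as they appear in the embodiments


def source_domains_for(target: str, domains: Sequence[str] = DOMAINS) -> List[str]:
    """Return every domain except the chosen target, in their listed order."""
    if target not in domains:
        raise ValueError(f"unknown domain: {target}")
    return [d for d in domains if d != target]


# Embodiments 1 to 3 use C, S, and R as the target domain in turn.
for target in ["C", "S", "R"]:
    print(target, "<-", source_domains_for(target))
```

Whether T is also used as a target domain is not shown in the text above, so the loop covers only the three embodiments listed.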
