Human behavior recognition method and device based on depth camera and basic posture

A human behavior recognition method and device based on a depth camera and basic postures, applied in the field of human-computer interaction. The technology addresses problems such as slow training speed, susceptibility to interference from the environmental background, and variation in the time taken to complete an action. Its effects include improved recognition accuracy and reliability, invariance to viewing angle, and removal of noise actions.

Detailed Description of the Embodiments

[0033] Embodiments of the present invention are described in detail below, and examples of the embodiments are shown in the drawings, where the same or similar reference numerals denote the same or similar elements, or elements having the same or similar functions, throughout. The embodiments described below with reference to the drawings are exemplary; they are intended to explain the present invention and should not be construed as limiting it.

[0034] The human behavior recognition method and device based on a depth camera and basic postures according to embodiments of the present invention are described below with reference to the accompanying drawings, beginning with the method.

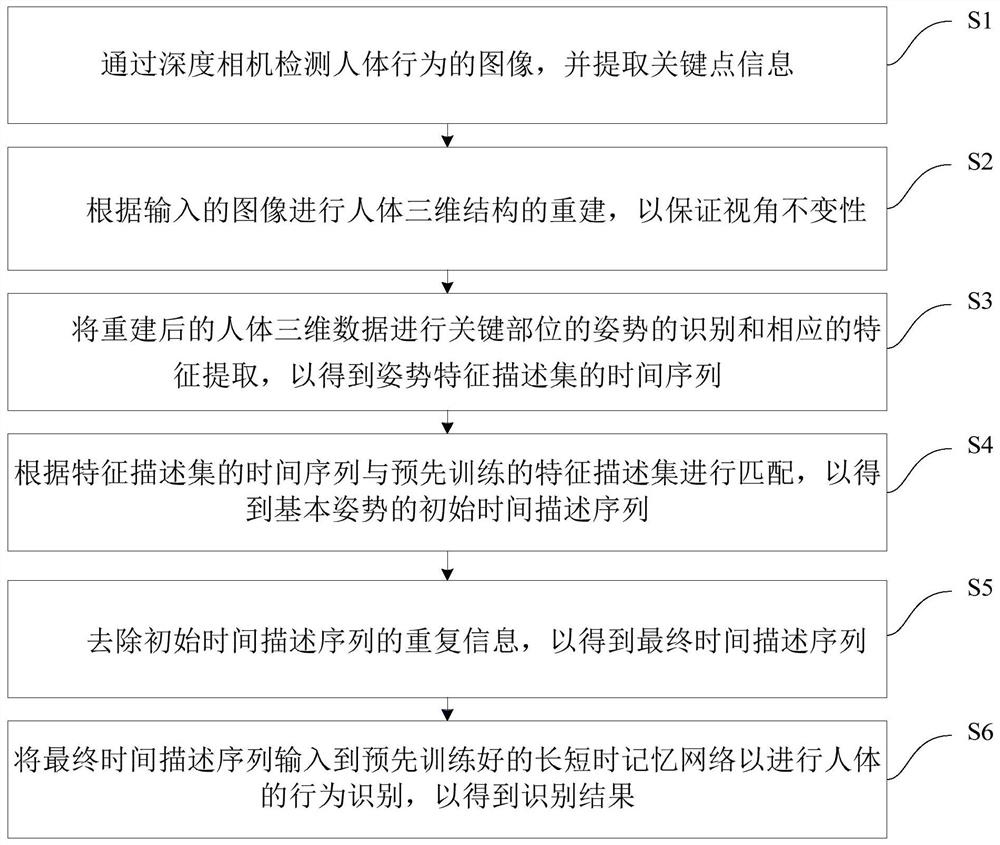

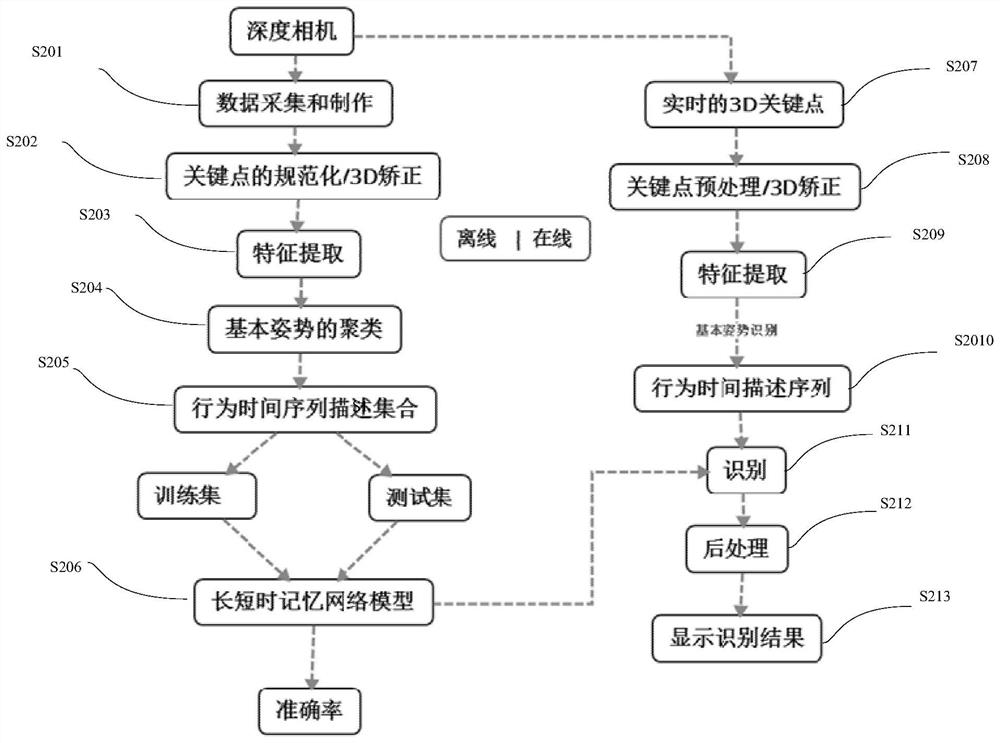

[0035] Figure 1 is a flow chart of a human behavior recognition method based on a depth...