Video feature learning method, device, electronic device and readable storage medium

A video feature learning method, applied in the computer field, that addresses the resource consumption and cost of manual labeling, among other problems, and achieves strong versatility and good adaptability.

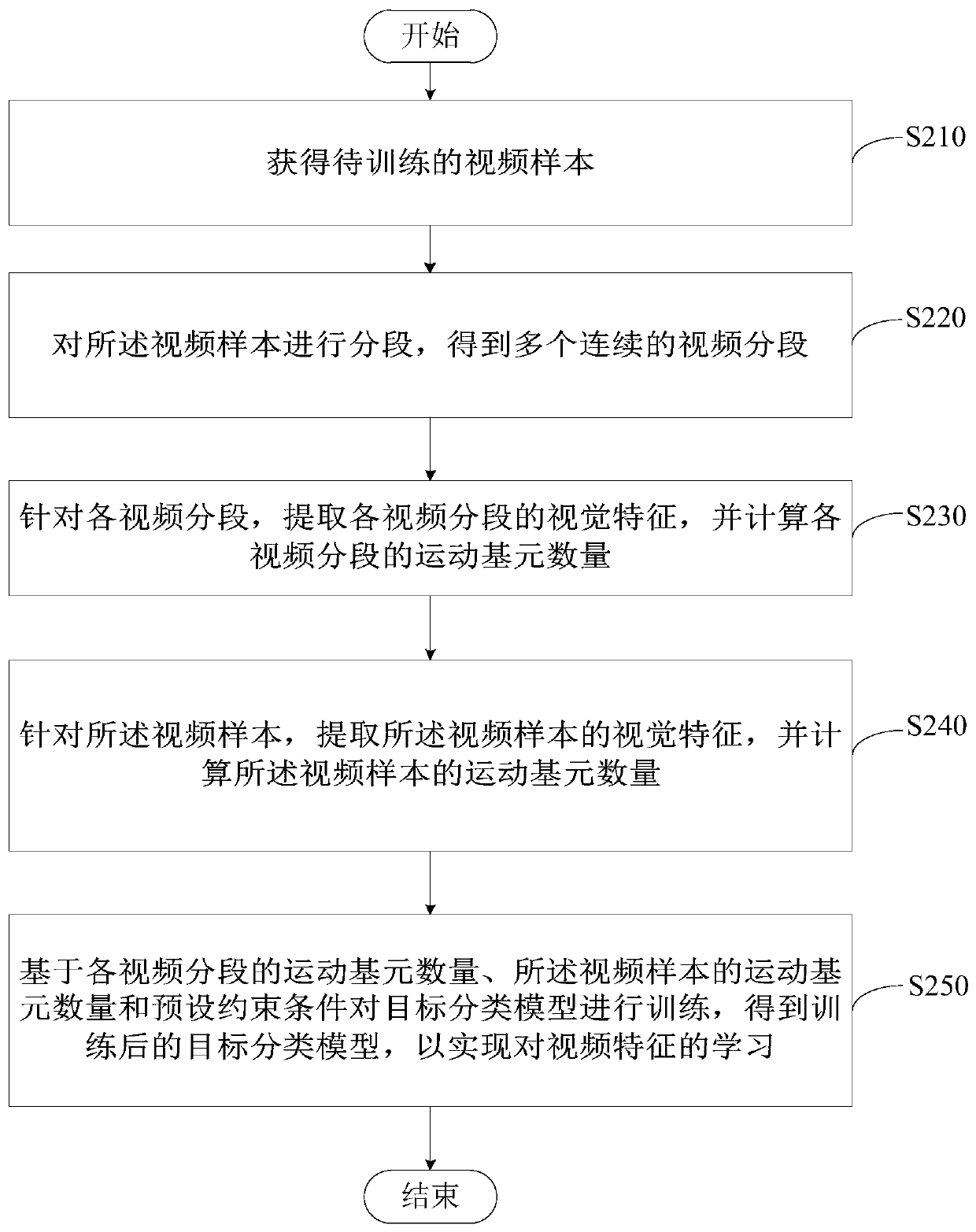

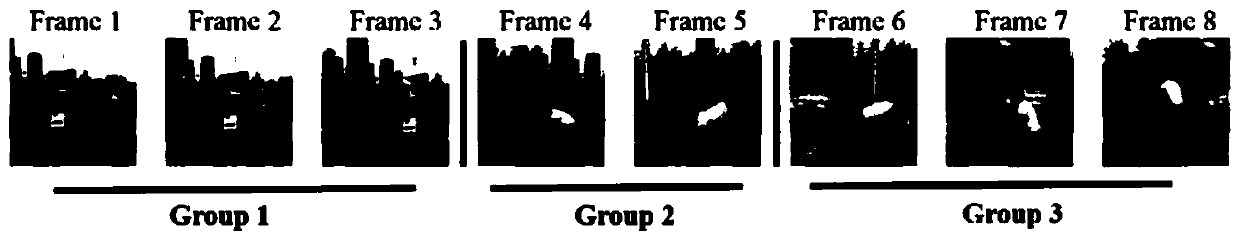

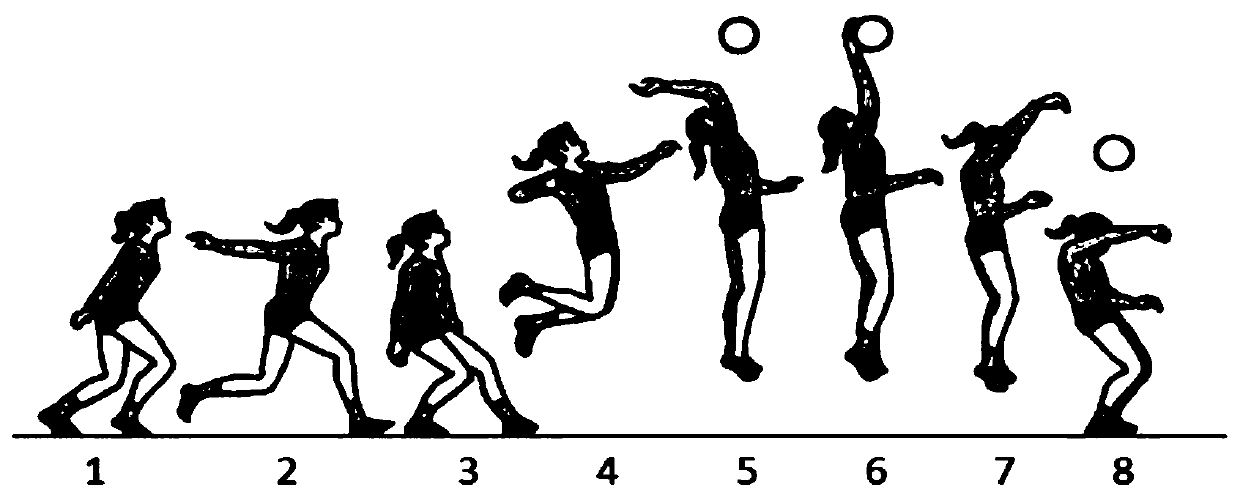

Examples

Embodiment Construction

[0051] In the process of implementing the technical solutions provided by the embodiments of the present invention, the inventors of the present application found that the supervised video feature learning methods currently in use rely on video labels and classification information, which require manual labeling. In actual business application scenarios with huge amounts of data, this consumes considerable resources and cost. Existing unsupervised video feature learning methods alleviate this problem to a certain extent. However, after careful study, the inventors found that current unsupervised methods mainly use the continuous motion information of the main object in the video to learn the visual properties of the video without supervision. Because these methods depend on the motion of objects in the video, they perform poorly when there is little or no change in the video picture...
