Learning system and learning method

A learning system and differentiation technology, applied in the field of learning systems, that can solve the problems of over-specialization to features of the learning data and over-learning (overfitting).

Examples

First Embodiment

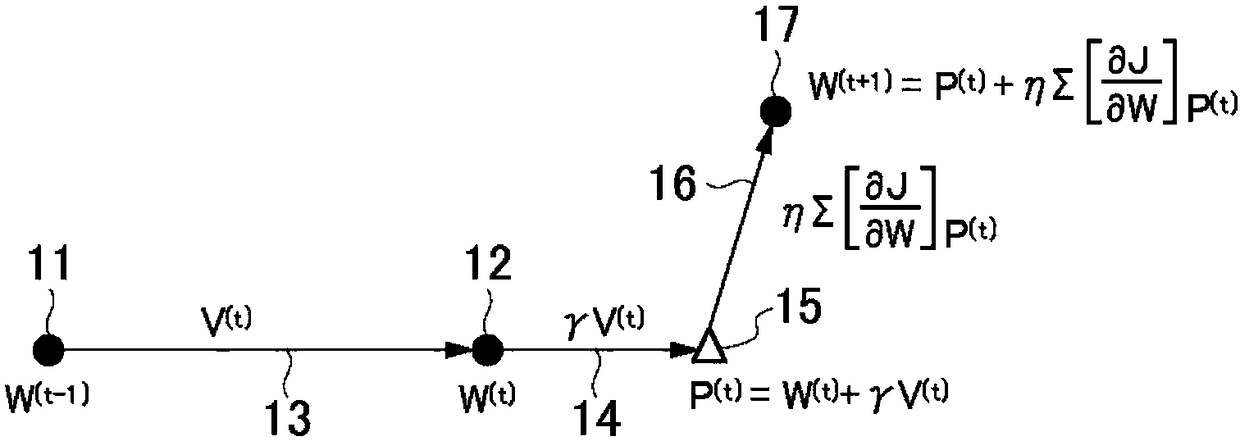

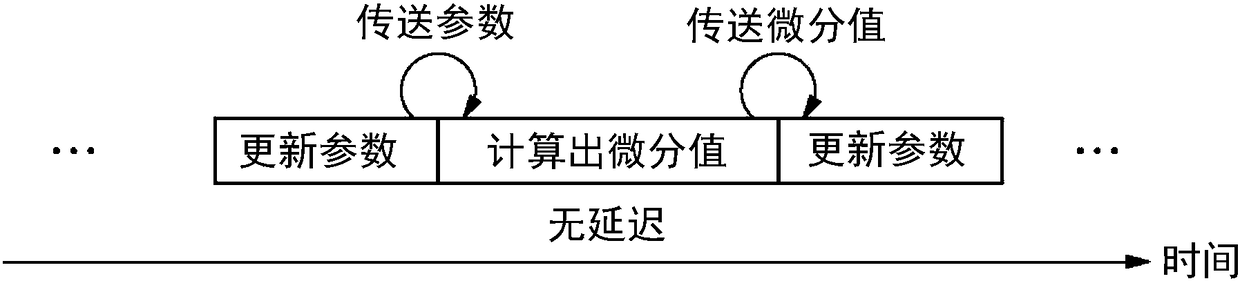

[0067] Distributed learning methods can be classified according to three questions: (1) "what" is communicated, (2) "with whom" it is communicated, and (3) "when" it is communicated.

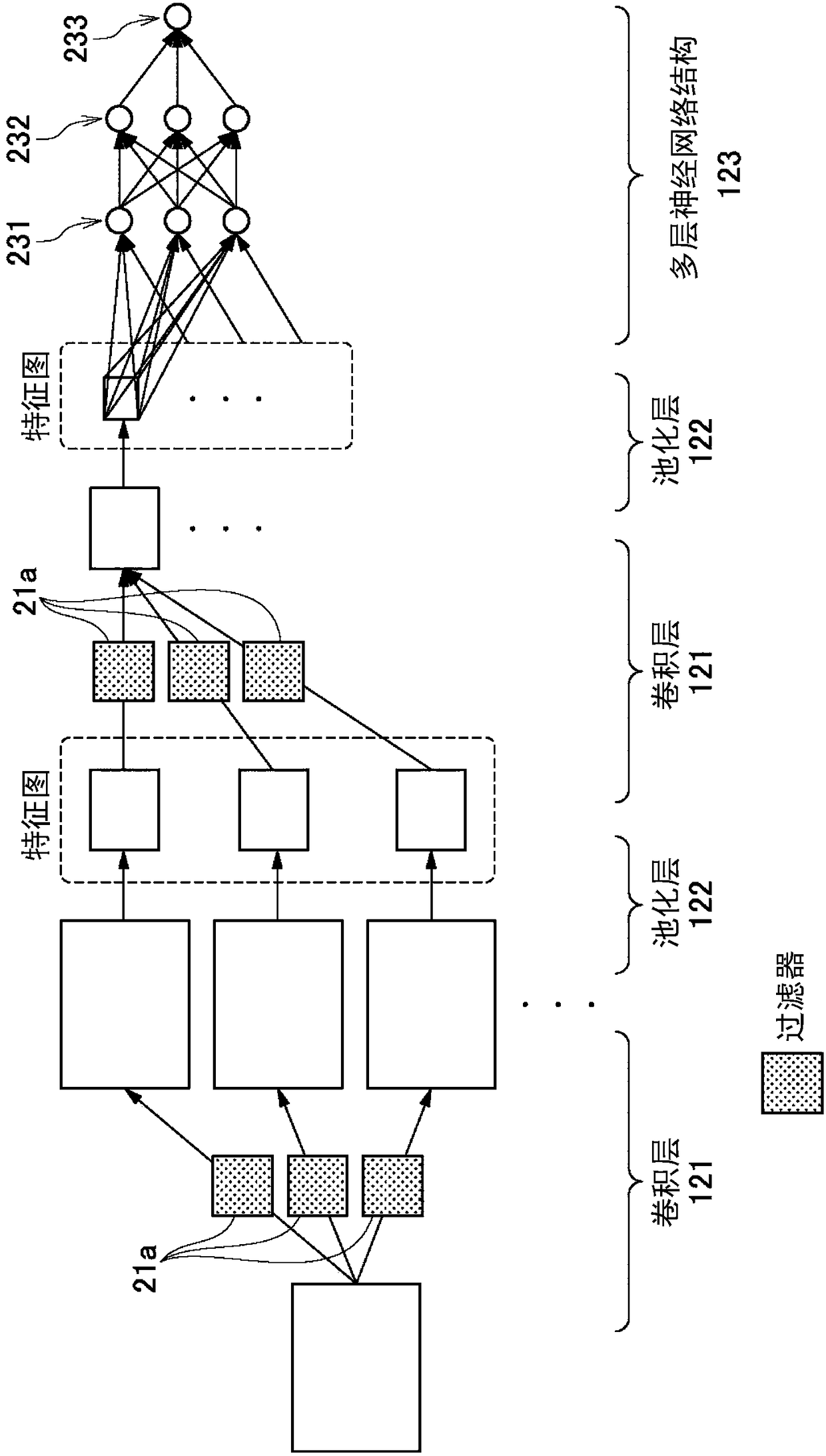

[0068] First, from the perspective of "what" is communicated, there are two approaches: model parallelism and data parallelism. In the model-parallel approach, the model itself is distributed across computers, and the intermediate variables of the neural network are communicated. In the data-parallel approach, the computation of the model is contained within a single computer, and the differential values calculated by each computer are communicated.
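As a rough illustration of the model-parallel case, the sketch below splits a two-layer network across two hypothetical workers and records the intermediate variable that would have to be sent from one to the other. The `Worker` class, the `model_parallel_forward` helper, and the weight values are assumptions made for illustration, not part of the specification; a real system would send the activations over a network instead of appending them to a list.

```python
def matvec(W, x):
    """Multiply a matrix W (given as a list of rows) by a vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def relu(v):
    """Element-wise ReLU activation."""
    return [max(0.0, a) for a in v]


class Worker:
    """Hypothetical computer that holds the weights of exactly one layer."""

    def __init__(self, W, activation=None):
        self.W = W
        self.activation = activation

    def forward(self, x):
        h = matvec(self.W, x)
        return self.activation(h) if self.activation else h


def model_parallel_forward(workers, x):
    """Run the layers in order; each layer lives on a different worker.

    Returns the network output and the intermediate variables that would be
    communicated between consecutive workers.
    """
    messages = []
    h = x
    for i, worker in enumerate(workers):
        h = worker.forward(h)
        if i < len(workers) - 1:
            messages.append(list(h))  # "send" the activation to the next worker
    return h, messages


W1 = [[1.0, -1.0], [0.5, 2.0]]  # layer held by worker 1
W2 = [[1.0, 1.0]]               # layer held by worker 2
x = [2.0, 1.0]
out, msgs = model_parallel_forward([Worker(W1, relu), Worker(W2)], x)
reference = matvec(W2, relu(matvec(W1, x)))  # same network on one computer
```

Only the hidden activation crosses the boundary between workers, so the output matches the single-computer `reference`. This is why model parallelism is attractive when a model is too large for one machine.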

[0069] In the data-parallel approach, each computer processes different data, so a large amount of data can be processed at once. When the mini-batch stochastic gradient method is assumed, it is natural to parallelize over the data within a mini-batch, so this specification mainly assumes data parallelism.
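Here is a minimal sketch of the data-parallel case. It assumes a scalar linear model trained with a mean squared error loss. Each hypothetical worker computes the differential value on its own shard of the mini-batch, and the communicated values are then averaged, weighted by shard size. The helper names (`grad_mse`, `split`, `data_parallel_grad`) and the toy data are assumptions. The point is only that the averaged result equals the gradient of the whole mini-batch, which is why splitting a mini-batch across computers is natural.

```python
def grad_mse(w, batch):
    """Mean gradient of 0.5 * (w*x - y)**2 over a batch, for a scalar weight w."""
    return sum((w * x - y) * x for x, y in batch) / len(batch)


def split(batch, k):
    """Split a mini-batch into k contiguous shards of (nearly) equal size."""
    assert 1 <= k <= len(batch), "every worker needs at least one example"
    size, rem = divmod(len(batch), k)
    shards, start = [], 0
    for i in range(k):
        end = start + size + (1 if i < rem else 0)
        shards.append(batch[start:end])
        start = end
    return shards


def data_parallel_grad(w, batch, k):
    """Simulate k workers, each computing the differential value on its shard."""
    shards = split(batch, k)
    # Local computation: the whole model runs on each worker, on different data.
    local = [(grad_mse(w, shard), len(shard)) for shard in shards]
    # Communication step: gather the gradients and take a size-weighted average.
    total = sum(n for _, n in local)
    return sum(g * n for g, n in local) / total


batch = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.2), (5.0, 9.8)]
w = 0.0
eta = 0.01  # hypothetical learning coefficient
g = data_parallel_grad(w, batch, k=2)
w = w - eta * g  # every worker applies the same update and stays in sync
```

In a real cluster the averaging step would typically be an all-reduce operation. Here it is a plain Python sum.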

[0070] Model parallelism is very useful when dealing with huge neural ...

Second Embodiment

[0114] An improvement to the first embodiment is described below. In the early stage of learning, the absolute value of the differential value is sometimes large, and as a result parameter updates tend to be unstable. Specifically, if the objective function fluctuates too much, it may decrease only slowly, or its value may even diverge.
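To illustrate how a large differential value can make updates unstable, consider the toy objective f(w) = 0.5·a·w², whose derivative is a·w. A gradient step gives w ← (1 − η·a)·w. The iterates shrink when |1 − η·a| < 1 and grow without bound when η·a > 2. The sketch below is a hypothetical example, not taken from the specification. It keeps η fixed and compares a gently sloped objective with a steep one whose gradients are large.

```python
def run_gd(a, eta, w0, steps):
    """Plain gradient descent on the toy objective f(w) = 0.5 * a * w**2.

    Returns the objective value recorded before each update.
    """
    w = w0
    history = []
    for _ in range(steps):
        history.append(0.5 * a * w * w)
        grad = a * w        # differential value of f at w
        w = w - eta * grad  # same fixed learning coefficient in both runs
    return history


eta = 0.1
gentle = run_gd(a=1.0, eta=eta, w0=1.0, steps=20)  # w scales by 0.9 per step
steep = run_gd(a=30.0, eta=eta, w0=1.0, steps=20)  # w scales by about -2.0 per step
```

With the large gradients of the steep objective, each step overshoots the minimum and the objective value grows. This mirrors the divergence described in [0114].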

[0115] Empirically, this instability is specific to the early stage of learning and is not a problem in the middle or later stages. To reduce the instability in the early stage of learning, the following methods are preferably adopted.

[0116] In the first example, when the parameter update unit 3 updates the parameters using the value obtained by multiplying the differential value by the learning coefficient η, η is set to take a smaller value in the early stage of learning, and it can be incre...
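A small learning coefficient early in learning that is raised later is commonly implemented as a linear warmup. The sketch below assumes such a linear ramp. The schedule function `warmup_eta` and its parameters `eta_max` and `warmup_steps` are hypothetical, because the specification's exact rule is cut off here. The update itself follows the text and plays the role of parameter update unit 3: subtract η times the differential value.

```python
def warmup_eta(step, eta_max, warmup_steps):
    """Hypothetical linear warmup schedule for the learning coefficient.

    eta rises from eta_max / warmup_steps at step 0 to eta_max at
    step warmup_steps - 1, then stays at eta_max.
    """
    if warmup_steps <= 0:
        return eta_max
    return eta_max * min(1.0, (step + 1) / warmup_steps)


def update(params, grads, eta):
    """Parameter update: subtract eta times the differential value."""
    return [p - eta * g for p, g in zip(params, grads)]


params = [1.0, 2.0]
grads = [0.5, -1.0]
schedule = [warmup_eta(t, eta_max=0.1, warmup_steps=10) for t in range(20)]
first_update = update(params, grads, schedule[0])  # small, cautious first step
```

Because the coefficient starts small, the large differential values typical of early learning move the parameters only a little. The full coefficient is reached only after the warmup period.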
