A robot manipulator control method based on multi-Leapmotion virtual gesture fusion

This technology relates to manipulators and gesture control and applies to manipulators, program-controlled manipulators, manufacturing tools, etc. It addresses problems such as the complexity of the robot control process and achieves low cost, strong fault tolerance, and high accuracy.


Detailed Description of the Embodiments

[0046] The present invention will be further described below in conjunction with the drawings and embodiments.

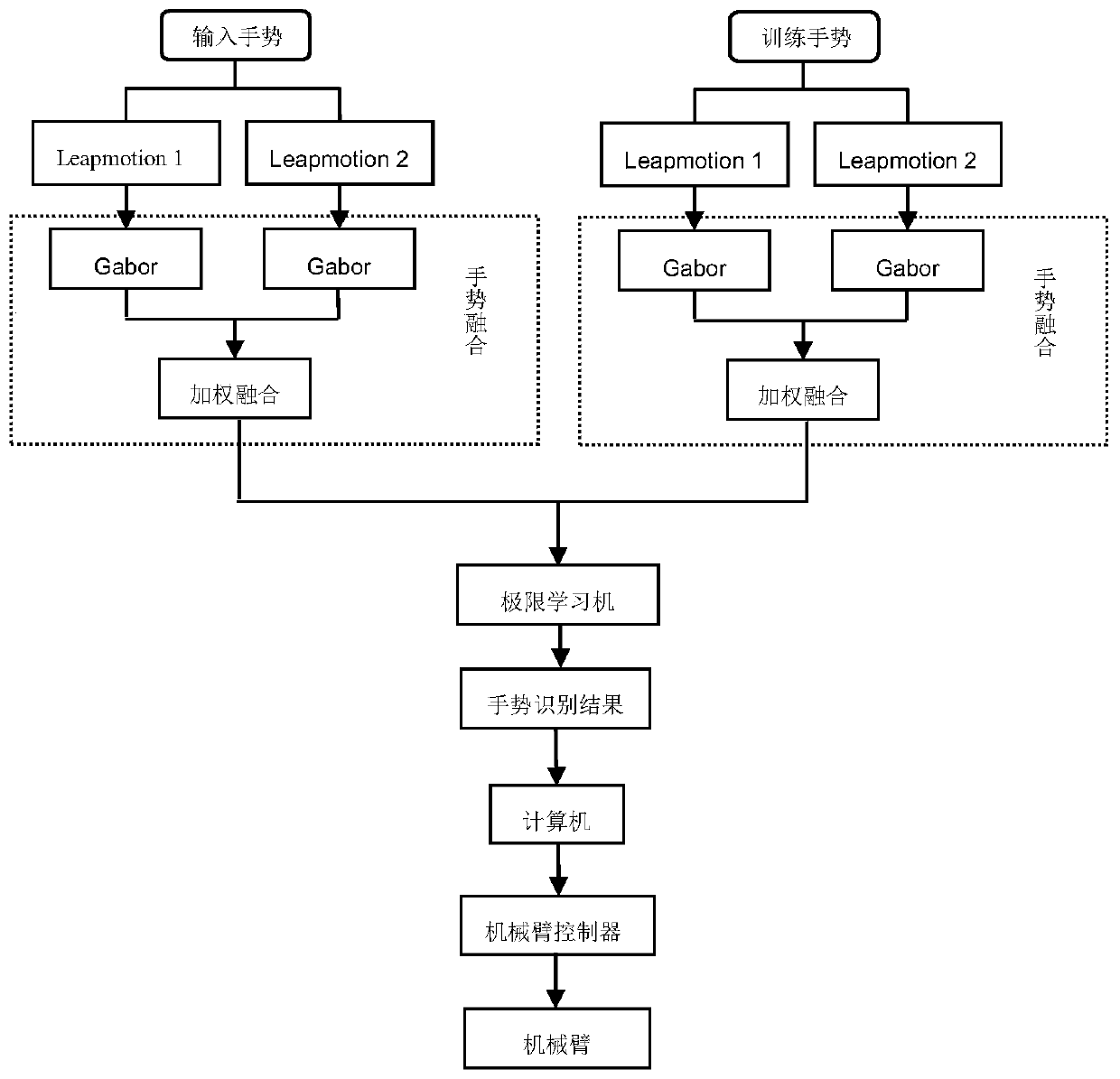

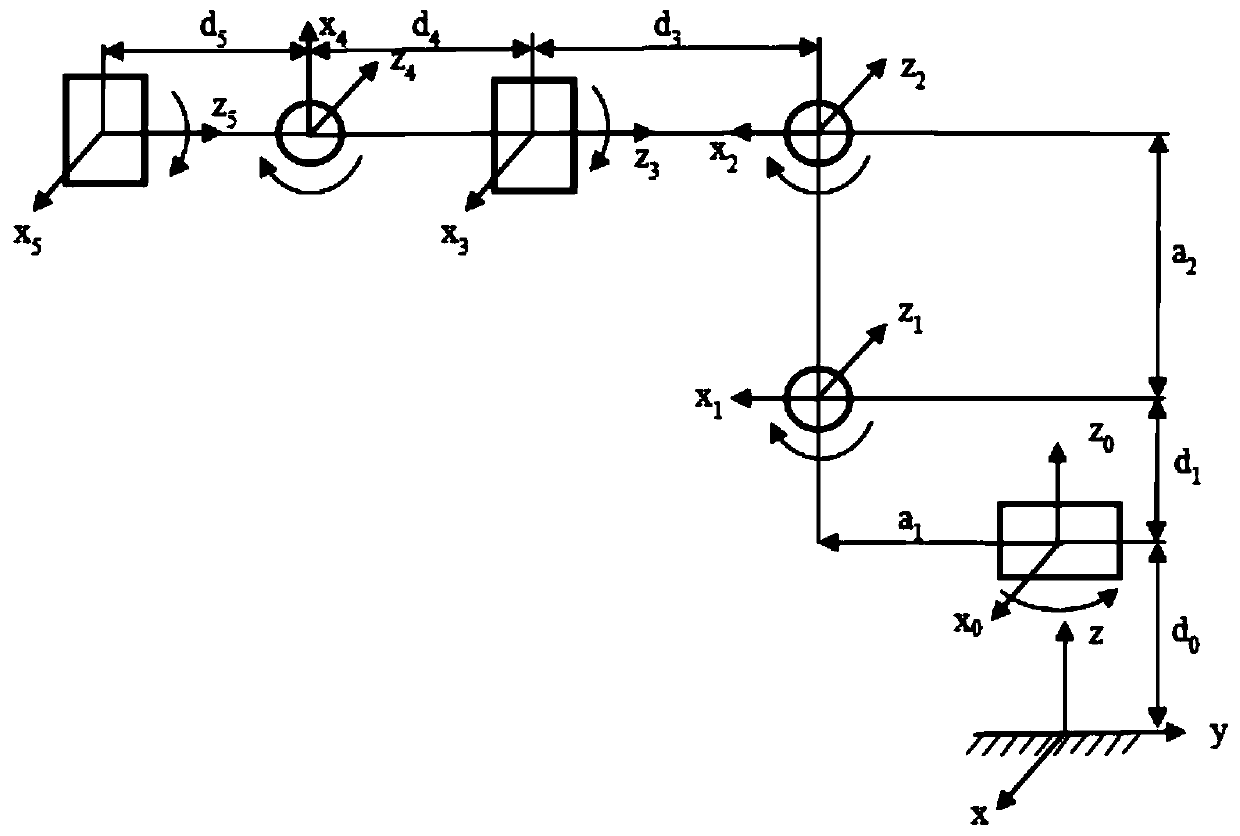

[0047] A robot manipulator control method based on fusing virtual gestures captured by multiple Leapmotion sensors comprises the following steps:

[0048] Step 1: Set up the gesture collection device;

[0049] Place at least two Leapmotion sensors at the center of the inner upper and lower surfaces of the gesture collection area;
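Step 1 does not say how the readings of the upper and lower sensors are combined. As a minimal sketch, assuming each sensor reports millimetre coordinates with y pointing away from the device, and assuming the upper sensor faces down, sits directly above the lower one at a known height, and is rotated 180 degrees about its z-axis (a hypothetical mounting), a hand point seen by the upper sensor can be mapped into the lower sensor's frame and averaged with the lower sensor's estimate, falling back to whichever sensor still sees the point when the other view is occluded. `Point3`, `upper_to_lower_frame`, and `fuse_points` are illustrative names, not part of the source or of any Leap Motion SDK.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Point3:
    """A 3-D point in millimetres."""
    x: float
    y: float
    z: float


def upper_to_lower_frame(p: Point3, height_mm: float) -> Point3:
    """Express a point reported by the upper, downward-facing sensor in the
    lower sensor's frame.

    Assumed mounting (hypothetical): the upper sensor is directly above the
    lower one at height_mm and rotated 180 degrees about its z-axis, so x and
    y flip sign and the origin is shifted up by height_mm.
    """
    return Point3(-p.x, height_mm - p.y, p.z)


def fuse_points(lower: Optional[Point3], upper: Optional[Point3],
                height_mm: float, w_lower: float = 0.5) -> Optional[Point3]:
    """Fuse one hand point seen by the two sensors (hypothetical helper).

    If only one sensor sees the point (e.g. the other view is occluded), that
    estimate is used alone; if both see it, a weighted average is returned.
    Returns None when neither sensor sees the point.
    """
    upper_in_lower = None if upper is None else upper_to_lower_frame(upper, height_mm)
    if lower is None:
        return upper_in_lower
    if upper_in_lower is None:
        return lower
    w_upper = 1.0 - w_lower
    return Point3(w_lower * lower.x + w_upper * upper_in_lower.x,
                  w_lower * lower.y + w_upper * upper_in_lower.y,
                  w_lower * lower.z + w_upper * upper_in_lower.z)
```

If the real mounting differs, only `upper_to_lower_frame` needs to change; in practice that transform would come from a calibration step rather than a fixed assumption.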

[0050] Step 2: Use the gesture acquisition device to collect Leapmotion image sequences of the gestures that control the robotic arm, and recognize the gestures with a gesture recognition model based on a kernel extreme learning machine (KELM);
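The description does not give the model's equations. For orientation, the commonly used KELM formulation is sketched below, assuming $N$ training sequences encoded as vectors $\mathbf{x}_i$, a one-hot target matrix $\mathbf{T}$ over the gesture categories, a regularization coefficient $C$, and a kernel $K$ (often a Gaussian RBF, $K(\mathbf{x}, \mathbf{x}') = \exp(-\gamma\lVert\mathbf{x} - \mathbf{x}'\rVert^2)$). These symbols are introduced here for illustration and do not come from the source.

$$
\Omega_{ij} = K(\mathbf{x}_i, \mathbf{x}_j), \qquad
\boldsymbol{\beta} = \left(\frac{\mathbf{I}}{C} + \boldsymbol{\Omega}\right)^{-1}\mathbf{T}
$$

$$
f(\mathbf{x}) = \big[K(\mathbf{x}, \mathbf{x}_1), \ldots, K(\mathbf{x}, \mathbf{x}_N)\big]\,\boldsymbol{\beta}, \qquad
\hat{y} = \arg\max_{j} f_j(\mathbf{x})
$$

Training therefore reduces to one regularized linear solve for the output weights $\boldsymbol{\beta}$, and recognition picks the gesture category whose output $f_j(\mathbf{x})$ is largest.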

[0051] The KELM-based gesture recognition model is trained by taking, in turn, the Leapmotion image sequence of each gesture collected by the gesture acquisition device as input data and the category number of the corresponding gesture as output data, to perform machine learning training on the kernel...
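The training described above (sequences in, category numbers out) can be sketched in pure Python as follows. This is a minimal sketch, assuming each Leapmotion image sequence has already been reduced to a list of per-frame numeric features and using a Gaussian RBF kernel; `sequence_to_vector`, `KELM`, `C`, and `gamma` are hypothetical names and parameters, since the source does not specify the feature encoding, kernel, or hyperparameters.

```python
import math
from typing import List, Sequence

Vector = List[float]


def sequence_to_vector(frames: Sequence[Sequence[float]]) -> Vector:
    """Hypothetical feature step: concatenate the per-frame features of one
    Leapmotion sequence into a single input vector."""
    return [float(v) for frame in frames for v in frame]


def rbf_kernel(a: Sequence[float], b: Sequence[float], gamma: float) -> float:
    """Gaussian (RBF) kernel K(a, b) = exp(-gamma * ||a - b||^2)."""
    return math.exp(-gamma * sum((x - y) ** 2 for x, y in zip(a, b)))


def solve(A: List[List[float]], B: List[List[float]]) -> List[List[float]]:
    """Solve A X = B by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [list(A[i]) + list(B[i]) for i in range(n)]  # augmented matrix [A | B]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        p = M[col][col]
        M[col] = [v / p for v in M[col]]
        for r in range(n):
            f = M[r][col]
            if r != col and f != 0.0:
                M[r] = [vr - f * vc for vr, vc in zip(M[r], M[col])]
    return [row[n:] for row in M]


class KELM:
    """Minimal kernel extreme learning machine classifier (RBF kernel)."""

    def __init__(self, C: float = 100.0, gamma: float = 0.1) -> None:
        self.C = C          # regularization coefficient
        self.gamma = gamma  # RBF kernel width parameter

    def fit(self, X: List[Vector], labels: List[int]) -> "KELM":
        self.X = X
        self.classes = sorted(set(labels))
        n = len(X)
        # One-hot target matrix T built from the gesture category numbers.
        T = [[1.0 if lab == c else 0.0 for c in self.classes] for lab in labels]
        # A = I/C + Omega, where Omega[i][j] = K(x_i, x_j).
        A = [[rbf_kernel(X[i], X[j], self.gamma) + (1.0 / self.C if i == j else 0.0)
              for j in range(n)] for i in range(n)]
        self.beta = solve(A, T)  # output weights: (I/C + Omega)^-1 T
        return self

    def predict(self, x: Vector) -> int:
        k = [rbf_kernel(x, xi, self.gamma) for xi in self.X]
        scores = [sum(k[i] * self.beta[i][c] for i in range(len(k)))
                  for c in range(len(self.classes))]
        # The predicted category number is the output with the largest score.
        return self.classes[max(range(len(scores)), key=scores.__getitem__)]
```

For example, `KELM(C=100.0, gamma=0.1).fit(X, labels)` trains on encoded sequences `X` paired with their category numbers `labels`, and `predict` returns the category number for a new encoded sequence. A production system would more likely use a linear-algebra library for the solve than the hand-written elimination shown here.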
