Language input association detection method based on attention model

The technology relates to attention models and detection methods and is applied to data-processing input/output, speech analysis, speech recognition, and related fields. It addresses three problems of existing approaches: they increase the number of model parameters, they add hidden nodes, and they cannot explicitly express the correlation between words. The result is improved performance.

AI Technical Summary

Problems solved by technology

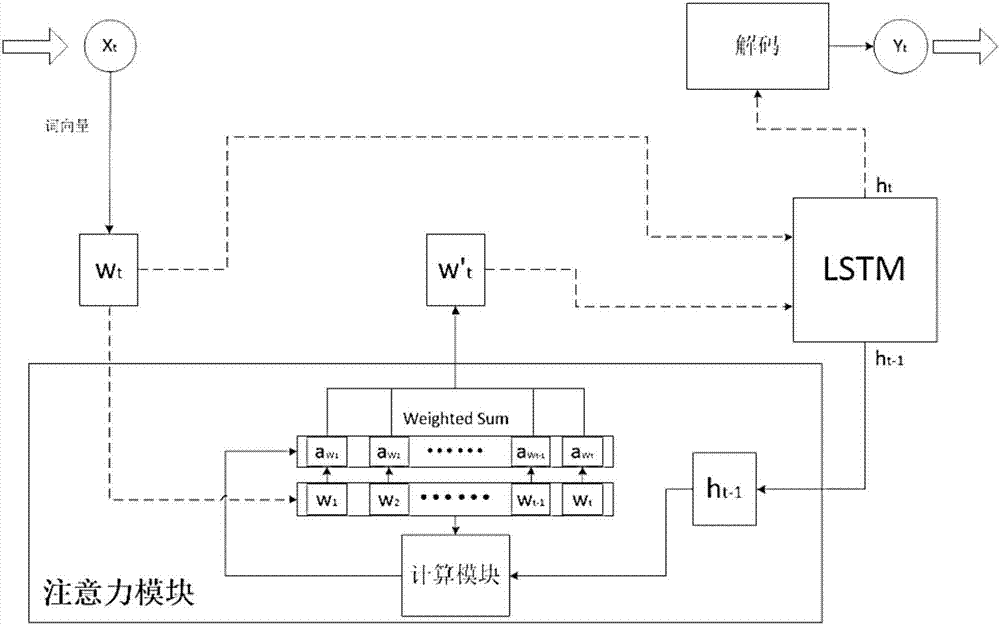

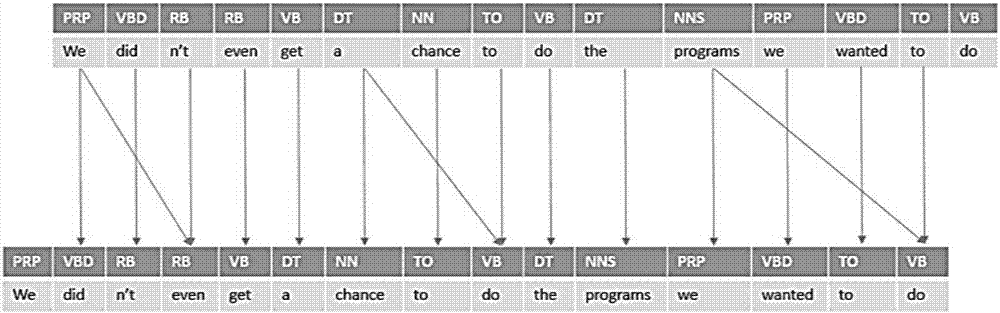

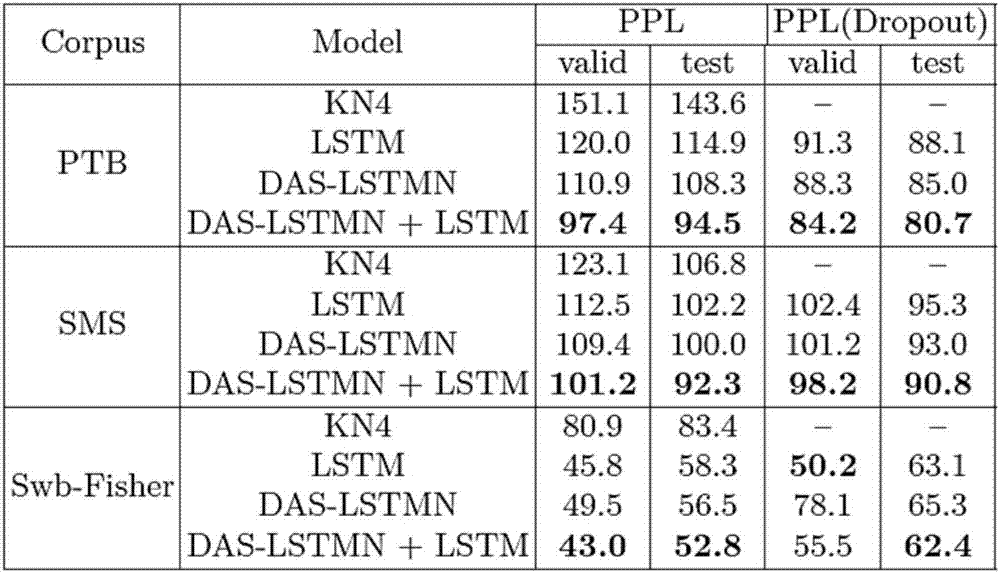

Embodiment Construction

[0041] This embodiment includes the following steps:

[0042] Step 101: collect the training corpus required for the language model and preprocess it. First, consider the requirements of the target application and collect corpus data from the corresponding domain; in this embodiment, a corpus of spoken telephone conversations is collected. Each word in the corpus is converted into its numerical index in the vocabulary, and any word that does not appear in the vocabulary is replaced with a special unknown-word token and mapped to that token's index. In addition, 10% of the data is held out as a validation set to guard against overfitting.
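
The preprocessing in Step 101 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: the token name `<unk>`, reserving index 0 for it, building the vocabulary from a plain word list, and the helper names `build_vocab`, `encode`, and `train_valid_split` are all hypothetical. Only the 10% validation fraction comes from the text.

```python
import random
from typing import Dict, List, Sequence, Tuple

# Hypothetical name for the unknown-word token (assumption, not from the source).
UNK = "<unk>"


def build_vocab(words: Sequence[str]) -> Dict[str, int]:
    """Assign each vocabulary word a numeric index; index 0 is reserved for UNK (assumption)."""
    vocab = {UNK: 0}
    for w in words:
        if w not in vocab:
            vocab[w] = len(vocab)
    return vocab


def encode(sentence: Sequence[str], vocab: Dict[str, int]) -> List[int]:
    """Map each word to its index; out-of-vocabulary words get the UNK index."""
    unk_id = vocab[UNK]
    return [vocab.get(w, unk_id) for w in sentence]


def train_valid_split(
    data: List[List[int]], valid_fraction: float = 0.1, seed: int = 0
) -> Tuple[List[List[int]], List[List[int]]]:
    """Shuffle a copy of the sentences and hold out valid_fraction of them for validation."""
    shuffled = data[:]
    random.Random(seed).shuffle(shuffled)
    n_valid = int(len(shuffled) * valid_fraction)
    return shuffled[n_valid:], shuffled[:n_valid]
```

For example, with the vocabulary `["hello", "how", "are", "you"]`, `encode(["hello", "there"], vocab)` gives `[1, 0]`, because "there" is out of vocabulary. Shuffling with a fixed seed before splitting keeps the held-out set reproducible across runs.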

[0043] Step 102: process the data and generate the corresponding labels. For example, if the word sequence is $w_1, w_2, \ldots, w_{n-1}, w_n$, then the training sequence is $w_1, w_2, \ldots, w_{n-1}$ and the corresponding label sequence is $w_2, \ldots, w_{n-1}, w_n$, where the training and label sequences are in...
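
The shift in Step 102 amounts to slicing each index sequence two ways. The following Python sketch assumes each sentence is already a list of word indices from Step 101; the function name `make_lm_pair` is hypothetical, and how the patent batches or pads these sequences is not stated in this excerpt.

```python
from typing import List, Sequence, Tuple


def make_lm_pair(ids: Sequence[int]) -> Tuple[List[int], List[int]]:
    """Split w_1..w_n into an input w_1..w_{n-1} and a label w_2..w_n.

    labels[t] is the word that immediately follows inputs[t].
    """
    if len(ids) < 2:
        raise ValueError("need at least two words to form an input/label pair")
    return list(ids[:-1]), list(ids[1:])
```

For instance, `make_lm_pair([5, 8, 2, 9])` returns `([5, 8, 2], [8, 2, 9])`: both sequences have length n-1, and at every position the label is the next word in the original sentence.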

© 2025 PatSnap. All rights reserved.