Virtual-real occlusion handling method based on a depth image data stream

A virtual-real occlusion handling method, applied in image data processing, 3D image processing, image enhancement, and related fields. It addresses problems such as time-consuming processing, the lack of 3D information about the real scene, and the difficulty of handling the occlusion relationship between virtual objects and the real scene, and it achieves improved accuracy and robustness.


Embodiment Construction

[0023] Embodiments of the present invention will be described in detail below in conjunction with the accompanying drawings.

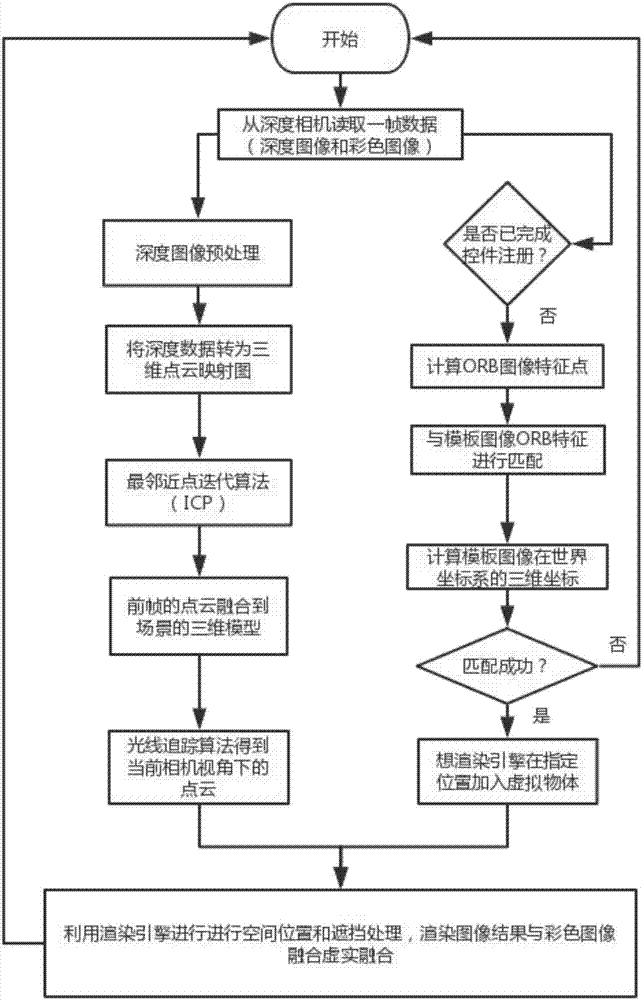

[0024] As shown in Figure 1, the implementation of the present invention is divided into four main steps: depth data preprocessing, construction of a 3D point cloud model of the scene, 3D spatial registration, and virtual-real fusion rendering.

[0025] Step 1. Depth data preprocessing

[0026] Its main steps are:

[0027] (11) For the depth data in the given input RGBD (color + depth) data stream, set the thresholds $w_{\min}$ and $w_{\max}$ according to the error range of the depth camera. Points whose depth value lies between $w_{\min}$ and $w_{\max}$ are regarded as credible, and only the depth data $I$ within the threshold range are kept.
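The thresholding in step (11) can be sketched as follows. This is a minimal illustration, not the patent's implementation: the function name `threshold_depth`, the use of `0.0` as the marker for discarded pixels, the inclusive bounds, and the sample values (assumed to be in metres) are all assumptions made for the example.

```python
from typing import List

Depth = List[List[float]]  # row-major depth map, one float per pixel


def threshold_depth(depth: Depth, w_min: float, w_max: float,
                    invalid: float = 0.0) -> Depth:
    """Keep depth values within [w_min, w_max]; replace the rest with `invalid`.

    Treating the bounds as inclusive and using 0.0 as the invalid marker
    are assumptions of this sketch, not details taken from the source.
    """
    return [[d if w_min <= d <= w_max else invalid for d in row]
            for row in depth]


# Hypothetical 2x3 depth frame; 0.0 already marks a missing reading.
frame = [[0.2, 1.5, 3.0],
         [4.9, 0.0, 2.2]]
credible = threshold_depth(frame, w_min=0.5, w_max=4.0)
```

In practice the bounds would come from the working range stated for the specific depth camera, as the paragraph above describes.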

[0028] (12) Perform fast bilateral filtering on each pixel of the depth data, as follows:

[0029]

[0030] where $p_j$ is a pixel in the neighborhood of pixel $p_i$, and $s$ is the number of effective pixels i...
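For orientation, a textbook bilateral filter over a depth map might look like the sketch below. It is not the fast variant the patent refers to, whose formula is not reproduced in the text above. The function name, the Gaussian spatial and range weights, the parameters `radius`, `sigma_space`, and `sigma_range`, and the convention that `0.0` marks an invalid pixel are all assumptions. Invalid pixels are skipped, so only effective neighbors contribute to each output value.

```python
import math
from typing import List

Depth = List[List[float]]  # row-major depth map, one float per pixel


def bilateral_filter_depth(depth: Depth, radius: int = 2,
                           sigma_space: float = 2.0,
                           sigma_range: float = 0.1,
                           invalid: float = 0.0) -> Depth:
    """Edge-preserving smoothing of a depth map (standard bilateral filter).

    Each valid pixel becomes a weighted mean of the valid pixels in its
    (2*radius+1)^2 window. A weight decays with spatial distance and with
    depth difference, so depth discontinuities are not blurred.
    """
    h, w = len(depth), len(depth[0])
    out = [[invalid] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            center = depth[y][x]
            if center == invalid:
                continue  # invalid pixels stay invalid in the output
            total = 0.0
            weight_sum = 0.0
            for ny in range(max(0, y - radius), min(h, y + radius + 1)):
                for nx in range(max(0, x - radius), min(w, x + radius + 1)):
                    d = depth[ny][nx]
                    if d == invalid:
                        continue  # skip neighbors with no credible depth
                    spatial = ((ny - y) ** 2 + (nx - x) ** 2) / (2 * sigma_space ** 2)
                    rng = (d - center) ** 2 / (2 * sigma_range ** 2)
                    weight = math.exp(-(spatial + rng))
                    total += weight * d
                    weight_sum += weight
            # weight_sum >= 1 because the center pixel has weight exp(0) = 1
            out[y][x] = total / weight_sum
    return out


# Hypothetical row with a depth edge between 1 m and 3 m.
row = [[1.0, 1.02, 0.98, 3.0, 3.01, 2.99]]
smoothed = bilateral_filter_depth(row)
```

The range term is what keeps the edge intact: neighbors on the far side of the discontinuity receive a negligible weight, so the two surfaces are smoothed separately.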

