Pre-training and/or transfer learning for sequence taggers

A pre-training and machine learning technology, applied in the field of pre-training and/or transfer learning for sequence taggers, that can address problems such as the failure of CRFs to use data

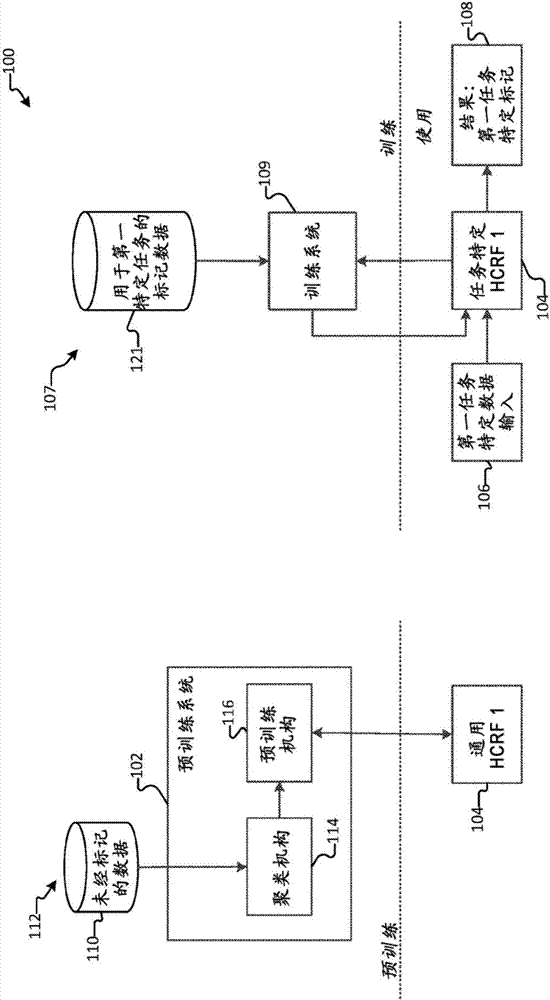

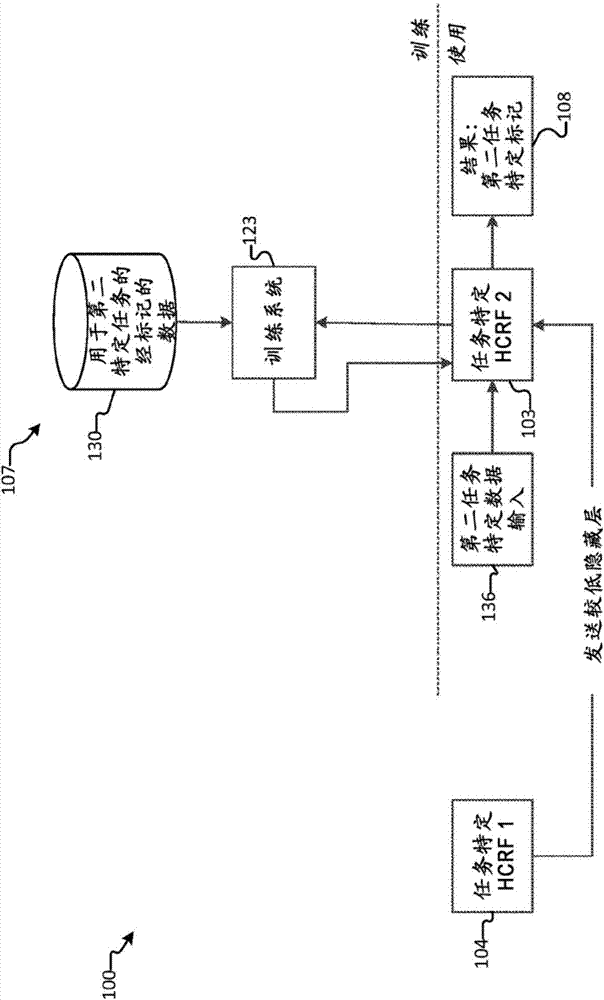

Examples

Example 1

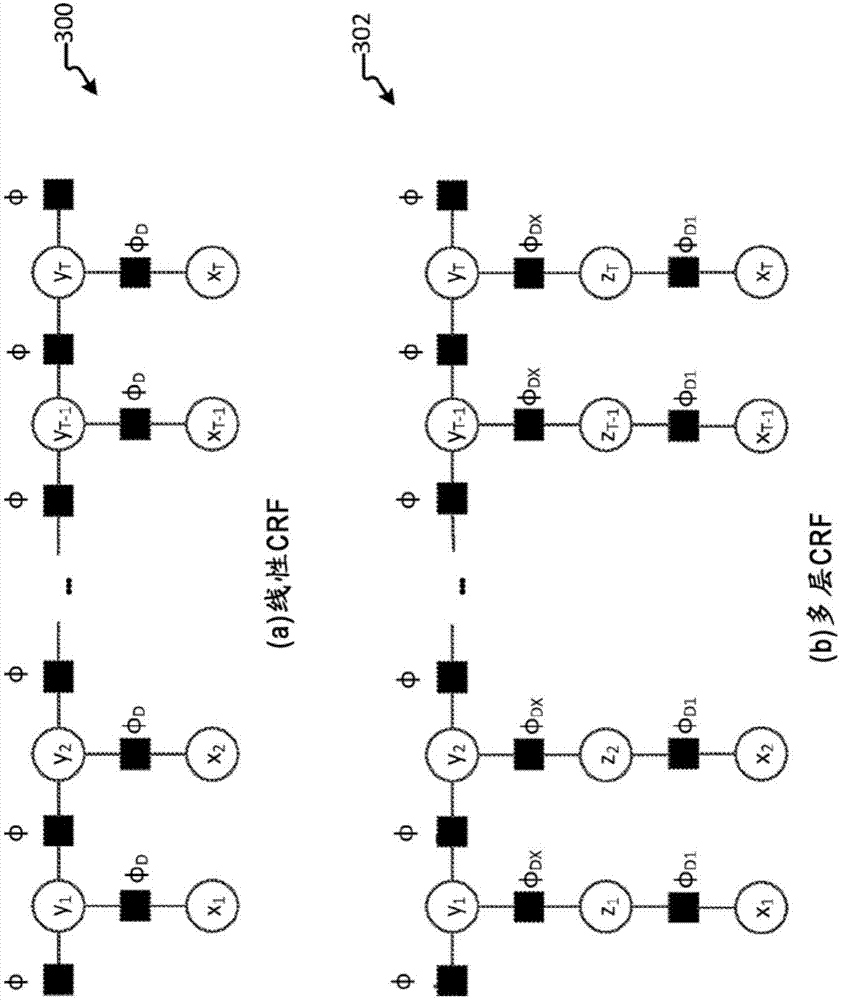

[0104] Experiments were conducted to compare a traditional CRF, an HCRF, and a pre-trained HCRF (PHCRF). Traditional CRFs have no hidden layers, so they map input features directly to task-specific labels through a linear function. The HCRF uses 300 hidden units, which form a shared representation of the features. However, the HCRF has not undergone any pre-training. The PHCRF, based on the systems and methods disclosed herein, has been pre-trained with unlabeled data. All three CRFs are used to build language understanding models, and each is trained on fully labeled crowdsourced data for a specific application. The three CRFs were applied to various query domains. Hundreds of tagging tasks were performed across these domains, which include alarms, calendars, communications, notes, mobile applications, locations, reminders, and weather. Every CRF was run on every query in every domain. Table 1 below shows the characteristics...
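The contrast between the traditional CRF and the HCRF can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented implementation. The function names `crf_emissions`, `hcrf_emissions`, and `viterbi` are hypothetical. The sigmoid activation is an assumption, and the hidden size is a free parameter (the experiment above uses 300 units). The sketch shows decoding only. Training and the pre-training procedure used for the PHCRF are not described in this excerpt and are not shown. A PHCRF would differ only in that its hidden-layer weights come from pre-training on unlabeled data instead of being trained from scratch.

```python
import math
from typing import List

Matrix = List[List[float]]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def matvec(W: Matrix, x: List[float]) -> List[float]:
    # Multiply a weight matrix (rows = outputs) by a feature vector.
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def crf_emissions(X: Matrix, W_out: Matrix) -> Matrix:
    # Traditional CRF (hypothetical sketch): per-token label scores are a
    # linear function of the input features, with no hidden layer.
    return [matvec(W_out, x) for x in X]


def hcrf_emissions(X: Matrix, W_hidden: Matrix, W_out: Matrix) -> Matrix:
    # HCRF (hypothetical sketch): features first pass through a shared hidden
    # layer (sigmoid is an assumed activation), then map linearly to labels.
    # For a PHCRF, W_hidden would be initialized by pre-training on unlabeled data.
    scores = []
    for x in X:
        h = [sigmoid(z) for z in matvec(W_hidden, x)]
        scores.append(matvec(W_out, h))
    return scores


def viterbi(emissions: Matrix, transitions: Matrix) -> List[int]:
    # Standard linear-chain Viterbi decoding: return the highest-scoring label
    # sequence given per-token emission scores and label-to-label transitions.
    n_labels = len(transitions)
    score = list(emissions[0])
    backptrs = []
    for e in emissions[1:]:
        new_score, ptr = [], []
        for j in range(n_labels):
            best_i = max(range(n_labels), key=lambda i: score[i] + transitions[i][j])
            new_score.append(score[best_i] + transitions[best_i][j] + e[j])
            ptr.append(best_i)
        score = new_score
        backptrs.append(ptr)
    best = max(range(n_labels), key=lambda j: score[j])
    path = [best]
    for ptr in reversed(backptrs):
        best = ptr[best]
        path.append(best)
    return path[::-1]


# Usage: three tokens, two features, two labels.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
W_out = [[1.0, 0.0], [0.0, 1.0]]
free = [[0.0, 0.0], [0.0, 0.0]]          # no transition preference
sticky = [[0.0, -5.0], [-5.0, 0.0]]      # penalize switching labels
print(viterbi(crf_emissions(X, W_out), free))    # [0, 1, 0]
print(viterbi(crf_emissions(X, W_out), sticky))  # [0, 0, 0]
```

Both models share the same decoder. Only the emission function changes, which is the sense in which the hidden layer acts as a shared feature representation that pre-training can initialize.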
