A Human Action Classification Method Based on Pose Recognition

A pose recognition and human action classification technique, applied in the field of image processing. It addresses the problems of existing approaches, which cannot accurately represent the wide variety of human poses and which ignore details, and it achieves high classification accuracy across diverse arm movements.

Detailed Description of the Embodiments

[0029] The technical scheme of the present invention is described in further detail below with reference to the accompanying drawings:

[0030] Human action classification based on pose recognition proceeds in two stages. First, upper-body pose recognition is performed on the human motion images collected in the database to obtain a 'stickman model' (that is, skeleton features). A multi-class SVM is then trained on the obtained skeleton features to produce a classifier that can distinguish different actions, and the trained classifier is used to classify the different actions of the human body. Specifically:

[0031] 1. Human motion pose recognition

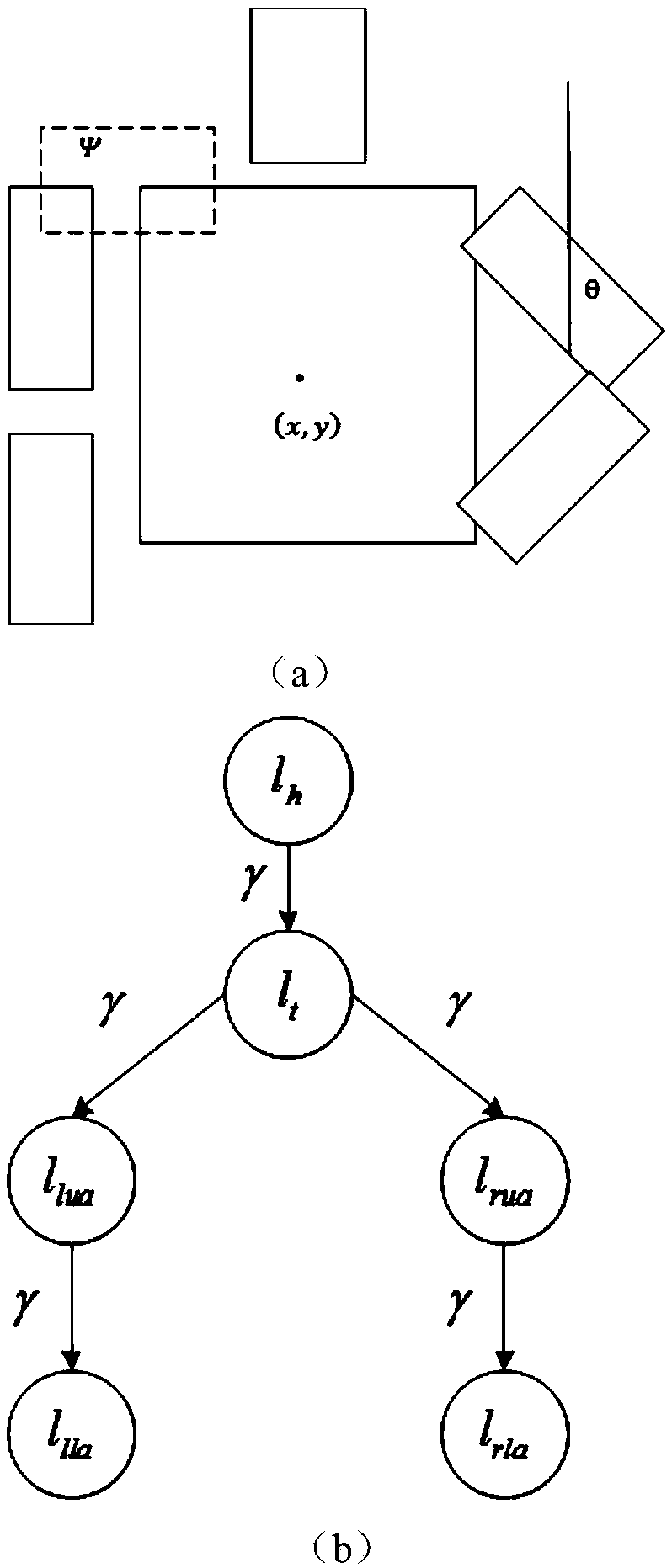

[0032] 1.1 Pictorial structures model

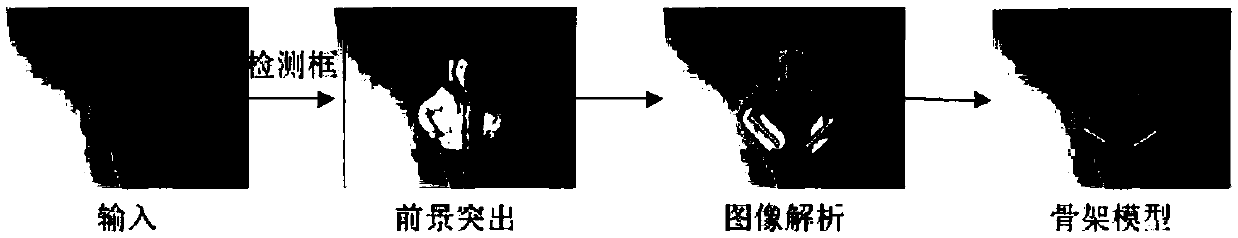

[0033] The invention uses pictorial structures to estimate the human body appearance model, and then performs pose recognition on the obtained human body structure model. The specific implementation steps include detecting the position of the human body, highlighting the foreground, and a...
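
The classification stage outlined in [0030] (skeleton features fed to a multi-class SVM) can be illustrated with a minimal sketch. The text available here does not specify the feature encoding, the kernel, or the training procedure, so everything below is an assumption made for illustration: limb orientations serve as features, the SVM is linear, the multi-class scheme is one-vs-rest, and training uses plain subgradient descent on the hinge loss. The function names, the toy poses, and the action labels are hypothetical.

```python
import math

def limb_features(segments):
    """Encode a stickman as unit direction vectors of its limb segments.
    `segments` is a list of ((x1, y1), (x2, y2)) pairs in image coordinates."""
    feats = []
    for (x1, y1), (x2, y2) in segments:
        dx, dy = x2 - x1, y2 - y1
        length = math.hypot(dx, dy) or 1.0  # guard against zero-length limbs
        feats.extend([dx / length, dy / length])
    return feats

def score(model, x):
    """Signed distance-like score w.x + b of a linear model."""
    w, b = model
    return sum(wj * xj for wj, xj in zip(w, x)) + b

def train_binary_svm(X, y, lam=0.01, lr=0.1, epochs=200):
    """Linear SVM: full-batch subgradient descent on the L2-regularised
    hinge loss. Labels in y must be +1 or -1. (Assumed training scheme.)"""
    w, b, n = [0.0] * len(X[0]), 0.0, len(X)
    for _ in range(epochs):
        grad_w = [lam * wj for wj in w]
        grad_b = 0.0
        for xi, yi in zip(X, y):
            if yi * score((w, b), xi) < 1:  # sample violates the margin
                for j, xj in enumerate(xi):
                    grad_w[j] -= yi * xj / n
                grad_b -= yi / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

def train_one_vs_rest(X, labels):
    """Train one binary SVM per action label (that label vs. all others)."""
    return {
        c: train_binary_svm(X, [1 if l == c else -1 for l in labels])
        for c in sorted(set(labels))
    }

def predict(models, x):
    """Return the label whose binary SVM gives the highest score."""
    return max(models, key=lambda c: score(models[c], x))

# Hypothetical toy data: each stickman has a left and a right arm segment
# starting at the shoulders (-1, 0) and (1, 0); y grows downwards.
train = [
    ("arms_down", [((-1, 0), (-1, 2)), ((1, 0), (1, 2))]),
    ("arms_down", [((-1, 0), (-1.2, 2)), ((1, 0), (1.2, 2))]),
    ("arms_up", [((-1, 0), (-1, -2)), ((1, 0), (1, -2))]),
    ("arms_up", [((-1, 0), (-1.2, -2)), ((1, 0), (1.2, -2))]),
    ("arms_out", [((-1, 0), (-3, 0)), ((1, 0), (3, 0))]),
    ("arms_out", [((-1, 0), (-3, 0.2)), ((1, 0), (3, 0.2))]),
]
labels = [label for label, _ in train]
X = [limb_features(segments) for _, segments in train]
models = train_one_vs_rest(X, labels)

# Classify an unseen pose with arms raised slightly above horizontal.
query = limb_features([((-1, 0), (-3, -0.3)), ((1, 0), (3, -0.3))])
print(predict(models, query))
```

In practice a library SVM with a tuned kernel would likely replace the hand-written trainer; the sketch only shows how skeleton features and a one-vs-rest decision rule fit together.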
