Cross-media retrieval method based on deep learning and consistent expression space learning

A technology based on deep learning and consistent expression space learning, applied in the field of cross-media retrieval. It addresses three problems of existing methods: they cannot measure the similarity between features of different modalities accurately, they cannot represent global content well, and they do not take the dimensions of the feature vectors into account.


Embodiment Construction

[0038] The present invention will be further described in detail below in conjunction with the accompanying drawings and embodiments.

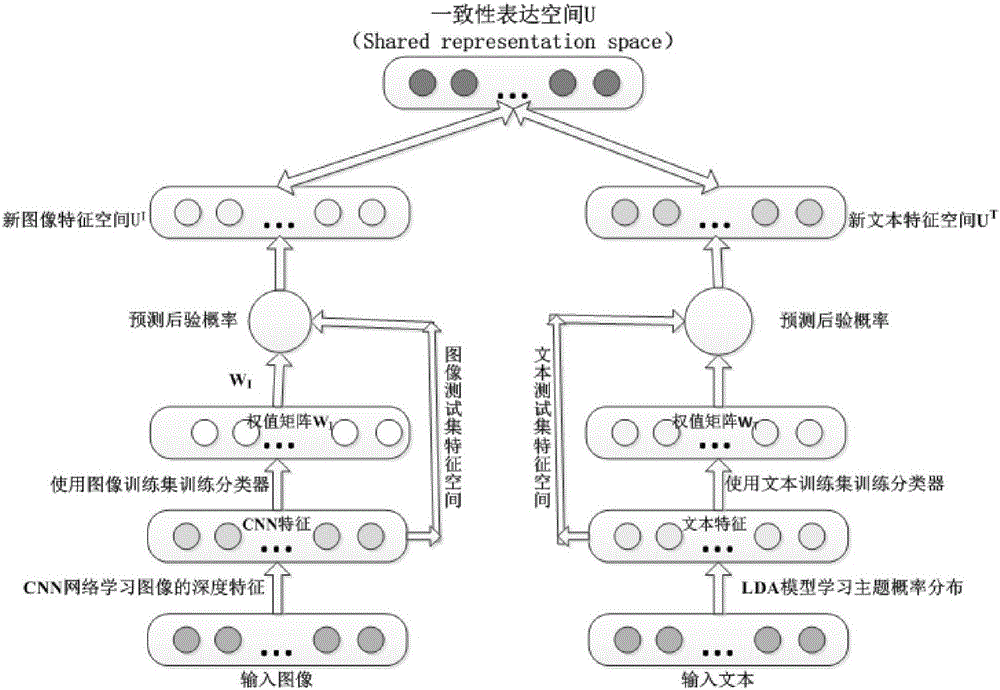

[0039] To overcome the shortcomings of the existing technology, the present invention provides a cross-media retrieval method based on deep learning and consistent expression space learning. The method targets mutual retrieval between the two modalities of multimedia information, image and text, thereby realizing cross-media retrieval, and greatly improves retrieval accuracy.
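Mutual retrieval means that an image can serve as a query against a set of texts, and a text as a query against a set of images. A minimal sketch of that ranking step follows, assuming both modalities are already represented as vectors in one shared space and assuming cosine similarity as the score. The excerpt does not state which similarity measure the invention uses, so `cosine`, `rank` and the toy vectors are hypothetical illustrations only.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity (assumed score); returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return dot / (na * nb)


def rank(query: Sequence[float], gallery: List[Sequence[float]]) -> List[int]:
    """Return gallery indices ordered from most to least similar to the query."""
    scores = [cosine(query, item) for item in gallery]
    return sorted(range(len(gallery)), key=lambda i: scores[i], reverse=True)


# Hypothetical toy vectors, assumed to already live in one shared space.
image_vecs = [[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]]
text_vecs = [[0.9, 0.1], [0.1, 0.9]]

# Image -> text: rank the captions for image 0.
print(rank(image_vecs[0], text_vecs))  # [0, 1]
# Text -> image: rank the images for caption 1.
print(rank(text_vecs[1], image_vecs))  # [1, 2, 0]
```

The same `rank` function serves both retrieval directions, which only works because the query and the gallery share one space and one dimension.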

[0040] The main steps of the method of the present invention are as follows:

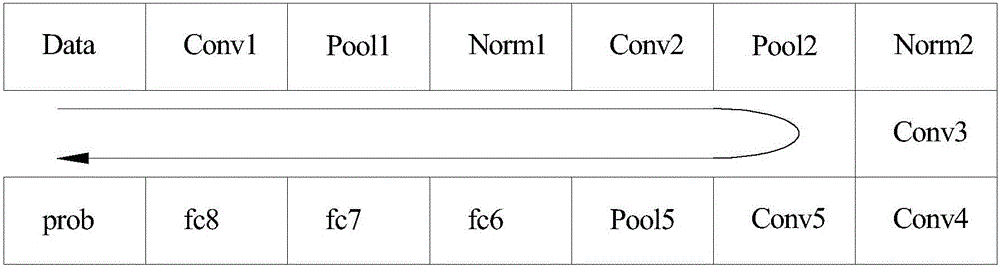

[0041] 1) After acquiring the image data and the text data, extract the image feature I and the text feature T respectively, to obtain the image feature space and the text feature space;
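This excerpt does not specify the deep feature extractors. The sketch below therefore uses deliberately simple, hypothetical stand-ins, a bag-of-words count vector for T and a normalised grey-level histogram for I. The names `text_feature` and `image_feature` and the toy vocabulary are illustrative, not from the source. The only purpose is to show the shape of step 1: each modality produces a vector in its own feature space, and the two spaces generally differ in dimension.

```python
from collections import Counter
from typing import Dict, List, Sequence


def text_feature(tokens: Sequence[str], vocab: Dict[str, int]) -> List[float]:
    """Hypothetical bag-of-words stand-in for a deep text feature T."""
    vec = [0.0] * len(vocab)
    for tok, n in Counter(tokens).items():
        if tok in vocab:  # out-of-vocabulary words are ignored
            vec[vocab[tok]] = float(n)
    return vec


def image_feature(pixels: Sequence[int], bins: int = 4) -> List[float]:
    """Hypothetical grey-level histogram stand-in for a deep image feature I.

    pixels: grey values in [0, 255]; the histogram is normalised to sum to 1.
    """
    hist = [0.0] * bins
    for p in pixels:
        hist[min(p * bins // 256, bins - 1)] += 1.0
    total = sum(hist)
    return [h / total for h in hist] if total else hist


vocab = {"dog": 0, "grass": 1, "ball": 2, "sky": 3, "runs": 4}
T = text_feature("a dog runs on the grass".split(), vocab)
I = image_feature([10, 20, 200, 250, 130, 90])
print(len(I), len(T))  # 4 5: the two feature spaces have different dimensions
```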

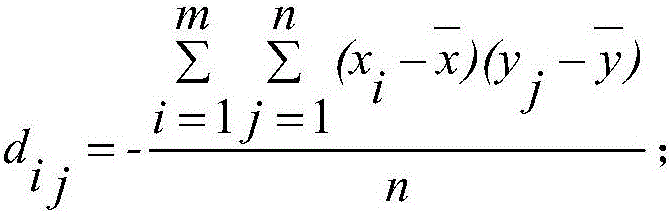

[0042] 2) Map the image feature space to a new image feature space $U_I$ and the text feature space to a new text feature space $U_T$, such that the new image feature space $U_I$ and the new text feature space $U_T$ are isomorphic;
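This excerpt does not say how the mapping into isomorphic spaces is learned. One common way to obtain such spaces is a pair of linear projections, one per modality, that send features of different dimensions into a space of the same dimension k. Canonical correlation analysis is one well-known method for learning them. The sketch below assumes that linear form. The projection matrices `W_I` and `W_T` are made-up values rather than learned ones, and `project` is a hypothetical helper.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def project(x: Sequence[float], W: Matrix) -> List[float]:
    """Map a d-dimensional feature x to k dimensions: u = W^T x, with W of shape d x k."""
    if len(x) != len(W):
        raise ValueError("feature dimension does not match projection rows")
    k = len(W[0])
    return [sum(x[i] * W[i][j] for i in range(len(x))) for j in range(k)]


# Hypothetical projections (d_I = 4, d_T = 5, shared k = 2). In practice they
# would be learned from paired image-text data; these values are made up.
W_I = [[1.0, 0.0], [0.5, 0.0], [0.0, 0.5], [0.0, 1.0]]
W_T = [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [0.0, 0.5], [1.0, 0.0]]

u_I = project([0.25, 0.25, 0.25, 0.25], W_I)  # image feature -> U_I
u_T = project([1.0, 1.0, 0.0, 0.0, 1.0], W_T)  # text feature  -> U_T
print(u_I, u_T)  # both vectors now have the same dimension k = 2
```

With this linear assumption, "isomorphic" is read as $U_I$ and $U_T$ being the same k-dimensional vector space. That is what makes direct cross-modal similarity comparisons possible.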

[00...

