Human behavior identification method based on depth information

A depth-information-based recognition method, applied in the field of computer vision and image processing, that addresses the lack of spatial structure information.

Embodiment Construction

[0025] The human behavior recognition method based on depth information provided by the present invention will be described in detail below in conjunction with the accompanying drawings and specific embodiments.

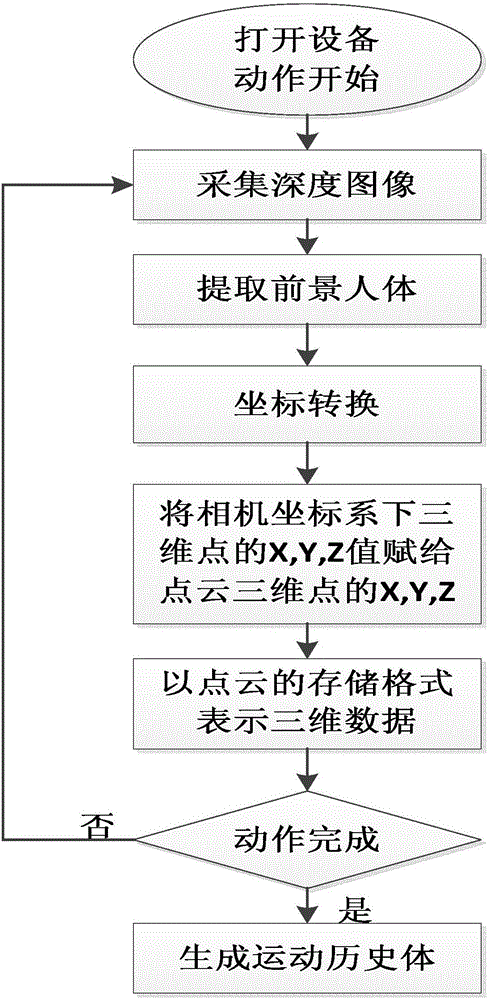

[0026] The human behavior recognition method based on depth information provided by the present invention includes the following steps in order:

[0027] (1) As shown in Figure 1, a depth camera is used to collect a series of depth images of several people performing multiple different actions against the same background, where each action performed by each person corresponds to multiple frames of depth images. These depth images are then transmitted to a computer connected to the depth camera. Because the collected depth images contain background in addition to the human body, the depth image of the human body region is extracted as the foreground from each collected frame on the computer; by setting the depth value threshold (0-2500m...
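
The foreground extraction in step (1) can be illustrated with a minimal sketch, assuming that a pixel belongs to the human body region when its depth value lies inside a chosen range and that background pixels are set to 0. The function name `extract_foreground`, the parameters `near` and `far`, and the representation of a frame as a list of rows are hypothetical and do not come from the source. The threshold range given in the text is truncated, so the values used below are illustrative only.

```python
def extract_foreground(depth_frame, near, far):
    """Keep pixels whose depth lies in [near, far]; set all others to 0.

    depth_frame: one depth image as a list of rows of depth values
                 (hypothetical representation, chosen for illustration).
    near, far:   depth threshold bounds, in the same unit as the frame.
    """
    return [
        [d if near <= d <= far else 0 for d in row]
        for row in depth_frame
    ]


def extract_sequence(frames, near, far):
    """Apply the same threshold to every frame of one recorded action."""
    return [extract_foreground(frame, near, far) for frame in frames]


# Illustrative values only; the source's actual threshold is truncated.
frame = [
    [0, 800, 3000],
    [1200, 2600, 500],
]
foreground = extract_foreground(frame, near=500, far=2000)
```

Because one action corresponds to multiple frames, `extract_sequence` applies the same bounds to each frame, so that the extracted human body regions stay comparable across the sequence.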
