Optical-field-camera-based realization method for three-dimensional scene recording and broadcasting

A three-dimensional scene technology and realization method, applied in the fields of image communication, selective content distribution, and electrical components, among others.



Embodiment Construction

[0016] The technical solution of this patent will be further described in detail below in conjunction with specific embodiments.

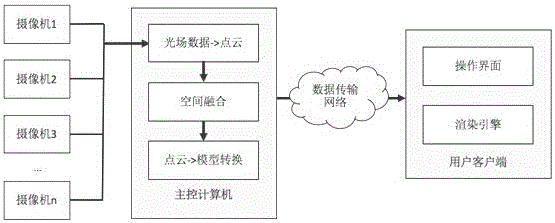

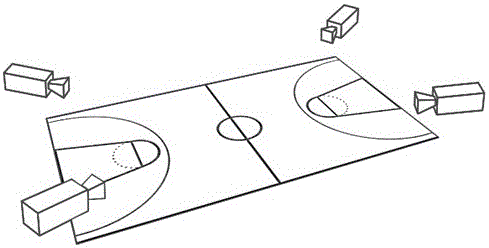

[0017] Referring to Figures 1-2, a method for realizing 3D scene recording and broadcasting based on light field cameras comprises the following steps:

[0018] (1) Multiple light field cameras placed at different angles capture light field information simultaneously; the main control computer collects this information and converts it into a depth point cloud with RGB colors;

[0019] (2) The main control computer synchronizes, fuses, and reconstructs the data from the multiple light field cameras into dynamic 3D scene data, which represents the scene in the form of a point cloud;

[0020] (3) The main control computer performs mesh optimization on the point cloud data, converting it into a 3D model with texture information;

[0021] (4) The main control computer transmits the 3D model data to the user client;

[0022] (5) The user c...
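
Steps (1) and (2) above can be illustrated with a minimal Python sketch. It is not the patent's implementation. It assumes that a per-pixel depth map has already been derived from each camera's light field data, that each camera follows a pinhole model with known intrinsics (`fx`, `fy`, `cx`, `cy`), and that a camera-to-world rotation and translation are available from calibration. The names `Camera`, `depth_to_points`, `to_world`, and `fuse_frames` are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

# One colored point: x, y, z in meters plus 8-bit r, g, b.
Point = Tuple[float, float, float, int, int, int]


@dataclass
class Camera:
    """Hypothetical calibration record for one light field camera."""
    fx: float  # focal length in pixels (assumed pinhole model)
    fy: float
    cx: float  # principal point in pixels
    cy: float
    rotation: Sequence[Sequence[float]]  # 3x3 camera-to-world rotation
    translation: Sequence[float]         # camera position in world frame


def depth_to_points(depth, rgb, cam: Camera) -> List[Point]:
    """Back-project a depth map into a colored point cloud (step 1)."""
    points: List[Point] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:  # skip pixels with no valid depth
                continue
            x = (u - cam.cx) * z / cam.fx
            y = (v - cam.cy) * z / cam.fy
            r, g, b = rgb[v][u]
            points.append((x, y, z, r, g, b))
    return points


def to_world(points: List[Point], cam: Camera) -> List[Point]:
    """Apply the camera-to-world rigid transform to every point."""
    R, t = cam.rotation, cam.translation
    out: List[Point] = []
    for x, y, z, r, g, b in points:
        wx = R[0][0] * x + R[0][1] * y + R[0][2] * z + t[0]
        wy = R[1][0] * x + R[1][1] * y + R[1][2] * z + t[1]
        wz = R[2][0] * x + R[2][1] * y + R[2][2] * z + t[2]
        out.append((wx, wy, wz, r, g, b))
    return out


def fuse_frames(frames) -> List[Point]:
    """Merge simultaneously captured frames into one cloud (step 2).

    `frames` is a list of (depth, rgb, camera) tuples taken at the
    same instant; synchronization itself is assumed to happen upstream.
    """
    cloud: List[Point] = []
    for depth, rgb, cam in frames:
        cloud.extend(to_world(depth_to_points(depth, rgb, cam), cam))
    return cloud
```

Running `fuse_frames` once per synchronized capture instant would yield a sequence of point clouds, one per frame of the dynamic scene. The mesh optimization of step (3) is not covered by this sketch.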
