Method for translating characters in a picture

A technology for translating text in pictures, applied in the field of image processing. It addresses two problems: text in pictures is inconvenient to extract, and the typesetting format of the picture is difficult to preserve. It improves translation accuracy and is easy to implement and operate.


Examples

Embodiment 1

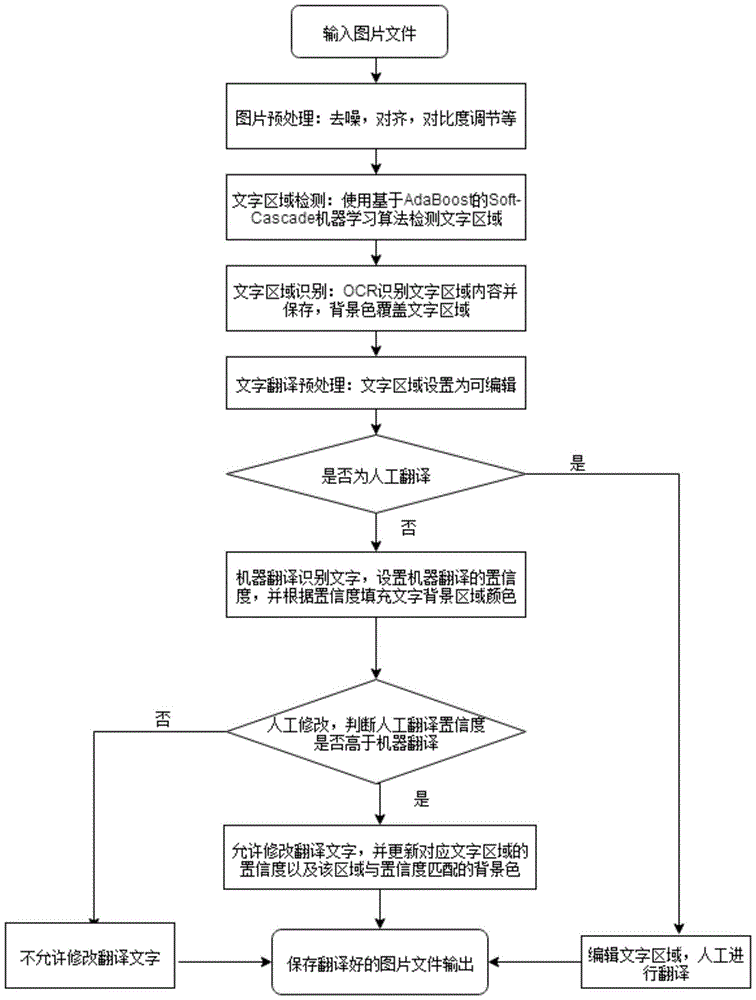

[0029] As shown in Figure 1.

[0030] A method for translating text in a picture, comprising the following steps:

[0031] 1) Image preprocessing: denoise the picture, correct the skew of the text content, and adjust the contrast. Pictures from scanners or cameras generally contain noise points, their text content may be skewed, and their brightness and contrast vary considerably. To improve the accuracy of subsequent text recognition, the picture is preprocessed: the noise points are removed, the picture is corrected so that its upper and lower edges are horizontal and its text lines run horizontally, and the contrast is adjusted so that the text can be clearly distinguished from the background.
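A minimal sketch of two of these preprocessing operations, assuming an 8-bit grayscale picture held as a list of pixel rows: a 3x3 median filter for the noise points and a linear min-max stretch for the contrast. The function names are hypothetical, the patent does not say which filter or contrast mapping it uses, and skew correction is omitted to keep the example short.

```python
from statistics import median

def median_denoise(img):
    """3x3 median filter for an 8-bit grayscale image (list of pixel rows).

    Isolated noise points are replaced by the median of their neighbourhood;
    at the borders only the neighbours that exist are used.
    """
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [img[j][i]
                      for j in range(max(0, y - 1), min(h, y + 2))
                      for i in range(max(0, x - 1), min(w, x + 2))]
            row.append(int(median(window)))
        out.append(row)
    return out

def stretch_contrast(img, lo=0, hi=255):
    """Linearly map the darkest pixel to `lo` and the brightest to `hi`."""
    flat = [p for row in img for p in row]
    pmin, pmax = min(flat), max(flat)
    if pmax == pmin:  # uniform image: nothing to stretch
        return [row[:] for row in img]
    scale = (hi - lo) / (pmax - pmin)
    return [[round(lo + (p - pmin) * scale) for p in row] for row in img]

def preprocess(img):
    """Denoise first so that isolated noise points do not distort the stretch."""
    return stretch_contrast(median_denoise(img))
```

For example, `stretch_contrast([[50, 100], [150, 200]])` maps the pixel range 50..200 onto 0..255, and a single bright noise pixel in an otherwise uniform patch is removed by `median_denoise`.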

[0032] 2) Text area detection: the position and size of the text areas in a picture are not fixed, so a detector generated by a machine learning method detects and marks the text areas and non-text...
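The scan-and-mark structure of this step can be sketched with a sliding window, assuming the trained detector is available as a function that labels one image patch as text or non-text. The window size, the stride, and `variance_classifier` (a crude high-contrast test) are hypothetical placeholders for the machine-learned detector, which the source does not specify here.

```python
def crop(img, x, y, w, h):
    """Return the w x h patch whose top-left corner is (x, y)."""
    return [row[x:x + w] for row in img[y:y + h]]

def detect_text_windows(img, classify, win_w, win_h, stride):
    """Slide a win_w x win_h window over the image with the given stride.

    Windows that `classify` accepts are returned as text areas (x, y, w, h);
    every other window is treated as non-text.
    """
    h, w = len(img), len(img[0])
    boxes = []
    for y in range(0, h - win_h + 1, stride):
        for x in range(0, w - win_w + 1, stride):
            if classify(crop(img, x, y, win_w, win_h)):
                boxes.append((x, y, win_w, win_h))
    return boxes

def variance_classifier(patch, threshold=1000.0):
    """Hypothetical stand-in for the learned detector: text strokes give
    high pixel variance, plain background gives low variance."""
    flat = [p for row in patch for p in row]
    mean = sum(flat) / len(flat)
    var = sum((p - mean) ** 2 for p in flat) / len(flat)
    return var > threshold
```

On a 4x8 image whose left half is plain white and whose right half alternates black and white columns, a 4x4 window with stride 4 marks only the right half: `detect_text_windows(img, variance_classifier, 4, 4, 4)` returns `[(4, 0, 4, 4)]`.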

Embodiment 2

[0042] According to the method for translating text in a picture described in Embodiment 1, the difference is that the text area detection method in step 2) is the AdaBoost-based Soft-Cascade algorithm. This algorithm combines several weak classifiers into a strong classifier, cascades the weak classifiers, and sets a detection threshold at each stage so that negative samples are rejected early, which speeds up detection. AdaBoost trains different weak classifiers on the same training set and combines them according to certain rules into a final strong classifier. A weak classifier is one whose classification accuracy is only slightly above 50%, that is, only slightly better than random guessing, whereas the final strong classifier achieves a much higher accuracy, far better than any single weak...
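A compact sketch of the idea in pure Python, assuming decision stumps over numeric feature vectors as the weak classifiers. `adaboost` performs standard discrete AdaBoost; the soft cascade then checks the running weighted score after every weak classifier and rejects a sample as soon as the score drops below that stage's threshold. The threshold calibration is an assumption made for illustration: each stage threshold is the lowest partial score reached by any positive training sample, so no training positive is rejected early. The patent does not state how its per-stage thresholds are set.

```python
import math

def stump_predict(x, f, thr, pol):
    """Weak classifier: +pol if feature f reaches the threshold, else -pol."""
    return pol if x[f] >= thr else -pol

def train_stump(X, y, w):
    """Pick the stump with the lowest weighted error: (err, f, thr, pol)."""
    best = None
    for f in range(len(X[0])):
        for thr in sorted({x[f] for x in X}):
            for pol in (1, -1):
                err = sum(wi for xi, yi, wi in zip(X, y, w)
                          if stump_predict(xi, f, thr, pol) != yi)
                if best is None or err < best[0]:
                    best = (err, f, thr, pol)
    return best

def adaboost(X, y, rounds):
    """Discrete AdaBoost: returns a list of (alpha, f, thr, pol) weak classifiers."""
    n = len(X)
    w = [1.0 / n] * n
    model = []
    for _ in range(rounds):
        err, f, thr, pol = train_stump(X, y, w)
        err = min(max(err, 1e-10), 1 - 1e-10)  # avoid log(0) on perfect stumps
        alpha = 0.5 * math.log((1 - err) / err)
        model.append((alpha, f, thr, pol))
        # Increase the weight of misclassified samples, decrease the rest.
        w = [wi * math.exp(-alpha * yi * stump_predict(xi, f, thr, pol))
             for xi, yi, wi in zip(X, y, w)]
        total = sum(w)
        w = [wi / total for wi in w]
    return model

def partial_scores(model, x):
    """Running weighted score after each weak classifier."""
    s, scores = 0.0, []
    for alpha, f, thr, pol in model:
        s += alpha * stump_predict(x, f, thr, pol)
        scores.append(s)
    return scores

def calibrate_rejection(model, X, y):
    """Assumed calibration: stage t threshold = lowest partial score of any
    positive training sample at stage t, so no training positive is rejected."""
    pos = [partial_scores(model, x) for x, yi in zip(X, y) if yi == 1]
    return [min(p[t] for p in pos) for t in range(len(model))]

def soft_cascade_classify(model, reject, x):
    """Return (label, number of weak classifiers evaluated)."""
    s = 0.0
    for t, (alpha, f, thr, pol) in enumerate(model):
        s += alpha * stump_predict(x, f, thr, pol)
        if s < reject[t]:
            return -1, t + 1  # early rejection as a negative (non-text) sample
    return (1 if s >= 0 else -1), len(model)
```

With training features `[[0.1], [0.2], [0.3], [0.7], [0.8], [0.9]]`, labels `[-1, -1, -1, 1, 1, 1]` and three boosting rounds, `soft_cascade_classify(model, reject, [0.1])` rejects the sample after the first weak classifier, while positive samples pass through every stage. Early rejection is where the speed-up comes from: most windows in a picture contain no text and exit after only a few stages.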

Embodiment 3

[0044] According to the method for translating text in a picture described in Embodiment 1, the difference is that the specific machine translation method in step 4) is to call the Baidu Translate API to obtain preliminary results and then refine those preliminary results by manual translation.
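The two-stage workflow of this embodiment can be sketched as follows. `machine_translate` is a hypothetical placeholder for the call to the Baidu Translate API, whose request format the source does not give, and `corrections` stands for a reviewer's edits keyed by segment index; both names are assumptions for illustration.

```python
def translate_with_review(segments, machine_translate, corrections):
    """Two-stage translation of recognized text segments.

    Stage 1: `machine_translate` (placeholder for an online MT service)
    produces preliminary results. Stage 2: a reviewer's manual corrections,
    keyed by segment index, replace the preliminary text where given.
    """
    preliminary = [machine_translate(s) for s in segments]
    final = [corrections.get(i, text) for i, text in enumerate(preliminary)]
    return preliminary, final
```

Returning the preliminary list alongside the final one makes it easy to see which segments the reviewer changed before the translated text is written back into the picture.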
