Three-dimensional model reconstruction method and system

A three-dimensional model reconstruction technology, applied in 3D modeling, image data processing, instruments, etc., that improves accuracy, reduces data redundancy, and reduces motion blur.

Embodiment Construction

[0050] The present invention addresses a defect of existing 3D model reconstruction processes: the user must manually mark key identification points before a 3D model can be created, which is inconvenient and yields low accuracy. The proposed reconstruction method performs image stitching and fusion without requiring the user to manually select key identification points in the images, thereby improving both the accuracy and the speed with which the 3D model is obtained.

[0051] The invention will now be described in detail in conjunction with the accompanying drawings and specific embodiments.

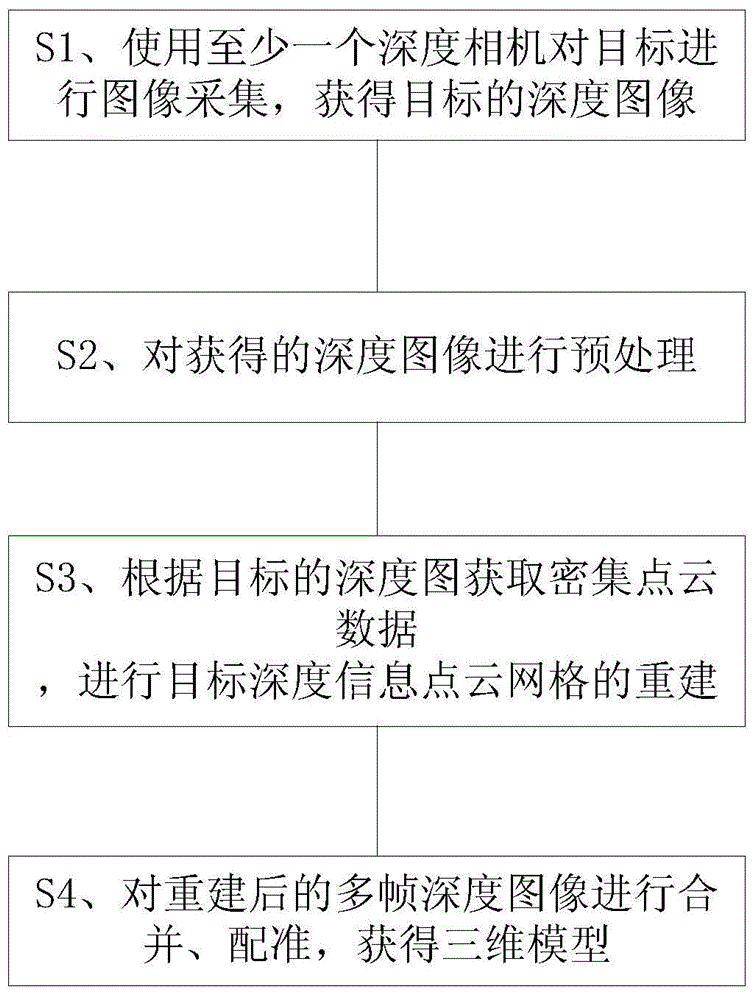

[0052] Figure 1 shows a flowchart of a three-dimensional model reconstruction method provided by a preferred embodiment of the present invention. In this embodiment, step S1 is performed first: at least one depth camera captures the target to be modeled continuously and from multiple angles, generating multiple depth maps. The depth camera used in t...
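Paragraph [0052] is truncated before it explains how the depth maps from step S1 are processed. Purely as a hedged illustration of what each captured depth map encodes, the sketch below back-projects a depth map into a camera-frame point cloud under an assumed pinhole camera model, producing one cloud per viewing angle. The `Intrinsics` class, the `depth_map_to_points` and `acquire_views` helpers, and the `depth_scale` parameter are hypothetical and are not taken from the patent.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float, float]


@dataclass
class Intrinsics:
    """Hypothetical pinhole intrinsics of the depth camera, in pixels."""
    fx: float  # focal length along the image x axis
    fy: float  # focal length along the image y axis
    cx: float  # principal point, column
    cy: float  # principal point, row


def depth_map_to_points(depth: List[List[float]], k: Intrinsics,
                        depth_scale: float = 1.0) -> List[Point]:
    """Back-project every valid pixel of one depth map into a 3D point.

    depth[v][u] is the raw depth at row v, column u; 0 marks a missing
    measurement. depth_scale (an assumed parameter) converts raw units to
    metres, e.g. 0.001 for millimetre depth images.
    """
    points: List[Point] = []
    for v, row in enumerate(depth):
        for u, raw in enumerate(row):
            if raw <= 0:
                continue  # skip holes where the sensor returned no depth
            z = raw * depth_scale
            x = (u - k.cx) * z / k.fx  # pinhole model: u = fx * x / z + cx
            y = (v - k.cy) * z / k.fy  # pinhole model: v = fy * y / z + cy
            points.append((x, y, z))
    return points


def acquire_views(frames: List[List[List[float]]], k: Intrinsics,
                  depth_scale: float = 1.0) -> List[List[Point]]:
    """Sketch of step S1's output: one camera-frame point cloud per depth map."""
    return [depth_map_to_points(d, k, depth_scale) for d in frames]


if __name__ == "__main__":
    cam = Intrinsics(fx=2.0, fy=2.0, cx=1.0, cy=1.0)
    # A tiny 2x2 depth map in metres; the top-left pixel is a hole.
    print(depth_map_to_points([[0.0, 1.0], [1.0, 2.0]], cam))
```

Each cloud is expressed in the frame of the camera pose at which it was captured; fusing the views into a single model additionally requires estimating those poses, a step the truncated text does not describe.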
