Three-dimensional video depth map coding method based on just distinguishable parallax error estimation

This invention concerns depth map coding for 3D video, specifically depth map coding based on just-identifiable parallax error estimation. It addresses problems such as distortion and the inability to guarantee rate-distortion performance, and it reduces the bit rate while improving subjective quality.

Embodiment Construction

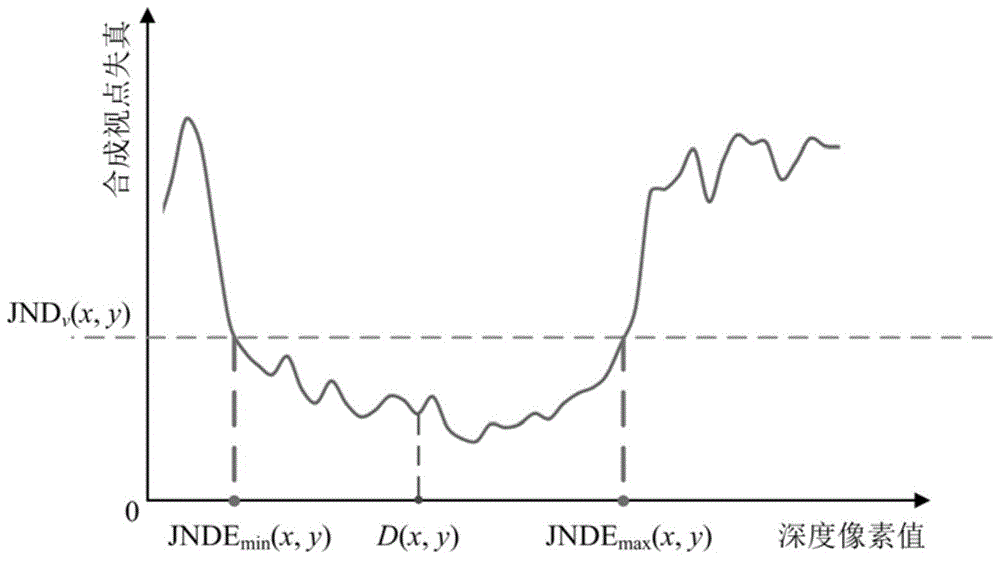

[0062] A 3D video depth map coding method based on just-identifiable parallax error estimation, comprising the following steps:

[0063] (1) Input a frame of 3D video depth map and the texture image corresponding to the 3D video depth map;

[0064] (2) Synthesize the texture image of the virtual viewpoint from the 3D video depth map and its corresponding texture image;

[0065] Steps (1) and (2) are performed using existing technologies. Each frame of 3D video has a depth map and a corresponding texture image.

[0066] (3) Calculate the just-identifiable error map of the texture image of the virtual viewpoint, which specifically includes the following steps:

[0067] 3-1. According to the response characteristics of the human visual system, calculate the background brightness masking effect value $T_l(x, y)$ at each pixel (x, y) in the texture image of the virtual viewpoint;

[0068] T l ( ...
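The expression for T_l(x, y) is cut off in the text above, so the following is only a minimal sketch under a stated assumption, not the patent's formula. It assumes a widely cited background luminance masking model from the just-noticeable-distortion literature: a 5x5 weighted average gives the background luminance, and a piecewise function maps that value to a visibility threshold. The kernel weights, the constants 17, 3, 127 and 128, the 8-bit luminance range, and all function names are assumptions introduced for illustration.

```python
import math

# Assumed 5x5 weighted low-pass kernel (weights sum to 32); not taken from the source.
KERNEL = [
    [1, 1, 1, 1, 1],
    [1, 2, 2, 2, 1],
    [1, 2, 0, 2, 1],
    [1, 2, 2, 2, 1],
    [1, 1, 1, 1, 1],
]


def background_luminance(img, x, y):
    """Weighted mean luminance around (x, y); border pixels are replicated."""
    height, width = len(img), len(img[0])
    total = 0
    for j in range(5):
        for i in range(5):
            yy = min(max(y + j - 2, 0), height - 1)
            xx = min(max(x + i - 2, 0), width - 1)
            total += KERNEL[j][i] * img[yy][xx]
    return total / 32.0


def luminance_masking(bg):
    """Assumed piecewise threshold for a background luminance bg in [0, 255]."""
    if bg <= 127:
        # Dark backgrounds: threshold falls as the background brightens.
        return 17.0 * (1.0 - math.sqrt(bg / 127.0)) + 3.0
    # Bright backgrounds: threshold rises linearly.
    return 3.0 / 128.0 * (bg - 127.0) + 3.0


def luminance_masking_map(img):
    """Apply the assumed model at every pixel of img (rows of 8-bit luminance values)."""
    return [
        [luminance_masking(background_luminance(img, x, y)) for x in range(len(img[0]))]
        for y in range(len(img))
    ]
```

Under this assumed model the threshold is largest in very dark regions and smallest around mid-gray, which matches the general observation that the eye tolerates larger errors against dark or very bright backgrounds.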
