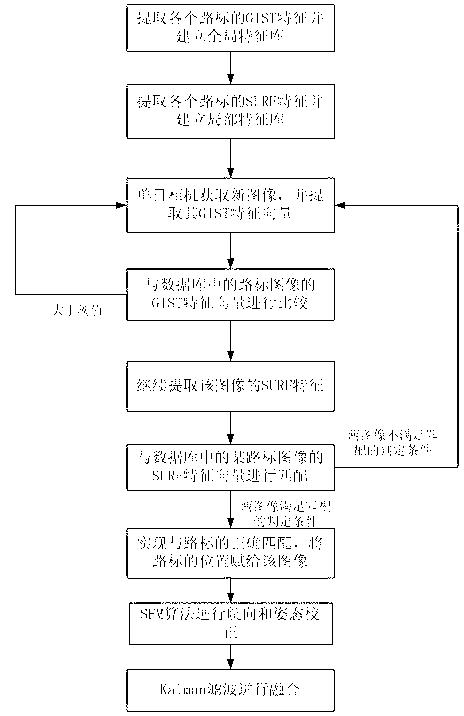

Monocular natural vision landmark assisted mobile robot positioning method

A mobile robot positioning method, applied in the fields of inertial navigation and image processing, that addresses problems such as heavy computation, low positioning accuracy, and error accumulation.



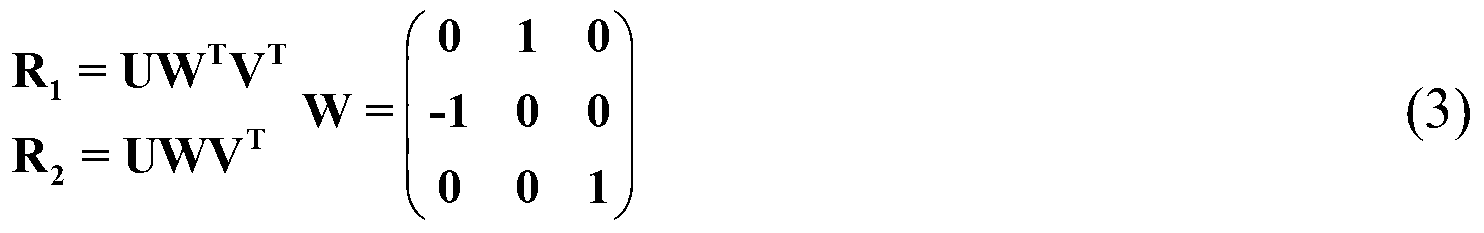


Embodiment

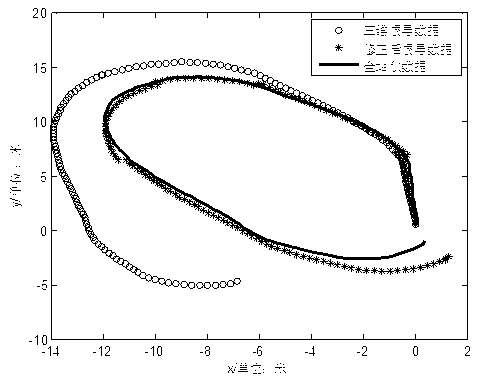

[0057] The experiment uses a Pioneer 3 robot as the platform for online data collection and algorithm testing. The platform carries a PointGrey Bumblebee stereo camera, of which only one camera is used in the experiment. Two NovAtel GPS receivers and one NV-IMU200 IMU are also mounted on the vehicle. The GPS runs at up to 20 Hz, the camera captures up to 10 frames per second, and the IMU runs at 100 Hz. With RTK, the GPS positioning accuracy can reach 2 cm. The dual GPS setup, with a baseline of 50 cm, measures the camera orientation during landmark collection and the initial heading of the vehicle body. The experimental environment is an outdoor grass field, and a Sokkia SRX1 total station system accurately locates the vehicle body to provide the ground truth for the measurements. The total station (TS) locates the vehicle body by tracking the omnidirectional prism installed on it, and the accu...
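The paragraph above does not say how the heading is derived from the two GPS receivers. As a minimal sketch, assuming both antenna positions are already expressed in a local east/north frame in meters, the heading can be taken as the direction of the vector from the rear antenna to the front antenna. The function names, the antenna layout, and the simple worst-case error bound are illustrative assumptions, not details from the patent; only the 2 cm accuracy and 50 cm baseline come from the text.

```python
import math


def heading_from_dual_gps(rear_en, front_en):
    """Heading in degrees, clockwise from north, of the rear-to-front vector.

    rear_en, front_en: (east, north) antenna positions in meters
    (hypothetical local frame; the source does not specify one).
    """
    d_east = front_en[0] - rear_en[0]
    d_north = front_en[1] - rear_en[1]
    if d_east == 0.0 and d_north == 0.0:
        raise ValueError("antenna positions coincide; heading is undefined")
    # atan2(east, north) gives a compass-style angle measured from north.
    return math.degrees(math.atan2(d_east, d_north)) % 360.0


def worst_case_heading_error_deg(position_error_m, baseline_m):
    """Rough bound: both antennas displaced by position_error_m in opposite
    directions, perpendicular to the baseline (an assumed worst case)."""
    return math.degrees(math.atan2(2.0 * position_error_m, baseline_m))


if __name__ == "__main__":
    # Antennas 0.5 m apart along the east axis (assumed layout).
    print(heading_from_dual_gps((0.0, 0.0), (0.5, 0.0)))
    # Best-case RTK accuracy (2 cm) and baseline (50 cm) from the text.
    print(worst_case_heading_error_deg(0.02, 0.5))
```

Under these assumptions, the bound for a 2 cm position error on a 50 cm baseline comes out at roughly 4.6 degrees, which suggests why a short baseline limits how precisely dual GPS alone can fix the initial heading.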

