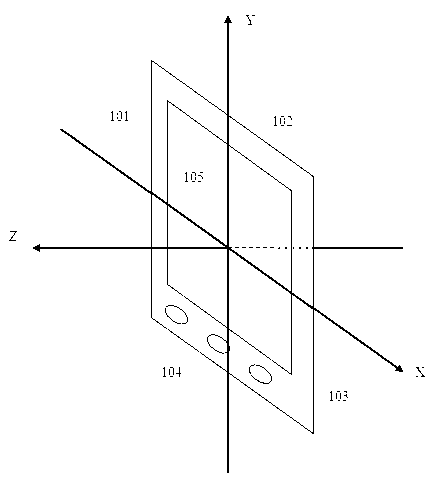

Man-machine interaction method and equipment based on acceleration sensor and motion recognition

A human-computer interaction device based on acceleration sensor technology, applied in the field of human-computer interaction. It addresses the problems of missing tap detection, frequent misoperation, and low recognition rate, and achieves an improved user experience, a low misjudgment rate, and a high recognition rate.

Examples

Embodiment 1

[0054] When pictures are browsed on a mobile phone, acceleration data is collected by the acceleration sensor, and a classifier pre-trained with the BOOST algorithm identifies in real time whether a tapping action has occurred. When a tap is detected, the coordinate axis on which the tap occurred and the current orientation of the device (horizontal or vertical) determine whether the tap came from the left or from the right. For a tap from the left, the previous picture is displayed according to the preset (the preset may instead display the next picture); for a tap from the right, the next picture is displayed according to the preset (the preset may instead display the previous picture).
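The direction decision in this embodiment can be sketched as follows. This is a minimal illustration, not the patented implementation: the names `Orientation`, `Side`, `tap_side`, and `next_index` are hypothetical, and the axis and sign conventions (device x axis pointing to the right edge in the vertical orientation, the device top edge on the user's left in the horizontal orientation, a left tap producing a positive lateral peak) are assumptions that would need to be checked against the actual sensor.

```python
from enum import Enum


class Orientation(Enum):
    PORTRAIT = "portrait"    # "vertical" in the text
    LANDSCAPE = "landscape"  # "horizontal" in the text


class Side(Enum):
    LEFT = "left"
    RIGHT = "right"


def tap_side(peak, orientation):
    """Map the peak acceleration of a detected tap to the tapped side.

    peak is (ax, ay, az) in the device frame. The axis and sign
    conventions here are assumptions, not taken from the patent text.
    Returns None when the tap is not dominated by the lateral axis.
    """
    ax, ay, az = peak
    if orientation is Orientation.PORTRAIT:
        lateral, others = ax, (ay, az)
    else:
        # Assumed: the device top edge is on the user's left.
        lateral, others = -ay, (ax, az)
    if abs(lateral) <= max(abs(o) for o in others):
        return None
    # Assumed: a tap on the left edge pushes the device to the right.
    return Side.LEFT if lateral > 0 else Side.RIGHT


def next_index(index, side, count, left_is_previous=True):
    """Apply the preset: choose the previous or next picture, clamped."""
    step = -1 if (side is Side.LEFT) == left_is_previous else 1
    return min(max(index + step, 0), count - 1)
```

The `left_is_previous` flag corresponds to the preset mentioned in the text, which may assign either direction to either side.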

Embodiment 2

[0056] When a video is watched on a tablet computer, motion data is collected by the acceleration sensor and the gyroscope, more accurate acceleration data is obtained through preprocessing, and a classifier pre-trained with the SVM algorithm identifies in real time whether a tapping action has occurred. When a tap is detected, the coordinate axis on which the tap occurred and the current orientation of the device (horizontal or vertical) determine whether the tap came from the left or from the right. If the tap is from the left, playback jumps, according to the preset, to N seconds after the current moment (N is configurable and may be positive or negative). If the tap is from the right, playback jumps, according to the preset, to N seconds before the current moment.
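The seek behaviour can be illustrated with a short sketch. It assumes that a left tap jumps N seconds forward and a right tap jumps N seconds back; the function name `seek_target` and the clamping to the video bounds are hypothetical additions for illustration and are not stated in the text.

```python
def seek_target(position_s, duration_s, side, n_seconds):
    """Return the new playback position in seconds after a tap.

    side is "left" or "right". Assumed mapping: a left tap moves
    n_seconds forward, a right tap moves n_seconds back. A negative
    n_seconds therefore reverses both directions.
    """
    if side not in ("left", "right"):
        raise ValueError("side must be 'left' or 'right'")
    offset = n_seconds if side == "left" else -n_seconds
    # Clamping is an assumption: keep the target inside the video.
    return min(max(position_s + offset, 0.0), duration_s)
```

For example, with N set to 10, a left tap at 60 seconds yields a target of 70 seconds, and a right tap yields 50 seconds.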

Embodiment 3

[0058] When the phone is in a pocket and it is inconvenient to take it out to check the time, the user can tap the back of the phone three times in a row. The phone's acceleration sensor collects the acceleration data, and a classifier pre-trained with the HMM algorithm identifies in real time whether a tapping action has occurred. When the tapping action is detected, the phone reads out the current time.
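One way to turn individual tap detections into the "three times in a row" gesture is to count taps that arrive close together in time. The sketch below assumes the classifier reports each tap with a timestamp; the class `TripleTapDetector`, the 0.5 second gap limit, and the helper `spoken_time` are hypothetical and are not specified in the text.

```python
from datetime import datetime


class TripleTapDetector:
    """Fire once when enough taps arrive in quick succession."""

    def __init__(self, max_gap_s=0.5, taps_required=3):
        self.max_gap_s = max_gap_s          # assumed gap limit
        self.taps_required = taps_required
        self._times = []

    def on_tap(self, t):
        """Record a tap at time t (seconds); return True on a full gesture."""
        if self._times and t - self._times[-1] > self.max_gap_s:
            self._times.clear()  # too slow: start a new sequence
        self._times.append(t)
        if len(self._times) >= self.taps_required:
            self._times.clear()  # reset so the gesture fires only once
            return True
        return False


def spoken_time(now):
    """Build the sentence that a text-to-speech engine would read out."""
    return f"The time is {now:%H:%M}"
```

When `on_tap` returns `True`, the string from `spoken_time(datetime.now())` could be passed to whatever speech output the phone provides.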
