Method for filling indoor light detection and ranging (LiDAR) missing data based on Kinect

A Kinect-based technique for filling missing data in indoor LiDAR scans. It addresses the limited scanning range of LiDAR equipment and its inability to complete data collection in small areas. Scanning those areas with a low-priced Kinect device allows the collection work to be completed while maintaining the integrity of the scene data.



Specific Embodiment

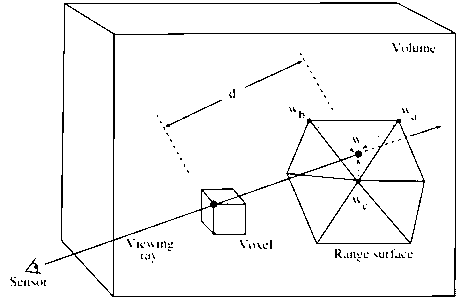

[0059] Step 1: key frame extraction during the Kinect scanning process, to obtain relatively sparse scanning data. The Kinect device is used to scan the areas that the LiDAR device missed during its single-point scanning process. Because the Kinect device collects data at 30 frames per second and adjacent frames overlap heavily, a key frame extraction method is used to retain only the locally effective scanning data while keeping the data for the missing scene complete, which reduces post-processing time. The present invention decides when to add a key frame by directly calculating the angular deflection and translation of the camera.
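The key frame test described in Step 1 can be sketched as follows. This is a minimal illustration, not the patent's implementation: it assumes each frame already has an estimated camera pose (a 3x3 rotation matrix and a translation vector), and the function names and the threshold values `max_angle_rad` and `max_shift_m` are hypothetical choices that do not come from the source.

```python
import math


def relative_rotation_angle(R_a, R_b):
    """Angle in radians of the rotation that takes orientation R_a to R_b."""
    # trace(R_a^T R_b) equals the sum of element-wise products of the matrices.
    trace = sum(R_a[i][j] * R_b[i][j] for i in range(3) for j in range(3))
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos_theta = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.acos(cos_theta)


def translation_distance(t_a, t_b):
    """Euclidean distance between two camera positions."""
    return math.sqrt(sum((b - a) ** 2 for a, b in zip(t_a, t_b)))


def select_keyframes(poses, max_angle_rad=math.radians(10.0), max_shift_m=0.15):
    """Return indices of frames kept as key frames.

    poses: list of (R, t) pairs, one per frame (assumed input format).
    A frame becomes a key frame when the camera has rotated or moved
    more than the (assumed) thresholds since the last key frame.
    """
    if not poses:
        return []
    keyframes = [0]  # the first frame is always kept
    R_ref, t_ref = poses[0]
    for idx in range(1, len(poses)):
        R, t = poses[idx]
        rotated = relative_rotation_angle(R_ref, R) > max_angle_rad
        moved = translation_distance(t_ref, t) > max_shift_m
        if rotated or moved:
            keyframes.append(idx)
            R_ref, t_ref = R, t  # compare later frames against the new key frame
    return keyframes


def rot_z(deg):
    """Helper for the example: rotation about the z axis by deg degrees."""
    a = math.radians(deg)
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


# Example: a camera that only rotates, sampled at six frames.
origin = (0.0, 0.0, 0.0)
rotating = [(rot_z(d), origin) for d in (0, 4, 8, 12, 16, 24)]
print(select_keyframes(rotating))
```

Comparing each frame against the last accepted key frame, rather than against the immediately preceding frame, keeps slow continuous motion from being discarded indefinitely. Suitable thresholds depend on the sensor's field of view and the overlap the later registration needs.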

[0060] Step 2: feature extraction from the Kinect RGB images. Because the RGB image and the point cloud data have already been registered, feature points extracted from the RGB image can be mapped onto the point cloud data and used as features of the point cloud data. Among them, the RGB image realizes the feature ext...
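The mapping from RGB feature points to the registered point cloud can be illustrated with a short sketch. It assumes an organized point cloud stored in row-major order with one 3D point per pixel, and invalid depth marked by NaN coordinates. That layout, the function name `map_keypoints_to_cloud`, and its parameters are assumptions for illustration only. The 2D feature detector itself is not shown, since the source text is truncated before naming it.

```python
import math


def map_keypoints_to_cloud(keypoints, cloud, width, height):
    """Look up the 3D point registered to each 2D keypoint.

    keypoints: iterable of (u, v) pixel coordinates (column, row).
    cloud: row-major list of (x, y, z) tuples of length width * height
           (assumed organized layout, one point per RGB pixel).
    Returns a list of ((col, row), (x, y, z)) pairs, skipping keypoints
    that fall outside the image or have no valid depth.
    """
    matches = []
    for u, v in keypoints:
        col, row = int(round(u)), int(round(v))
        if not (0 <= col < width and 0 <= row < height):
            continue  # keypoint lies outside the image
        point = cloud[row * width + col]
        if any(math.isnan(c) for c in point):
            continue  # the depth sensor returned no measurement here
        matches.append(((col, row), point))
    return matches


# Example: a tiny 3x2 cloud with one pixel lacking depth.
width, height = 3, 2
cloud = [(float(c), float(r), 1.0) for r in range(height) for c in range(width)]
cloud[1 * width + 0] = (math.nan, math.nan, math.nan)

keypoints = [(1, 0), (2, 1), (5, 5), (0, 1)]
features_3d = map_keypoints_to_cloud(keypoints, cloud, width, height)
print(features_3d)
```

Keypoints without valid depth are dropped rather than interpolated, so every 3D feature corresponds to an actual measurement.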

