Network cache architecture

A cache and memory technology applied in digital transmission systems, secure communication devices, selective content distribution, and similar fields, to solve problems such as communication interruptions.


Embodiment Construction

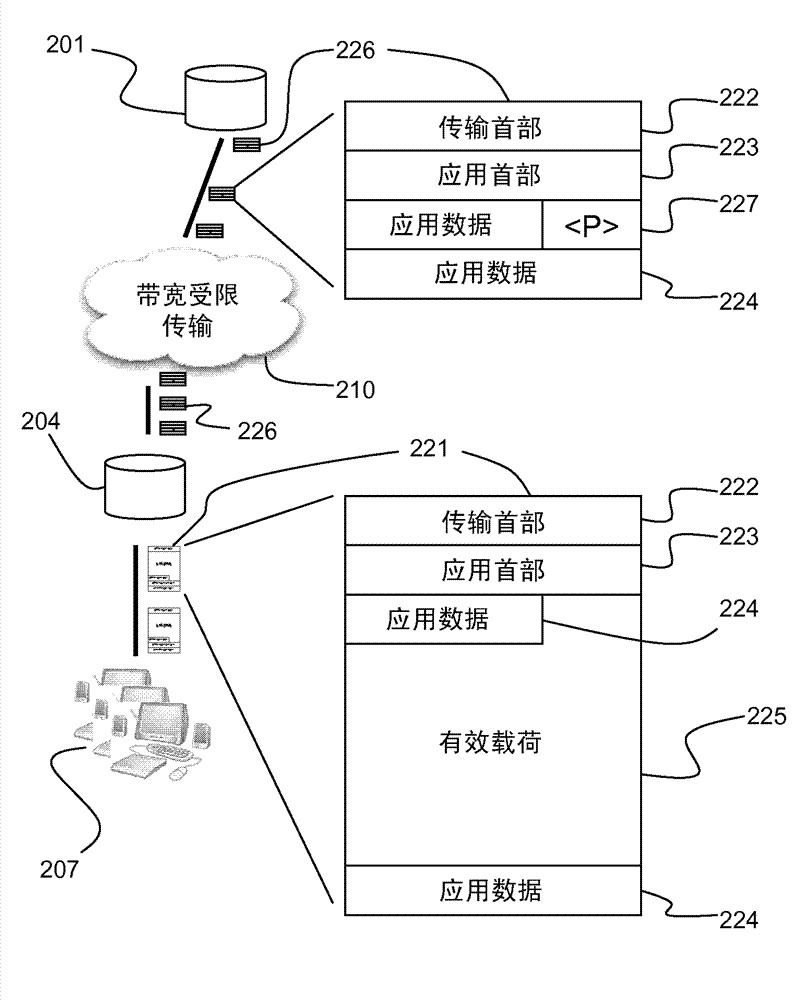

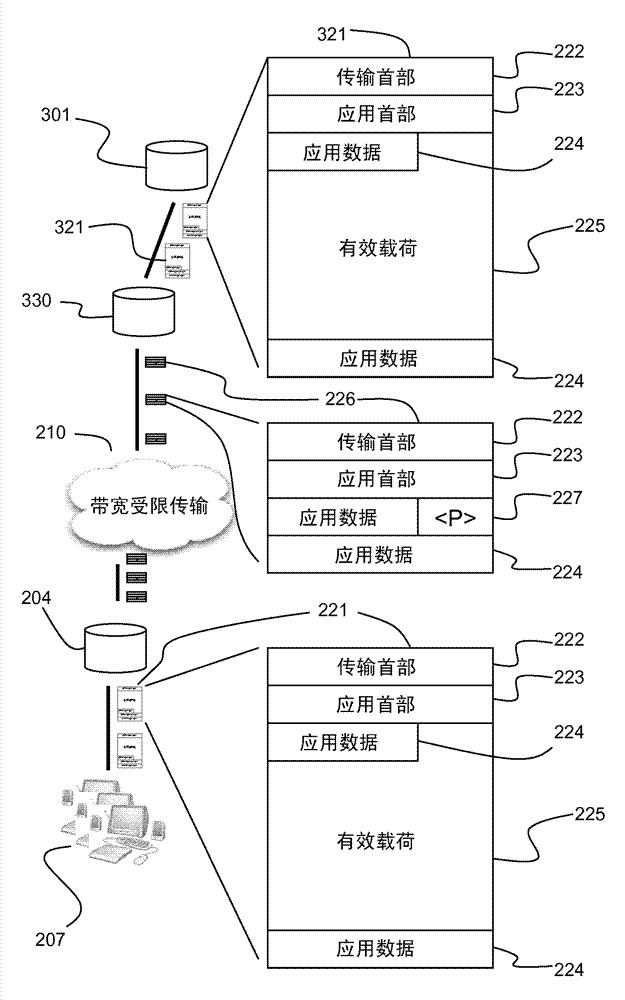

[0062] Figure 2 is a schematic diagram of a network architecture, showing a content server 201, a cache memory 204, and a client 207. A portion 210 of the network is bandwidth-constrained, so sending large amounts of data over that portion should be avoided.

[0063] The network is used to send packets 221 to the client 207. Each packet 221 received by the client 207 should include transport and application headers 222, 223, application data 224 (such as status information), and a data payload 225. These packets are large and require a large amount of bandwidth.

[0064] To avoid overloading the bandwidth-limited portion 210, the content server 201 sends reduced-size packets 226 to the cache memory 204. This is possible only if the content server 201 knows that the cache memory 204 holds the content. In the reduced-size packet 226, a simple pointer 227 into the file held in the cache memory 204 replaces the data payload 225 (all data content except the application ...
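The payload-for-pointer substitution can be sketched in Python as follows. This is a minimal illustration and not the implementation described in the patent. The class and function names, and the pointer layout (file identifier, byte offset, length), are assumptions introduced here. The sketch also assumes that the cache memory 204 rebuilds the full packet 221 before forwarding it to the client 207.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Pointer:
    """Pointer 227. The (file_id, offset, length) layout is hypothetical."""
    file_id: str
    offset: int
    length: int


@dataclass(frozen=True)
class FullPacket:
    """Packet 221 as the client 207 should receive it."""
    transport_header: bytes    # 222
    application_header: bytes  # 223
    application_data: bytes    # 224, e.g. status information
    payload: bytes             # 225


@dataclass(frozen=True)
class ReducedPacket:
    """Reduced-size packet 226: same headers, pointer instead of payload."""
    transport_header: bytes
    application_header: bytes
    application_data: bytes
    pointer: Pointer


def reduce_packet(packet: FullPacket, pointer: Pointer) -> ReducedPacket:
    """Server side: replace payload 225 with pointer 227.

    The caller must already know that the cache holds the referenced file.
    """
    return ReducedPacket(
        packet.transport_header,
        packet.application_header,
        packet.application_data,
        pointer,
    )


def expand_packet(reduced: ReducedPacket, cache_files: Dict[str, bytes]) -> FullPacket:
    """Cache side (assumed behaviour): restore the payload from the cached file."""
    ptr = reduced.pointer
    data = cache_files[ptr.file_id]  # KeyError if the cache does not hold the file
    end = ptr.offset + ptr.length
    if ptr.offset < 0 or ptr.length < 0 or end > len(data):
        raise ValueError("pointer falls outside the cached file")
    return FullPacket(
        reduced.transport_header,
        reduced.application_header,
        reduced.application_data,
        data[ptr.offset:end],
    )
```

In this sketch only the headers, the application data, and three small pointer fields cross the constrained portion 210. The payload bytes are read from the copy already held in the cache.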

