Visual navigation method for a mobile robot based on a hand-drawn map and path

A visual navigation technology for mobile robots, applied in road network navigators, two-dimensional position/course control, etc. It addresses problems such as limited detection ability, navigation failure, and susceptibility to mismatching, and achieves high efficiency and robustness.



Problems solved by technology

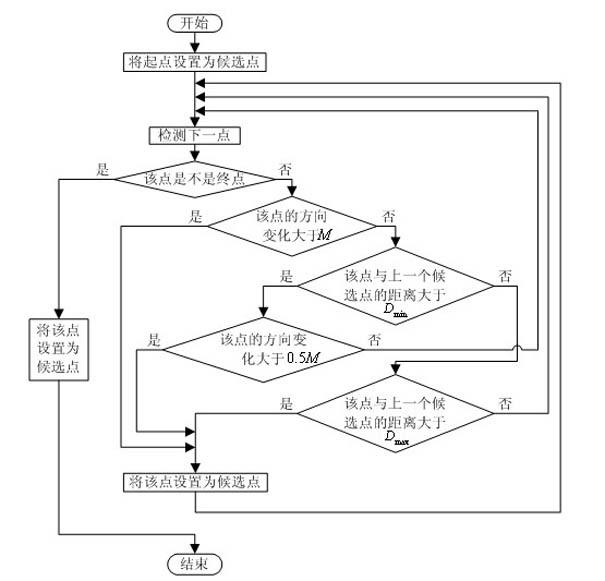

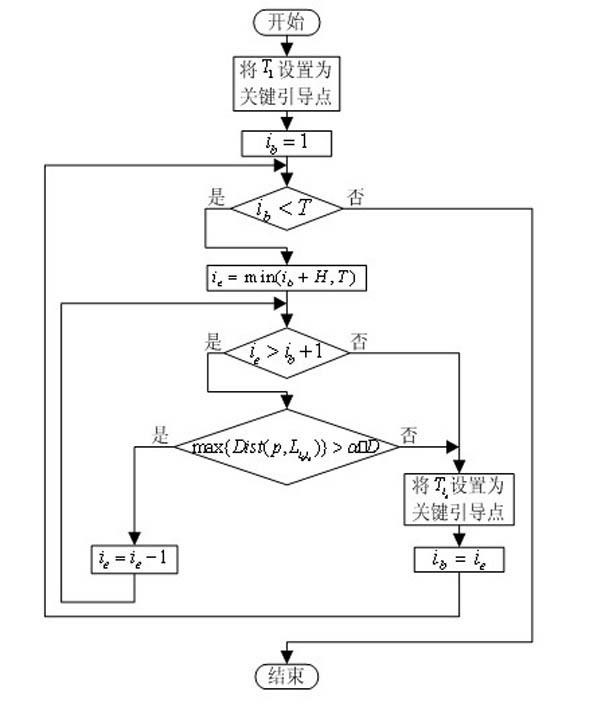


Detailed Description of the Embodiments

[0034] 1 Drawing and representation of hand-drawn maps

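[0035] Suppose the actual environment map is M:

$$M = \{\text{landmarks}(\text{size}, \text{location}),\ \text{static obstacles}(\text{size}, \text{location}),\ \text{dynamic obstacles}(\text{size}, \text{position}),\ \text{task area}(\text{object}, \text{position}, \text{range}),\ \text{initial pose}(\text{size}, \text{position})\}$$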

[0036] Here landmarks (size, location) represent key landmarks for navigation setup; static obstacles (size, location) represent objects that remain stationary for a longer period of time and cannot be used for navigation due to their indistinct features Reference objects, but the robot must avoid these static obstacles in consideration of obstacle avoidance during the moving process; dynamic obstacles (size, position) indicate that the position of objects in the environment is constantly changing during the moving process of the robot; task Area (object, position, range) represents the target or task operation area. The initial pose of the mobile robot (size, position).

[0037] Drawing a hand-drawn map is relatively simple: the user opens the interactive drawing interface. Since the image information of the key landmarks in the environment is saved in the system in adva...

