Throttle Body Optimization for Cloud Computing Systems

JUL 18, 2025 · 9 MIN READ

Cloud Throttling Evolution

Cloud throttling has undergone significant evolution since its inception in the early days of cloud computing. Initially, throttling mechanisms were rudimentary, primarily focused on limiting resource consumption to prevent system overload. As cloud technologies matured, so did the sophistication of throttling techniques.

In the early 2010s, cloud providers introduced basic rate limiting and request throttling to manage API calls and prevent abuse. These early implementations were often static and lacked the flexibility to adapt to varying workloads and user demands. However, they laid the foundation for more advanced throttling strategies.

The mid-2010s saw the emergence of more dynamic throttling approaches. Cloud providers began implementing adaptive throttling algorithms that could adjust limits based on real-time system performance and resource availability. This period also witnessed the integration of machine learning techniques to predict and preemptively manage potential system overloads.

As microservices architecture gained popularity, throttling evolved to address the unique challenges of distributed systems. Circuit breakers and bulkhead patterns were introduced to isolate failures and prevent cascading issues across interconnected services. These patterns became essential components of cloud-native application design and resilience strategies.
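
The circuit breaker pattern mentioned above can be sketched as follows. This is a minimal illustration, not any provider's implementation: the class name `CircuitBreaker`, the parameters `failure_threshold` and `reset_timeout`, and the injectable `clock` are all assumptions made for the example.

```python
import time


class CircuitBreaker:
    """Hypothetical minimal circuit breaker: closed -> open -> half-open."""

    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold  # consecutive failures before opening
        self.reset_timeout = reset_timeout          # seconds to wait before a trial call
        self.clock = clock                          # injectable so tests can fake time
        self.failures = 0
        self.opened_at = None                       # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise RuntimeError("circuit open: call rejected")
            # Timeout elapsed: fall through and allow one trial call (half-open).
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            raise
        # A success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

While the circuit is open, callers fail fast instead of queueing behind an unhealthy dependency, which is what keeps a local failure from cascading.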

The late 2010s brought about the concept of intelligent throttling, which leverages advanced analytics and AI to optimize resource allocation dynamically. This approach considers factors such as user behavior, historical usage patterns, and business priorities to make more informed throttling decisions.

Recent years have seen a shift towards fine-grained, context-aware throttling mechanisms. These systems can differentiate between various types of requests, prioritize critical operations, and apply throttling policies based on complex, multi-dimensional criteria. This evolution has enabled more efficient resource utilization and improved overall system performance.
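
One simple way to picture request prioritization is a load-shedding rule that drops lower-priority traffic first. The sketch below is illustrative only: the priority class names and the load cut-offs in `SHED_ABOVE` are hypothetical values, not taken from any real system.

```python
# Hypothetical priority classes and the load level above which each is shed.
# None means the class is never shed by this rule.
SHED_ABOVE = {"critical": None, "standard": 0.85, "batch": 0.60}


def admit(priority, load):
    """Return True if a request of this priority is admitted at the given load (0.0-1.0)."""
    limit = SHED_ABOVE[priority]
    return limit is None or load <= limit


# At 70% load, batch work is shed while standard and critical requests pass.
for name in ("critical", "standard", "batch"):
    print(name, admit(name, 0.70))
```

A real context-aware policy would likely combine several dimensions (tenant, endpoint, request cost); a single load figure is used here only to keep the idea visible.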

The integration of edge computing with cloud systems has further influenced throttling strategies. Edge-based throttling mechanisms have been developed to manage traffic closer to the source, reducing latency and improving the responsiveness of cloud services.

Looking ahead, the future of cloud throttling is likely to involve even more sophisticated, AI-driven approaches that can anticipate and mitigate potential bottlenecks before they occur. The ongoing evolution of cloud throttling continues to play a crucial role in optimizing performance, ensuring fairness, and maintaining the stability of increasingly complex cloud computing environments.

Market Demand Analysis

The market demand for throttle body optimization in cloud computing systems has been experiencing significant growth in recent years. This surge is primarily driven by the increasing adoption of cloud services across various industries and the growing need for efficient resource management in data centers.

Cloud service providers are constantly seeking ways to improve their infrastructure's performance and energy efficiency. Throttle body optimization plays a crucial role in achieving these goals by regulating the flow of resources, such as CPU, memory, and network bandwidth, to different applications and services running on cloud platforms. This optimization technique helps in balancing workloads, preventing resource contention, and ensuring optimal utilization of available resources.

The global cloud computing market is projected to grow substantially in the coming years, with a compound annual growth rate (CAGR) exceeding industry averages. This growth is fueled by the digital transformation initiatives of businesses across sectors including finance, healthcare, retail, and manufacturing. As organizations increasingly migrate their operations to the cloud, demand for efficient resource management solutions, including throttle body optimization, is expected to rise with it.

One of the key drivers for the market demand of throttle body optimization is the need for cost reduction in cloud operations. By implementing effective throttling mechanisms, cloud providers can optimize resource allocation, reduce energy consumption, and improve overall system efficiency. This, in turn, allows them to offer more competitive pricing to their customers while maintaining profitability.

Another factor contributing to market demand is the growing complexity of cloud environments. As multi-cloud and hybrid cloud architectures become more prevalent, the need for sophisticated throttling mechanisms that manage resources across diverse platforms increases. Throttle body optimization solutions that integrate with multiple cloud environments and provide unified resource management are in particularly high demand.

The rise of edge computing and the Internet of Things (IoT) is also fueling the demand for throttle body optimization in cloud systems. As more devices connect to the cloud and generate massive amounts of data, efficient resource allocation becomes critical to ensure smooth operations and prevent bottlenecks. Throttle body optimization techniques that can dynamically adjust resource allocation based on real-time demand are gaining traction in this context.

Furthermore, the increasing focus on sustainability and green computing is driving the adoption of throttle body optimization solutions. By optimizing resource utilization and reducing energy consumption, these solutions help cloud providers meet their environmental goals and comply with regulatory requirements related to energy efficiency and carbon emissions.

Current Throttling Tech

Current throttling techniques in cloud computing systems primarily focus on managing resource allocation and utilization to optimize performance, cost-efficiency, and energy consumption. One of the most widely adopted approaches is CPU throttling, which involves dynamically adjusting the processing speed of virtual machines (VMs) or containers based on workload demands and system constraints.

CPU throttling mechanisms typically employ a combination of hardware and software-based solutions. At the hardware level, modern processors support dynamic voltage and frequency scaling (DVFS), allowing for real-time adjustments to clock speeds and power consumption. Cloud providers leverage these capabilities through hypervisor-level controls, enabling fine-grained management of CPU resources across multiple tenants.

Software-defined throttling techniques complement hardware-based methods by implementing intelligent scheduling algorithms and resource allocation policies. These algorithms continuously monitor system metrics such as CPU utilization, memory usage, and I/O operations to make informed decisions about when and how to throttle resources. Advanced machine learning models are increasingly being employed to predict workload patterns and proactively adjust throttling parameters for optimal performance.
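
A monitor-and-adjust loop of this kind can be sketched with an additive-increase, multiplicative-decrease (AIMD) rule. AIMD is chosen here purely for illustration; the source does not say which algorithm providers use. The function name `adjust_limit` and every default value are assumptions.

```python
def adjust_limit(current_limit, cpu_utilization, target=0.75,
                 increase_step=10, decrease_factor=0.5,
                 min_limit=10, max_limit=1000):
    """Return a new requests-per-second limit given the latest CPU utilization (0.0-1.0)."""
    if cpu_utilization > target:
        new_limit = int(current_limit * decrease_factor)  # back off multiplicatively
    else:
        new_limit = current_limit + increase_step         # probe upward additively
    # Clamp so the limit never collapses to zero or grows without bound.
    return max(min_limit, min(max_limit, new_limit))


# Feed a series of (assumed) utilization samples through the controller.
limit = 100
for cpu in [0.60, 0.70, 0.90, 0.50]:
    limit = adjust_limit(limit, cpu)
    print(f"cpu={cpu:.2f} -> limit={limit}")
```

Backing off quickly and recovering slowly tends to keep the system away from overload; a predictive model, as described above, would replace the reactive `cpu_utilization > target` check with a forecast.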

Network throttling is another critical aspect of cloud computing systems, particularly in multi-tenant environments. Cloud providers implement traffic shaping and rate limiting techniques to ensure fair bandwidth allocation among different users and applications. Software-defined networking (SDN) technologies play a crucial role in enabling dynamic and programmable network throttling, allowing for real-time adjustments based on application requirements and network conditions.
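
Rate limiting of this sort is often built on a token bucket. The sketch below is a generic, single-process version under assumed names (`TokenBucket`, `rate`, `capacity`); it is not tied to any particular provider or SDN controller.

```python
import time


class TokenBucket:
    """Hypothetical token bucket: sustained rate of `rate` per second, bursts up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate            # tokens added per second
        self.capacity = capacity    # maximum burst size
        self.clock = clock          # injectable so tests can fake time
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self, cost=1.0):
        """Return True and spend tokens if the request fits, otherwise False."""
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

Giving each tenant its own bucket is one straightforward way to approximate fair bandwidth allocation; `cost` can be set to a packet or payload size to limit bytes rather than requests.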

Storage throttling mechanisms are also essential in managing I/O operations and maintaining quality of service (QoS) for cloud-based applications. Current techniques include I/O scheduling algorithms that prioritize and limit storage access based on predefined policies or service level agreements (SLAs). Some cloud providers offer tiered storage options with different performance characteristics, allowing users to choose the appropriate level of throttling for their specific needs.

Containerization technologies, such as Docker and Kubernetes, have introduced new dimensions to resource throttling in cloud environments. These platforms provide granular control over resource allocation and usage limits for individual containers, enabling more efficient utilization of underlying hardware resources. Container orchestration tools offer advanced features like auto-scaling and load balancing, which dynamically adjust resource allocation based on application demands and system metrics.
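
As a concrete illustration of container-level CPU limits: on Linux, a CPU limit is commonly enforced through the kernel's CFS bandwidth control, which grants a quota of CPU time per scheduling period. The sketch below converts a Kubernetes-style CPU quantity into that quota, assuming the usual 100 ms period; the helper names are hypothetical, and real runtimes may apply additional rules not shown here.

```python
def parse_cpu_millicores(value: str) -> int:
    """Parse a Kubernetes-style CPU quantity such as '500m' or '1.5' into millicores."""
    if value.endswith("m"):
        return int(value[:-1])
    return int(round(float(value) * 1000))


def cfs_quota_us(cpu_limit: str, period_us: int = 100_000) -> int:
    """CPU time in microseconds the container may use in each scheduling period."""
    return parse_cpu_millicores(cpu_limit) * period_us // 1000


# A 500m limit allows 50 ms of CPU time in every 100 ms period.
print(cfs_quota_us("500m"))
```

Once a container has used its quota within a period, it is paused until the next period begins, which is why a tight CPU limit can show up as added latency rather than as an error.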

As cloud computing systems continue to evolve, emerging throttling techniques are focusing on more holistic approaches that consider the interdependencies between different resource types. For example, some solutions aim to optimize the trade-offs between CPU, memory, storage, and network resources to achieve overall system efficiency. Additionally, there is growing interest in leveraging artificial intelligence and machine learning algorithms to develop more sophisticated and adaptive throttling mechanisms that can automatically optimize resource allocation in complex, dynamic cloud environments.

Throttling Mechanisms

01 Throttle body design optimization

Optimizing the design of throttle bodies to improve airflow and engine performance. This includes modifications to the shape, size, and internal components of the throttle body to enhance air intake efficiency and responsiveness.

02 Electronic throttle control systems

Implementation of electronic throttle control systems to improve precision and responsiveness. These systems use sensors and actuators to regulate airflow more accurately, resulting in better fuel efficiency and engine performance.

03 Throttle body airflow management

Techniques for managing airflow through the throttle body, including the use of variable geometry designs, adaptive airflow control mechanisms, and advanced valve systems. These innovations aim to optimize air intake across different engine operating conditions.

04 Integration with engine management systems

Integrating throttle body optimization with broader engine management systems. This approach involves coordinating throttle control with other engine parameters to achieve optimal performance, fuel efficiency, and emissions control.

05 Throttle body materials and manufacturing

Advancements in materials and manufacturing processes for throttle bodies. This includes the use of lightweight materials, improved sealing technologies, precision manufacturing techniques, and innovative coatings to improve durability and performance.

Key Cloud Providers

Throttle body optimization for cloud computing systems is evolving rapidly, and the market is growing as demand for efficient cloud infrastructure increases. The technology's maturity varies among key players: established technology companies such as IBM, Intel, and Microsoft lead in innovation and implementation, while emerging companies such as Snowflake and Kyndryl are also making strides. The competitive landscape includes both traditional IT vendors and specialized cloud service providers, each contributing techniques for improved cloud performance and resource management.

International Business Machines Corp.

Technical Solution: IBM's approach to throttle body optimization for cloud computing systems involves the use of advanced machine learning algorithms and predictive analytics. Their solution utilizes IBM Watson AI to dynamically adjust resource allocation based on real-time workload demands and historical usage patterns[1]. This system employs a feedback loop that continuously monitors system performance, network traffic, and application requirements to fine-tune throttling parameters. IBM's implementation also incorporates their patented "cognitive throttling" technology, which uses natural language processing to interpret user intentions and prioritize tasks accordingly[2]. The system can scale across multiple data centers, ensuring consistent performance optimization across geographically distributed cloud environments[3].

Strengths: Highly adaptive and intelligent system, leveraging IBM's strong AI capabilities. Weaknesses: May require significant computational overhead for AI processing, potentially increasing operational costs.

Intel Corp.

Technical Solution: Intel's throttle body optimization for cloud computing systems is built around their Intel Resource Director Technology (RDT) framework. This hardware-assisted solution provides fine-grained control over shared resources such as cache and memory bandwidth[4]. Intel's approach integrates with their latest Xeon processors, utilizing built-in telemetry and monitoring capabilities to make real-time throttling decisions. The system employs Intel's Speed Select Technology to dynamically adjust core frequencies and power consumption based on workload characteristics[5]. Additionally, Intel's solution incorporates their Advanced Vector Extensions (AVX) frequency control, which allows for precise throttling of high-performance computing tasks without impacting other cloud services[6]. Intel's implementation also leverages their Optane DC persistent memory technology to optimize data access patterns and reduce I/O bottlenecks, further enhancing overall system efficiency[7].

Strengths: Hardware-level optimization provides low-latency control and high efficiency. Weaknesses: Heavily dependent on Intel hardware, potentially limiting flexibility in heterogeneous cloud environments.

Innovative Algorithms

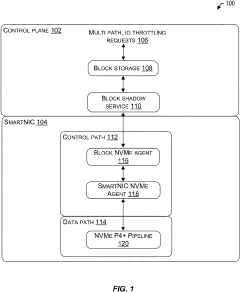

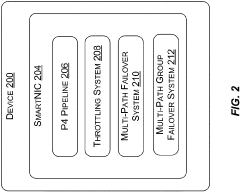

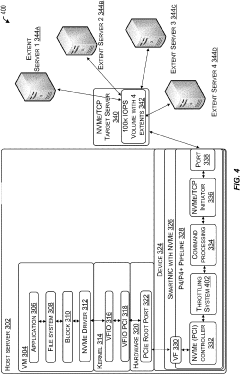

Increased data processing performance of a non-volatile memory express (NVME) block store

Patent · Active · US20230138546A1

Innovation

- Implementing a throttling system at a centralized node in a cloud infrastructure environment to distribute I/O operations across multiple servers, dynamically assigning tasks based on throttling values and processing parameters, and utilizing multiple routing paths to ensure efficient processing and failover in case of path failures.

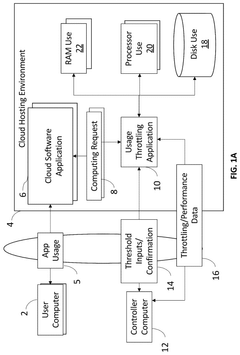

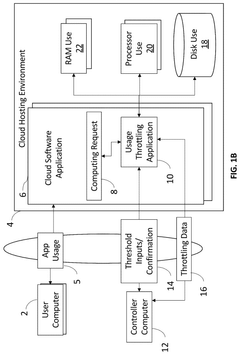

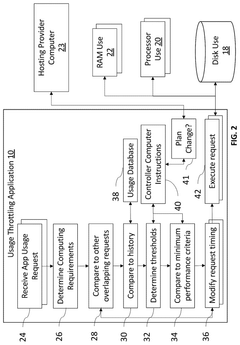

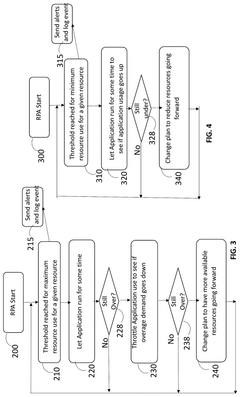

Cloud application threshold based throttling

Patent · Pending · US20250086019A1

Innovation

- A system and method that intercepts computing requests, determines delays to keep resource usage below thresholds, and uses machine learning to predict usage patterns and adjust hosting plans accordingly, while generating alerts and automating actions based on resource monitoring.
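
The general idea of delaying requests to stay under a threshold can be illustrated with a simple pacing rule. This sketch is not the method claimed in the patent: it ignores the machine-learning prediction, alerting, and hosting-plan adjustments, and the class `DelayThrottle` and its parameter are hypothetical.

```python
class DelayThrottle:
    """Hypothetical pacing throttle: delays requests so the rate stays at or below a threshold."""

    def __init__(self, max_per_second):
        self.interval = 1.0 / max_per_second  # minimum spacing between requests
        self.next_slot = 0.0                  # earliest time the next request may run

    def delay_for(self, arrival_time):
        """Return how long (in seconds) a request arriving at arrival_time should wait."""
        start = max(arrival_time, self.next_slot)
        self.next_slot = start + self.interval
        return start - arrival_time


# Two requests arriving together at a 2-per-second threshold:
# the first runs immediately, the second is held for half a second.
throttle = DelayThrottle(max_per_second=2)
print(throttle.delay_for(0.0), throttle.delay_for(0.0))
```

Delaying rather than rejecting keeps requests from failing outright, at the cost of queueing latency when arrivals exceed the threshold for long.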

Performance Metrics

Performance metrics play a crucial role in evaluating and optimizing throttle body systems for cloud computing environments. These metrics provide quantitative measures to assess the efficiency, responsiveness, and overall performance of the throttling mechanisms implemented in cloud infrastructures.

One of the primary performance metrics for throttle body optimization is throughput. This metric measures the amount of data or requests that can be processed by the system within a given time frame. In cloud computing, high throughput is essential to handle large volumes of concurrent requests and ensure efficient resource utilization. Throttle body optimization aims to maximize throughput while maintaining system stability and preventing overload.

Latency is another critical performance metric in throttle body systems. It represents the time taken for a request to be processed and a response to be delivered. Low latency is crucial for real-time applications and user experience in cloud environments. Throttle body optimization techniques focus on minimizing latency by efficiently managing resource allocation and request prioritization.
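
Both metrics are straightforward to compute from request logs. The sketch below uses made-up sample values and the nearest-rank definition of a percentile; the function names are chosen for the example.

```python
import math


def throughput(request_count, window_seconds):
    """Requests processed per second over the measurement window."""
    return request_count / window_seconds


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


# Hypothetical latencies in milliseconds, including one slow outlier.
latencies_ms = [12, 15, 11, 250, 14, 13, 16, 18, 17, 19]
print(throughput(1200, 60))          # requests per second
print(percentile(latencies_ms, 50))  # median latency
print(percentile(latencies_ms, 95))  # tail latency
```

Tail percentiles are usually more informative than averages when tuning throttling, because a single delayed request can dominate the high percentiles while barely moving the mean.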

Resource utilization is a key metric that reflects the efficiency of throttle body systems in managing cloud resources. This metric measures how effectively CPU, memory, storage, and network resources are utilized across the cloud infrastructure. Optimal throttle body configurations aim to achieve high resource utilization while preventing resource contention and maintaining system performance.

Scalability is an essential performance metric for cloud computing systems. It measures the system's ability to handle increased workloads by adding or removing resources dynamically. Throttle body optimization techniques should ensure that the system can scale horizontally or vertically without compromising performance or introducing bottlenecks.

Quality of Service (QoS) metrics are crucial for assessing the overall user experience and meeting service level agreements (SLAs) in cloud environments. These metrics include availability, reliability, and consistency of service delivery. Throttle body optimization strategies should aim to maintain high QoS levels while efficiently managing system resources and handling varying workloads.

Cost-effectiveness is an increasingly important performance metric in cloud computing. It measures the balance between system performance and operational costs. Throttle body optimization techniques should consider resource allocation efficiency, energy consumption, and overall infrastructure costs to achieve optimal cost-performance ratios.

By focusing on these performance metrics, throttle body optimization for cloud computing systems can significantly enhance overall system efficiency, responsiveness, and scalability. Continuous monitoring and analysis of these metrics enable cloud service providers to fine-tune their throttling mechanisms and deliver superior performance to their users.

Scalability Challenges

Cloud computing systems face significant scalability challenges as they strive to meet the ever-increasing demands of modern applications and services. One of the primary issues is the ability to handle rapid and unpredictable fluctuations in workload. As user bases grow and application complexity increases, cloud infrastructures must be able to dynamically allocate resources to maintain performance and reliability.

The horizontal scaling of resources, while effective, introduces its own set of challenges. Coordinating and managing a large number of distributed nodes becomes increasingly complex, requiring sophisticated orchestration tools and strategies. Load balancing across these nodes presents another hurdle, as uneven distribution can lead to bottlenecks and inefficiencies in resource utilization.

Data management at scale poses additional difficulties. As data volumes grow exponentially, traditional database systems struggle to maintain performance and consistency. Distributed database solutions offer potential remedies but come with their own complexities in terms of data partitioning, replication, and consistency models.

Network scalability is another critical factor. As the number of nodes and the volume of data transfers increase, network infrastructure must evolve to prevent communication bottlenecks. Software-defined networking and advanced routing algorithms are being developed to address these challenges, but their implementation and optimization remain ongoing concerns.

The scalability of management and monitoring systems is often overlooked but becomes crucial as cloud environments expand. Collecting, processing, and analyzing metrics from thousands or millions of components in real-time requires highly efficient and scalable monitoring solutions.

Security and compliance requirements add another layer of complexity to scalability efforts. As systems grow, maintaining consistent security policies and ensuring regulatory compliance across all components becomes increasingly challenging. Scalable identity management, access control, and encryption systems must be designed to grow seamlessly with the infrastructure.

Lastly, the scalability of the underlying hardware infrastructure presents its own set of challenges. Power consumption, cooling requirements, and physical space limitations in data centers can become bottlenecks as systems scale up. Innovations in hardware design, such as more energy-efficient processors and advanced cooling technologies, are necessary to address these physical constraints.
