Throttle Body Applications in Artificial Intelligence (AI) Hardware

JUL 18, 2025 | 9 MIN READ

AI Hardware Throttling: Background and Objectives

Throttle body applications in AI hardware have emerged as an area of focus in the rapidly evolving field of artificial intelligence. The concept, which originated in automotive engineering where a throttle body regulates airflow into an engine, has found new relevance in managing power consumption and thermal output in AI systems. The primary objective of implementing throttle body techniques in AI hardware is to sustain performance while maintaining energy efficiency and thermal stability.

The evolution of AI hardware has been marked by an increasing demand for computational power, leading to challenges in power management and heat dissipation. As AI models grow more complex and data-intensive, the need for sophisticated hardware solutions becomes paramount. Throttle body applications address these challenges by dynamically regulating the flow of resources, such as power and cooling, to different components of AI systems.

The development of throttle body technology for AI hardware can be traced back to the early 2010s when researchers began exploring ways to adapt power management techniques from other industries to the unique demands of AI processing. Initial efforts focused on simple throttling mechanisms that could reduce clock speeds or voltage levels in response to thermal thresholds. However, as AI workloads became more diverse and unpredictable, more advanced throttling strategies were required.
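The simple threshold mechanism described above can be sketched as follows. This is a minimal illustration, not a vendor implementation: the class name `ThermalThrottle`, the trip and recovery temperatures, and the clock values are all assumptions chosen for the example. The gap between the two thresholds adds hysteresis so the clock does not oscillate when the temperature hovers near the limit.

```python
from dataclasses import dataclass


@dataclass
class ThermalThrottle:
    """Hypothetical threshold throttle with hysteresis (all values illustrative)."""
    throttle_at_c: float = 85.0   # assumed trip temperature
    resume_at_c: float = 75.0     # assumed recovery temperature
    full_mhz: int = 1500          # assumed nominal clock
    reduced_mhz: int = 900        # assumed throttled clock
    throttled: bool = False

    def update(self, temp_c: float) -> int:
        """Return the clock (MHz) to apply for the latest temperature reading."""
        if temp_c >= self.throttle_at_c:
            self.throttled = True
        elif temp_c <= self.resume_at_c:
            self.throttled = False
        # Between the two thresholds the previous state is kept (hysteresis).
        return self.reduced_mhz if self.throttled else self.full_mhz


def run_trace(readings):
    """Apply a fresh throttle to a sequence of temperature readings."""
    throttle = ThermalThrottle()
    return [throttle.update(t) for t in readings]
```

For example, `run_trace([70, 86, 80, 74, 80])` keeps the reduced clock at 80 °C while cooling down, but the full clock at 80 °C once the device has recovered below 75 °C.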

Current objectives in this field include the development of intelligent throttling algorithms that can predict and preemptively adjust system parameters based on workload characteristics. These algorithms aim to balance performance requirements with power and thermal constraints, ensuring optimal utilization of hardware resources. Additionally, researchers are working on integrating throttle body techniques with other power management strategies, such as dynamic voltage and frequency scaling (DVFS) and heterogeneous computing architectures.
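One way to picture preemptive adjustment is to extrapolate the recent temperature trend and throttle before the limit is actually reached. The sketch below assumes a plain linear extrapolation from the last two samples; the function names, the 85 °C limit, and the three-step horizon are hypothetical, and a production predictor would likely use workload features rather than temperature alone.

```python
def predict_temp(history, horizon_steps):
    """Linearly extrapolate temperature from the last two samples."""
    if len(history) < 2:
        return history[-1]
    slope = history[-1] - history[-2]          # change per sampling step
    return history[-1] + slope * horizon_steps


def should_prethrottle(history, limit_c=85.0, horizon_steps=3):
    """True if the extrapolated temperature would reach the assumed limit."""
    return predict_temp(history, horizon_steps) >= limit_c
```

With readings of 70, 74, and 78 °C the trend projects 90 °C three steps ahead, so the check fires even though the current reading is still below the limit.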

Another key goal is to create adaptive throttling systems that can learn from historical data and adjust their behavior over time. This approach promises to enhance the efficiency of AI hardware across a wide range of applications, from edge devices to data center servers. By fine-tuning throttling parameters based on specific AI models and usage patterns, these systems can significantly improve energy efficiency without compromising performance.

As the field progresses, there is a growing emphasis on developing standardized metrics and benchmarks for evaluating the effectiveness of throttle body applications in AI hardware. This standardization effort aims to facilitate comparisons between different throttling techniques and guide future research and development initiatives. The ultimate objective is to create AI hardware systems that can dynamically adapt to changing computational demands while maintaining optimal performance and energy efficiency.

Market Analysis for AI-Optimized Hardware

The market for AI-optimized hardware has experienced significant growth in recent years, driven by the increasing demand for high-performance computing solutions capable of handling complex artificial intelligence and machine learning workloads. This surge in demand is primarily fueled by the rapid adoption of AI technologies across various industries, including healthcare, finance, automotive, and consumer electronics.

The global AI chip market size was valued at approximately $8 billion in 2020 and is projected to reach over $70 billion by 2026, with a compound annual growth rate (CAGR) of around 40% during the forecast period. This remarkable growth is attributed to the rising investments in AI technologies, the proliferation of AI applications, and the need for more efficient and powerful hardware solutions to support these applications.
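As a quick consistency check on the figures above, the compound annual growth rate implied by the two endpoints can be computed directly. The sketch uses only the rounded values quoted in the text (about $8 billion in 2020 and $70 billion in 2026), so the result is indicative rather than exact.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


# Endpoints as quoted in the text, in billions of US dollars.
implied_rate = cagr(8.0, 70.0, 2026 - 2020)
print(f"Implied CAGR: {implied_rate:.1%}")
```

The implied rate falls between 43% and 44%, which is in line with the "around 40%" stated above given that "over $70 billion" is a lower bound.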

Key market segments within the AI-optimized hardware space include graphics processing units (GPUs), application-specific integrated circuits (ASICs), field-programmable gate arrays (FPGAs), and central processing units (CPUs) with AI acceleration capabilities. Among these, GPUs currently dominate the market due to their versatility and widespread adoption in training deep learning models. However, ASICs are gaining traction for their energy efficiency and performance in specific AI tasks.

The market landscape is characterized by intense competition among major players such as NVIDIA, Intel, AMD, Google, and IBM, as well as emerging startups developing specialized AI chips. These companies are continuously innovating to improve performance, reduce power consumption, and enhance the overall efficiency of AI hardware.

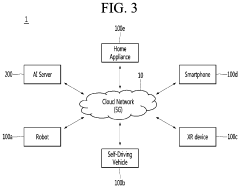

Cloud service providers, including Amazon Web Services, Microsoft Azure, and Google Cloud Platform, are also significant consumers of AI-optimized hardware, driving demand for high-performance solutions to power their AI services and infrastructure. Additionally, the edge computing segment is experiencing rapid growth, with an increasing need for AI-capable hardware in IoT devices, autonomous vehicles, and smart city applications.

Geographically, North America leads the market, followed by Asia-Pacific and Europe. The Asia-Pacific region, particularly China, is expected to witness the highest growth rate due to substantial investments in AI research and development, as well as the presence of major semiconductor manufacturers.

Key trends shaping the market include the development of more specialized and task-specific AI chips, the integration of AI capabilities into existing hardware platforms, and the focus on reducing the environmental impact of AI computing through energy-efficient designs. The market is also seeing a shift towards heterogeneous computing architectures that combine different types of processors to optimize performance for various AI workloads.

Current Challenges in AI Hardware Throttling

AI hardware throttling faces several significant challenges in the current technological landscape. One of the primary issues is the dynamic nature of AI workloads, which often require varying computational resources. Traditional throttling mechanisms struggle to adapt quickly to these fluctuating demands, leading to suboptimal performance or unnecessary power consumption.

Another challenge lies in the complexity of modern AI architectures. As AI models become more sophisticated, they often involve multiple interconnected components, each with its own processing requirements. Coordinating throttling across these diverse elements while maintaining overall system efficiency proves to be a daunting task.

The trade-off between performance and power efficiency presents a persistent challenge. Aggressive throttling can significantly reduce power consumption but may compromise the AI system's ability to meet real-time processing requirements. Conversely, minimal throttling may lead to excessive power usage and thermal issues, particularly in edge devices with limited cooling capabilities.

Thermal management is a critical concern in AI hardware throttling. The high computational demands of AI workloads generate substantial heat, which can degrade performance and reduce hardware lifespan if not properly managed. Developing effective throttling strategies that balance performance with thermal constraints remains an ongoing challenge.

Heterogeneous computing environments, which combine different types of processors (e.g., CPUs, GPUs, and specialized AI accelerators), further complicate throttling efforts. Each component may have distinct throttling requirements and capabilities, making it difficult to implement a unified throttling strategy across the entire system.

The need for fine-grained control presents another hurdle. Optimal throttling often requires precise adjustments at the level of individual cores or even specific functional units within a processor. Implementing such granular control mechanisms while maintaining system stability and predictability is technically challenging.

Lastly, the rapid pace of AI hardware innovation introduces a moving target for throttling solutions. As new architectures and accelerators emerge, throttling techniques must continuously evolve to address the unique characteristics and requirements of these novel hardware designs. This constant evolution demands ongoing research and development efforts to keep throttling strategies aligned with the latest advancements in AI hardware technology.

Existing Throttle Body Implementations for AI

01 Throttle body design and structure

Throttle bodies are designed with specific structures to control airflow into an engine. They may include features like adjustable valves, airflow passages, and integrated sensors to optimize engine performance and fuel efficiency.

- Throttle body design and construction: Throttle bodies are designed to control airflow into an engine's intake manifold. They typically consist of a housing with a butterfly valve that can be opened or closed to regulate air intake. Various designs focus on improving airflow efficiency, reducing turbulence, and enhancing overall engine performance.

- Electronic throttle control systems: Modern throttle bodies often incorporate electronic control systems, replacing mechanical linkages with sensors and actuators. These systems allow for more precise control of the throttle position, improving fuel efficiency and engine response. They can also integrate with other engine management systems for optimal performance.

- Throttle body cleaning and maintenance: Throttle bodies can accumulate carbon deposits and other contaminants over time, affecting their performance. Various cleaning methods and maintenance procedures have been developed to keep throttle bodies functioning optimally. This includes the use of specialized cleaning solutions and tools designed to remove buildup without damaging sensitive components.

- Throttle body integration with fuel injection systems: Many throttle body designs are integrated with fuel injection systems to improve fuel delivery and atomization. This integration can involve incorporating fuel injectors directly into the throttle body or designing the throttle body to work in conjunction with separate fuel injectors. The goal is to achieve better fuel-air mixture and more efficient combustion.

- Throttle body modifications for performance enhancement: Aftermarket modifications and custom throttle body designs are used to enhance engine performance. These modifications can include increasing the throttle bore size, improving airflow characteristics, or adding features like adjustable stops. Such enhancements aim to increase horsepower and torque output, particularly in high-performance or racing applications.

02 Electronic throttle control systems

Modern throttle bodies often incorporate electronic control systems, including sensors and actuators, to precisely regulate airflow based on various engine parameters and driver input. These systems can improve responsiveness and fuel economy.

03 Idle air control mechanisms

Throttle bodies may include idle air control mechanisms to maintain stable engine idle speed. These mechanisms can bypass the main throttle plate to provide a controlled amount of air during idle conditions.

04 Throttle body cleaning and maintenance

Various methods and devices are developed for cleaning and maintaining throttle bodies to ensure optimal performance. These may include specialized cleaning solutions, tools, or automated cleaning systems to remove carbon deposits and other contaminants.

05 Integration with fuel injection systems

Throttle bodies are often designed to work in conjunction with fuel injection systems. This integration can involve precise positioning of fuel injectors, airflow sensors, and other components to optimize air-fuel mixture and combustion efficiency.

Key Players in AI Hardware and Throttling Solutions

The competitive landscape for Throttle Body Applications in Artificial Intelligence (AI) Hardware is characterized by a rapidly evolving market in its early growth stage. Major players like Intel, GM Global Technology Operations, and Honda Motor Co. are investing heavily in this emerging field, leveraging their expertise in automotive and semiconductor technologies. The market size is expanding as AI hardware becomes increasingly crucial for autonomous vehicles and smart transportation systems. While established companies are leading in research and development, startups like Mythic and Cerebras Systems are introducing innovative AI chip designs. The technology is still maturing, with companies focusing on improving performance, energy efficiency, and integration with existing automotive systems.

Intel Corp.

Technical Solution: Intel has developed AI-optimized hardware solutions that integrate throttle body control for improved performance and efficiency in AI applications. Their neuromorphic computing chip, Loihi, mimics the human brain's neural structure and can be applied to throttle body control in AI systems[1]. Intel's Field Programmable Gate Arrays (FPGAs) offer flexible, reconfigurable hardware that can be optimized for throttle body applications in AI, allowing for real-time adjustments and improved energy efficiency[2]. Additionally, Intel's Movidius Vision Processing Units (VPUs) provide dedicated AI acceleration for edge devices, which can be utilized in throttle body control systems for autonomous vehicles and robotics[3].

Strengths: Diverse AI hardware portfolio, strong R&D capabilities, and established market presence. Weaknesses: Competition from specialized AI chip manufacturers and potential for slower adaptation to rapidly evolving AI hardware requirements.

Hewlett Packard Enterprise Development LP

Technical Solution: HPE has developed innovative AI hardware solutions that incorporate throttle body applications for enhanced performance and efficiency. Their Memory-Driven Computing architecture, which places memory at the center of the computing platform, can be applied to throttle body control in AI systems, allowing for faster data processing and reduced latency[4]. HPE's Superdome Flex servers provide high-performance computing capabilities that can be leveraged for complex AI workloads involving throttle body applications[5]. Furthermore, HPE's edge computing solutions, such as the Edgeline Converged Edge Systems, offer AI processing capabilities at the network edge, which can be utilized for real-time throttle body control in autonomous vehicles and industrial automation[6].

Strengths: Strong enterprise focus, innovative memory-centric architecture, and comprehensive edge-to-cloud solutions. Weaknesses: Less specialized in AI-specific hardware compared to some competitors and potential challenges in consumer-facing AI applications.

Innovative Throttling Mechanisms for AI Hardware

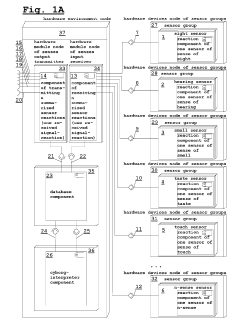

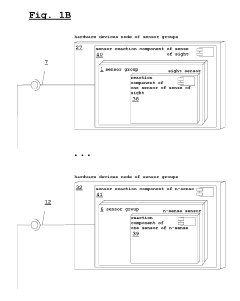

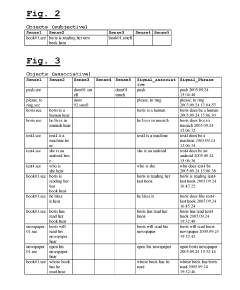

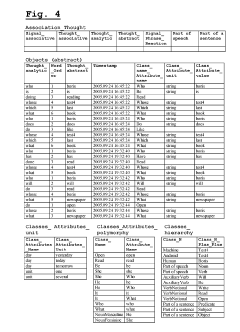

Computer system of an artificial intelligence of a cyborg or an android, wherein a received signal-reaction of the computer system of the artificial intelligence, a corresponding association of the computer system of the artificial intelligence, a corresponding thought of the computer system of the artificial intelligence are physically built, and a working method of the computer system of the artificial intelligence of the cyborg or the android

Patent (Inactive): US20190197112A1

Innovation

- A computer system based on natural language that substantiates received signal-reactions, associations, and thoughts as subjective, associative, and abstract objects, respectively, using a pointer-oriented approach, allowing for object-oriented functionality without reliance on hardware, operating systems, or specific programming languages, and enabling the system to operate as an artificial brain.

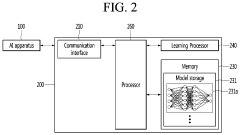

Artificial intelligence server and method for updating artificial intelligence model by merging plurality of pieces of update information

Patent (Active): US11669781B2

Innovation

- An AI server and method that selects and merges update information from multiple AI apparatuses, excluding outliers, to update the AI model by adding a sum, average, or weighted sum of the selected information to the model parameters, thereby reducing network traffic and maintaining model reliability.
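The merge step summarized above (select updates, exclude outliers, add an average to the model parameters) might look roughly like the following. This is only an illustrative sketch: the patent summary does not state how outliers are identified, so the rule used here (Euclidean distance from the coordinate-wise median, with a hypothetical `max_distance` cutoff) is an assumption, as are the function and parameter names.

```python
from statistics import median


def merge_updates(params, updates, max_distance=1.0):
    """Add the average of the non-outlier updates to the model parameters.

    The outlier rule (distance from the coordinate-wise median) is an
    assumption for illustration and is not taken from the patent.
    """
    center = [median(column) for column in zip(*updates)]

    def distance(update):
        return sum((a - b) ** 2 for a, b in zip(update, center)) ** 0.5

    selected = [u for u in updates if distance(u) <= max_distance]
    if not selected:
        return list(params)  # nothing trustworthy to merge
    average = [sum(column) / len(selected) for column in zip(*selected)]
    return [p + delta for p, delta in zip(params, average)]
```

Averaging is one of the three merge options the summary lists; a sum or weighted sum would replace only the `average` line.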

Energy Efficiency and Thermal Management in AI Systems

Energy efficiency and thermal management are critical aspects of AI hardware design, particularly in the context of throttle body applications. As AI systems become more complex and powerful, they generate significant amounts of heat, which can lead to performance degradation and increased energy consumption. To address these challenges, innovative approaches to energy efficiency and thermal management are being developed and implemented.

One key strategy for improving energy efficiency in AI hardware is the use of advanced power management techniques. These include dynamic voltage and frequency scaling (DVFS), which allows the system to adjust its operating parameters based on workload demands. By reducing power consumption during periods of low activity, DVFS can significantly improve overall energy efficiency. Additionally, power gating techniques are employed to shut down inactive components, further reducing power consumption.
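A minimal sketch of the DVFS idea follows, assuming the standard approximation that dynamic power scales with voltage squared times frequency. The operating points and function names are hypothetical; real devices expose their own tables of supported frequency and voltage pairs.

```python
# Hypothetical operating points: (frequency in MHz, voltage in V).
OPERATING_POINTS = [(600, 0.70), (1000, 0.85), (1400, 1.00)]


def relative_dynamic_power(freq_mhz, volts):
    """Dynamic power up to a constant factor, using P ~ V^2 * f."""
    return volts ** 2 * freq_mhz


def select_operating_point(utilization, points=OPERATING_POINTS):
    """Pick the slowest point whose frequency covers the demanded load.

    utilization is the demanded fraction (0.0 to 1.0) of peak throughput.
    """
    max_freq = max(freq for freq, _ in points)
    demand_mhz = utilization * max_freq
    for freq, volts in sorted(points):
        if freq >= demand_mhz:
            return freq, volts
    return max(points)  # demand exceeds every point: run at the fastest
```

Because voltage drops along with frequency, the lowest point here draws well under half the dynamic power of the highest one, which is why DVFS saves more than frequency scaling alone.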

Thermal management in AI systems is equally crucial, as excessive heat can lead to reduced performance and reliability. Advanced cooling solutions, such as liquid cooling and phase-change materials, are being integrated into AI hardware designs to dissipate heat more effectively. These solutions allow for higher power densities and improved thermal performance compared to traditional air cooling methods.

The development of energy-efficient AI accelerators is another area of focus. These specialized processors are designed to perform AI computations with minimal energy consumption. By optimizing the architecture for specific AI workloads, these accelerators can achieve significant improvements in performance per watt compared to general-purpose processors.

In the context of throttle body applications, energy efficiency and thermal management are particularly important due to the potential for high-frequency, real-time processing requirements. Implementing efficient power management and cooling solutions in these systems can help maintain optimal performance while minimizing energy consumption and heat generation.

Furthermore, the integration of AI-driven energy management systems is emerging as a promising approach. These systems use machine learning algorithms to predict and optimize power consumption based on historical data and current operating conditions. By continuously adapting to changing workloads and environmental factors, AI-driven energy management can achieve substantial improvements in overall system efficiency.
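As a very small stand-in for the learned predictors described above, the sketch below forecasts the next power draw with an exponentially weighted moving average of past samples and clips it to a power budget. The smoothing factor `alpha`, the function names, and the budget are assumptions for illustration; an actual system would likely use a richer model trained on workload and environmental data.

```python
def ewma_forecast(power_samples_w, alpha=0.5):
    """Forecast the next power draw (watts) from historical samples."""
    if not power_samples_w:
        raise ValueError("need at least one power sample")
    forecast = power_samples_w[0]
    for sample in power_samples_w[1:]:
        # Recent samples weigh more; older history decays geometrically.
        forecast = alpha * sample + (1 - alpha) * forecast
    return forecast


def recommend_cap(power_samples_w, budget_w, alpha=0.5):
    """Return a power cap: the forecast demand, clipped to the budget."""
    return min(ewma_forecast(power_samples_w, alpha), budget_w)
```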

As AI hardware continues to evolve, the focus on energy efficiency and thermal management will remain paramount. Future developments in this area are likely to include advanced materials for improved heat dissipation, more sophisticated power management algorithms, and the integration of AI-driven optimization techniques across all aspects of system design and operation.

Standardization Efforts for AI Hardware Throttling

Standardization efforts for AI hardware throttling have gained significant momentum in recent years, driven by the need for efficient and consistent performance management across diverse AI systems. These initiatives aim to establish common protocols and specifications for throttling mechanisms in AI hardware, ensuring interoperability and optimized resource utilization.

Several industry consortia and standards organizations have taken the lead in developing frameworks for AI hardware throttling. The Open Compute Project (OCP) has been instrumental in promoting open standards for data center hardware, including AI accelerators. Their efforts have extended to defining specifications for power management and throttling interfaces, enabling better integration of AI hardware into existing data center infrastructures.

The IEEE has also been actively involved in standardization efforts, with working groups focused on developing guidelines for AI hardware performance management. These groups are addressing challenges such as defining common metrics for measuring AI workload efficiency and establishing protocols for dynamic throttling based on workload characteristics and system conditions.

Collaboration between hardware manufacturers and cloud service providers has been crucial in driving standardization efforts. Companies like NVIDIA, Intel, and AMD have been working closely with major cloud platforms to develop and implement consistent throttling mechanisms across different AI hardware architectures. This cooperation has led to the emergence of industry-wide best practices for managing AI workloads and optimizing hardware utilization.

One key area of focus in standardization efforts has been the development of APIs and software interfaces for throttling control. These interfaces aim to provide a unified way for operating systems and applications to interact with AI hardware throttling mechanisms, regardless of the underlying hardware architecture. This abstraction layer enables more flexible and portable AI software development, as well as improved resource management in heterogeneous computing environments.
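To illustrate what such an abstraction layer could look like, the sketch below defines a hypothetical vendor-neutral interface and a policy function that works against it. `ThrottleDevice`, `SimulatedAccelerator`, and `enforce_thermal_limit` are invented names for this example and do not correspond to any published standard or vendor API.

```python
from abc import ABC, abstractmethod


class ThrottleDevice(ABC):
    """Hypothetical vendor-neutral interface; not an existing standard API."""

    name: str

    @abstractmethod
    def temperature_c(self) -> float:
        """Current device temperature in degrees Celsius."""

    @abstractmethod
    def set_power_limit_w(self, watts: float) -> None:
        """Request a new power limit in watts."""


class SimulatedAccelerator(ThrottleDevice):
    """In-memory stand-in so the sketch runs without real hardware."""

    def __init__(self, name: str, temp_c: float, limit_w: float):
        self.name = name
        self._temp_c = temp_c
        self.limit_w = limit_w

    def temperature_c(self) -> float:
        return self._temp_c

    def set_power_limit_w(self, watts: float) -> None:
        self.limit_w = watts


def enforce_thermal_limit(devices, max_temp_c, reduced_limit_w):
    """Lower the power limit on every device above max_temp_c; return their names."""
    throttled = []
    for device in devices:
        if device.temperature_c() > max_temp_c:
            device.set_power_limit_w(reduced_limit_w)
            throttled.append(device.name)
    return throttled
```

The policy code never refers to a specific hardware type, which is the portability benefit the paragraph above describes: a GPU, FPGA, or ASIC backend would only need to implement the two methods.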

Efforts are also underway to standardize telemetry and monitoring protocols for AI hardware throttling. These standards define common formats and methods for collecting and reporting performance data, power consumption, and thermal metrics. By establishing a consistent approach to hardware monitoring, these standards facilitate better decision-making for throttling and workload distribution across AI systems.
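A common telemetry format could be as simple as a fixed record that every device reports in the same units. The sketch below is an assumption-laden example: the field names, units, and JSON encoding are hypothetical choices for illustration, not part of any published telemetry standard.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class TelemetrySample:
    """Hypothetical telemetry record; field names and units are illustrative."""
    device_id: str
    timestamp_s: float     # seconds since the Unix epoch
    power_w: float         # instantaneous power draw in watts
    temperature_c: float   # die temperature in degrees Celsius
    utilization: float     # fraction of peak throughput, 0.0 to 1.0


def encode(sample: TelemetrySample) -> str:
    """Serialize a sample to JSON with a stable key order."""
    return json.dumps(asdict(sample), sort_keys=True)


def decode(text: str) -> TelemetrySample:
    """Rebuild a sample from its JSON form."""
    return TelemetrySample(**json.loads(text))
```

Fixing units in the field names (`power_w`, `temperature_c`) is a deliberate choice here: it lets a scheduler compare samples from different accelerators without per-vendor conversion logic.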

As standardization efforts progress, there is growing emphasis on incorporating energy efficiency and sustainability considerations into AI hardware throttling protocols. This includes developing standards for adaptive throttling based on renewable energy availability and defining metrics for assessing the environmental impact of AI workloads. These initiatives align with broader industry goals of reducing the carbon footprint of AI computations and promoting more sustainable computing practices.
