Systems and methods for depth-assisted perspective distortion correction

A depth-assisted perspective-correction technology, applied in the fields of 3D image data details, instruments, image enhancement, etc., addresses the perceived distortion in close-range portrait photographs, such as the magnified size of the nose and chin, and reduces the appearance of perspective distortion.

Embodiment Construction

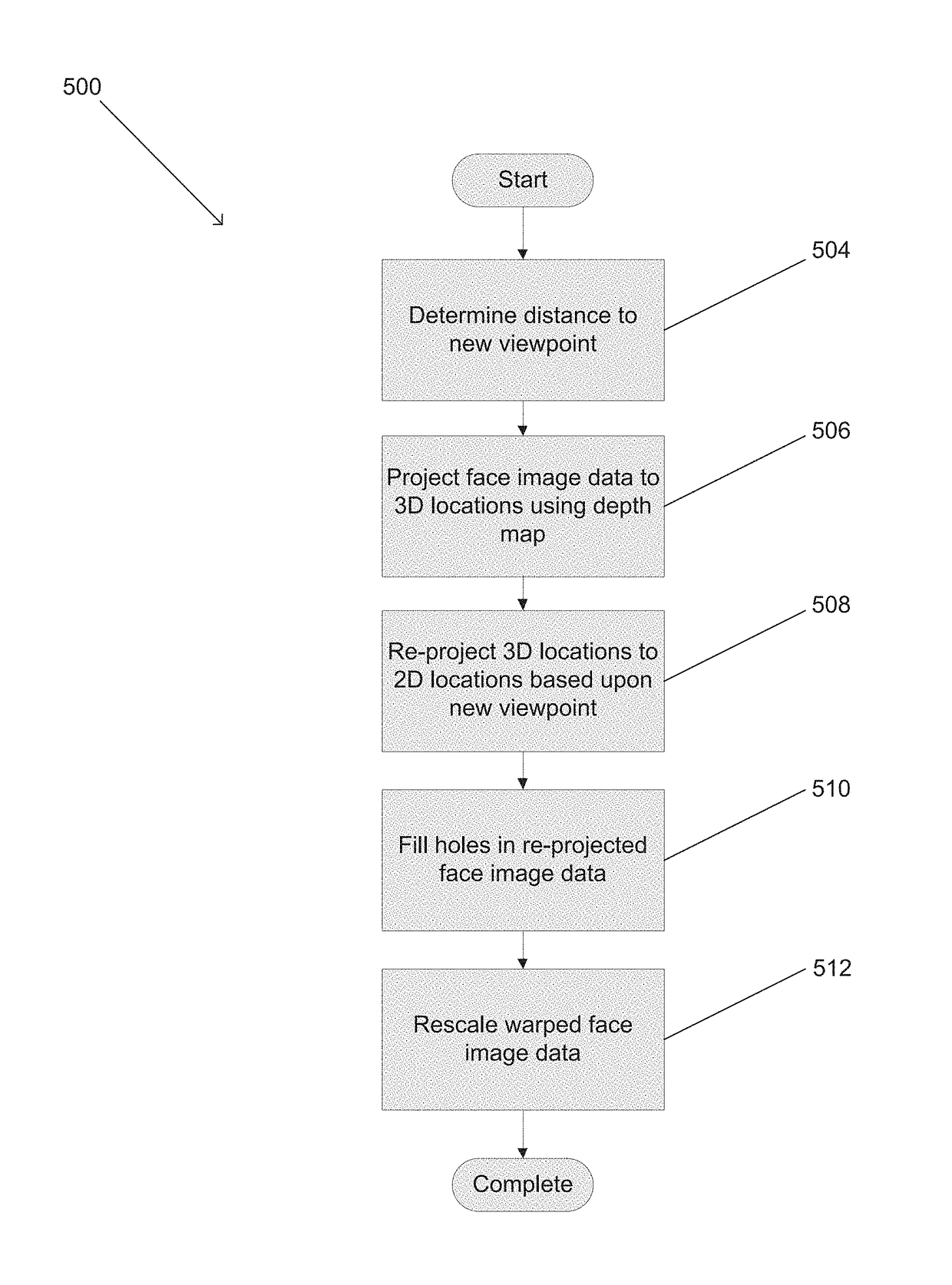

[0033] Turning now to the drawings, systems and methods for automatic depth-assisted perspective distortion correction in accordance with embodiments of the invention are illustrated. In many embodiments, a face is detected within an image for which a depth map is available. The depth map can be used to segment the face from the background of the image and warp the pixels of the segmented face to rerender the face from a viewpoint at a desired distance greater than the distance from which the camera captured the image of the face. In this way, the perceived perspective distortion in the face can be removed and the rerendered face composited with the image background. In several embodiments, the image background is inpainted to fill any holes created by the segmentation process. In many embodiments, the shifts in pixel locations between the original image and the perspective distortion corrected image are also applied to the original depth map to generate a depth map for the perspecti...
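The warp described above can be illustrated with a minimal sketch. It is not taken from the patent text: it assumes an ideal pinhole camera, a virtual viewpoint moved straight back along the optical axis, and a focal length rescaled so that points at a chosen reference depth keep their original image position. The function name `corrected_pixel_position` and the parameters `ref_depth` and `extra_distance` are hypothetical, introduced only for illustration.

```python
def corrected_pixel_position(u, v, depth, cx, cy, ref_depth, extra_distance):
    """Return the image position of pixel (u, v) after moving the virtual
    camera back by extra_distance along the optical axis.

    Assumptions (illustrative, not from the source): pinhole projection,
    principal point at (cx, cy), and a focal length rescaled so that points
    at ref_depth do not move. All distances share one unit, e.g. metres.
    """
    if depth <= 0 or ref_depth <= 0:
        raise ValueError("depths must be positive")
    if extra_distance < 0:
        raise ValueError("extra_distance must be non-negative")

    # Moving the camera back shrinks a point at `depth` by depth / (depth + d).
    shrink = depth / (depth + extra_distance)
    # Rescaling the focal length undoes that shrink at the reference depth.
    zoom = (ref_depth + extra_distance) / ref_depth
    scale = shrink * zoom

    # Apply the combined scale relative to the principal point.
    return cx + (u - cx) * scale, cy + (v - cy) * scale


def corrected_depth(depth, extra_distance):
    """Depth of the same point as seen from the moved-back viewpoint."""
    return depth + extra_distance
```

Under these assumptions, a pixel nearer than the reference depth (for example on the nose) moves toward the principal point, and a pixel farther away (for example near the ears) moves outward, which is the direction of correction the paragraph describes. With `cx = cy = 0`, `ref_depth = 0.5` and `extra_distance = 1.0`, a pixel at `(100, 50)` with depth `0.6` lands at approximately `(112.5, 56.25)`. Segmentation, hole filling and compositing are outside the scope of this sketch.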
