SLAM autonomous navigation and identification method in a closed scene

An autonomous navigation and identification technique, applied in the field of image processing, that offers strong practicability with simple computation.

Examples

Embodiment 1

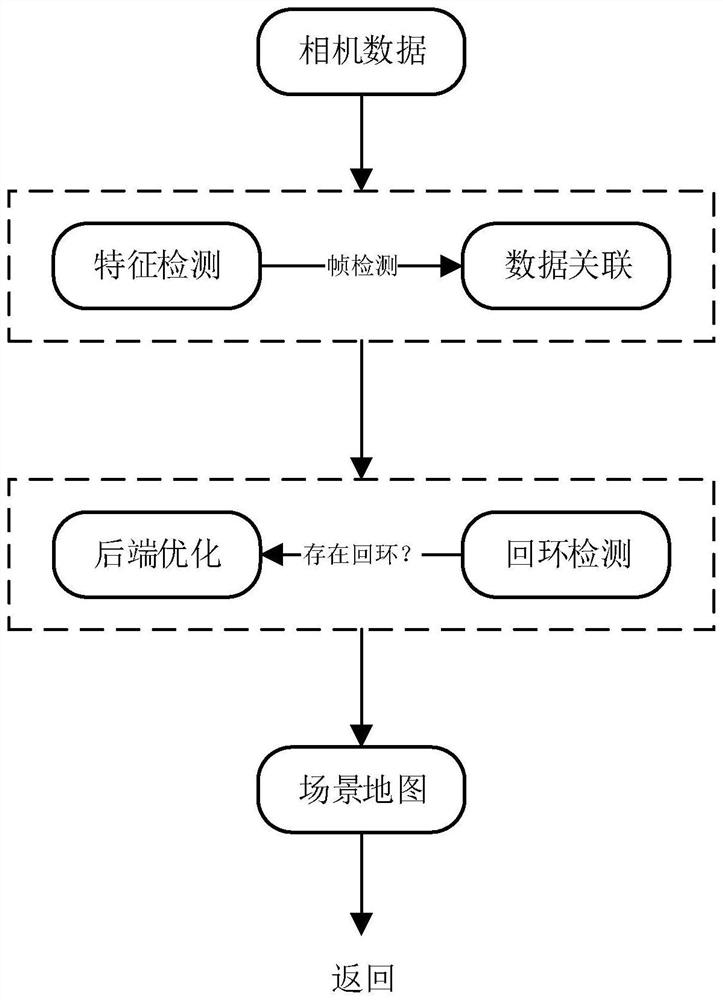

[0033] The present invention is further described below with reference to Figure 1. A SLAM autonomous navigation and identification method in a closed scene comprises the following steps:

[0034] Step 1: Acquire external environment data. The autonomous robot obtains external environment data through its own camera.

[0035] Step 2: Feature detection. The acquired external environment data is input to the SLAM feature extraction module, which uses a Vision Transformer model to semantically detect each object in the external environment and, at the same time, extract the features of each object.
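The first stage of any Vision Transformer is to cut the input image into fixed-size patches and flatten each patch into a token vector. The source does not give the model's configuration, so the following is only a minimal sketch of that patch-splitting stage in plain Python. The function name `split_into_patches`, the patch size, and the toy 4 x 4 frame are illustrative assumptions, not details from the patent, and the learned projection, attention layers, and detection head of a real model are omitted.

```python
from typing import List

Image = List[List[float]]  # grayscale image stored as rows of pixel values


def split_into_patches(image: Image, patch_size: int) -> List[List[float]]:
    """Cut an H x W image into non-overlapping patch_size x patch_size
    patches and flatten each patch row by row into one vector (one token).

    Hypothetical helper for illustration; not taken from the patent.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image sides must be multiples of patch_size")
    patches = []
    # Walk the image in patch-sized strides, top to bottom, left to right.
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [
                image[top + dy][left + dx]
                for dy in range(patch_size)
                for dx in range(patch_size)
            ]
            patches.append(patch)
    return patches


# Toy 4 x 4 "frame" whose pixel value is row * 4 + column (assumed data).
frame = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
tokens = split_into_patches(frame, patch_size=2)
```

In a full model each token would then be linearly projected, given a position embedding, and passed through the transformer encoder; a pretrained Vision Transformer from an existing library would normally replace this hand-written stage.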

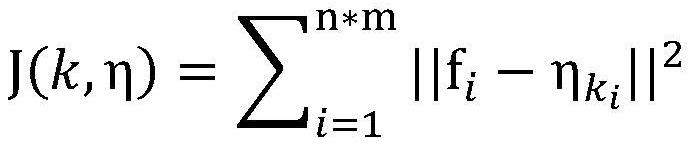

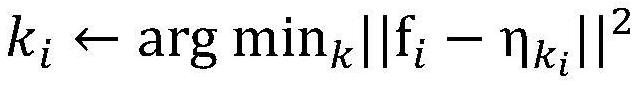

[0036] Step 3: Data association. The SLAM data association module tracks the features common to images in different frames, matches identical features through inter-frame correlation clustering, and then judges the movement of the object; for the successfully matched features, the controller of the autonomous robot correc...
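The clustering-based matching in Step 3 can be illustrated with a small sketch. The source text is truncated and does not specify the clustering algorithm, so this example assumes a plain k-means over the pooled descriptors of two frames, then pairs each previous-frame feature with its nearest current-frame feature in the same cluster. The names `kmeans` and `associate`, the deterministic initialisation, and the toy descriptors are all hypothetical.

```python
from typing import List, Tuple

Vector = List[float]


def sq_dist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two descriptors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points: List[Vector], k: int, iterations: int = 10) -> List[int]:
    """Plain k-means; returns the cluster label of every point.

    Centroids start at the first k points so the run is deterministic
    (an assumption made for this sketch). Requires k <= len(points).
    """
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: nearest centroid for each point.
        labels = [
            min(range(k), key=lambda c: sq_dist(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels


def associate(prev: List[Vector], curr: List[Vector], k: int) -> List[Tuple[int, int]]:
    """Cluster the descriptors of both frames together, then match each
    previous-frame feature to its nearest current-frame feature that
    landed in the same cluster. Returns (prev_index, curr_index) pairs."""
    labels = kmeans(prev + curr, k)
    prev_labels, curr_labels = labels[:len(prev)], labels[len(prev):]
    matches = []
    for i, (desc, lab) in enumerate(zip(prev, prev_labels)):
        candidates = [j for j, other in enumerate(curr_labels) if other == lab]
        if candidates:
            best = min(candidates, key=lambda cand: sq_dist(desc, curr[cand]))
            matches.append((i, best))
    return matches


# Toy descriptors (assumed data): the same two objects seen in two frames,
# listed in a different order in the second frame.
prev_frame = [[0.0, 0.0], [10.0, 10.0]]
curr_frame = [[10.2, 9.9], [0.1, -0.1]]
matches = associate(prev_frame, curr_frame, k=2)
```

Features with no counterpart in their cluster are simply left unmatched here; how the patented method handles unmatched features is not recoverable from the truncated text.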

Embodiment 2

[0060] This embodiment differs from Embodiment 1 in that the cluster value is set to k=100.
