Sequence recommendation method and device based on multi-scale interest dynamic hierarchy Transformer

The invention relates to recommendation methods and multi-scale techniques, classified under instruments, data processing applications, and business. It addresses the problem of low recommendation accuracy, with the stated effect of improving accuracy and better meeting users' individual needs.


Examples

Embodiment 1

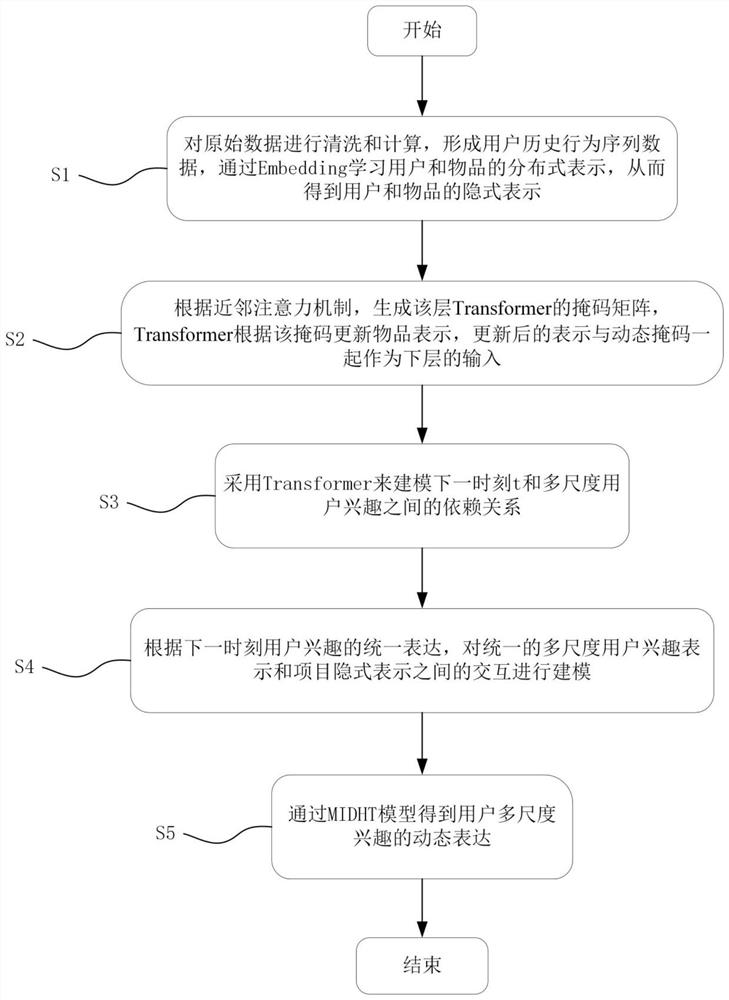

[0052] An embodiment of the present invention provides a sequence recommendation method based on a multi-scale interest dynamic hierarchy Transformer, including:

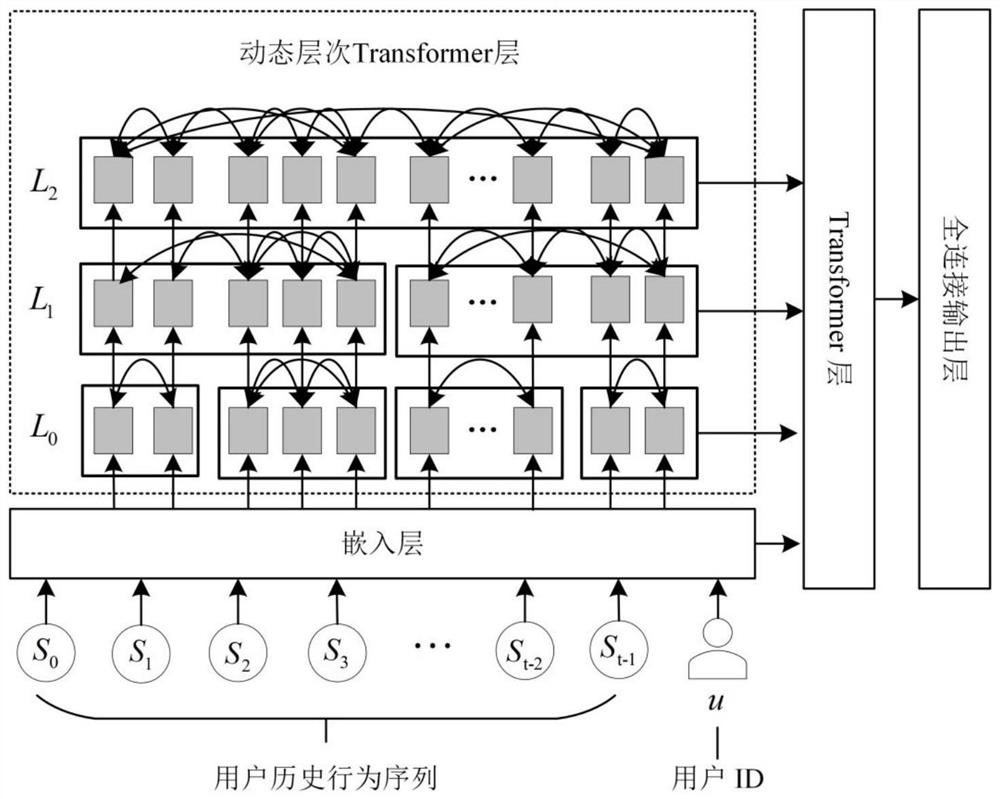

[0053] S1: Given an input user historical behavior sequence that records the interactions between a user and items, learn distributed representations of the user and the items;
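As an illustration of step S1, the sketch below treats the "distributed representation" as a plain embedding lookup. This excerpt does not say how the representations are learned, so the randomly initialized tables, the dimension of 8, and the names `make_embedding_table` and `embed_sequence` are all assumptions made for the example; in a real system the table entries would be trained parameters.

```python
import random

def make_embedding_table(num_rows, dim, seed=0):
    """Build a num_rows x dim table of small random vectors.

    Assumption: random values stand in for learned embedding weights.
    """
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(num_rows)]

def embed_sequence(item_table, user_table, user_id, item_ids):
    """Look up the user vector and one vector per item in the behavior sequence."""
    user_vec = user_table[user_id]
    item_vecs = [item_table[i] for i in item_ids]
    return user_vec, item_vecs

# Hypothetical sizes: 100 items, 10 users, 8-dimensional embeddings.
item_table = make_embedding_table(num_rows=100, dim=8, seed=1)
user_table = make_embedding_table(num_rows=10, dim=8, seed=2)

# A behavior sequence is an ordered list of item ids the user interacted with.
user_vec, item_vecs = embed_sequence(
    item_table, user_table, user_id=3, item_ids=[5, 17, 42, 8]
)
```

The order of `item_vecs` follows the order of the behavior sequence, which is what the later steps rely on when they talk about neighboring blocks.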

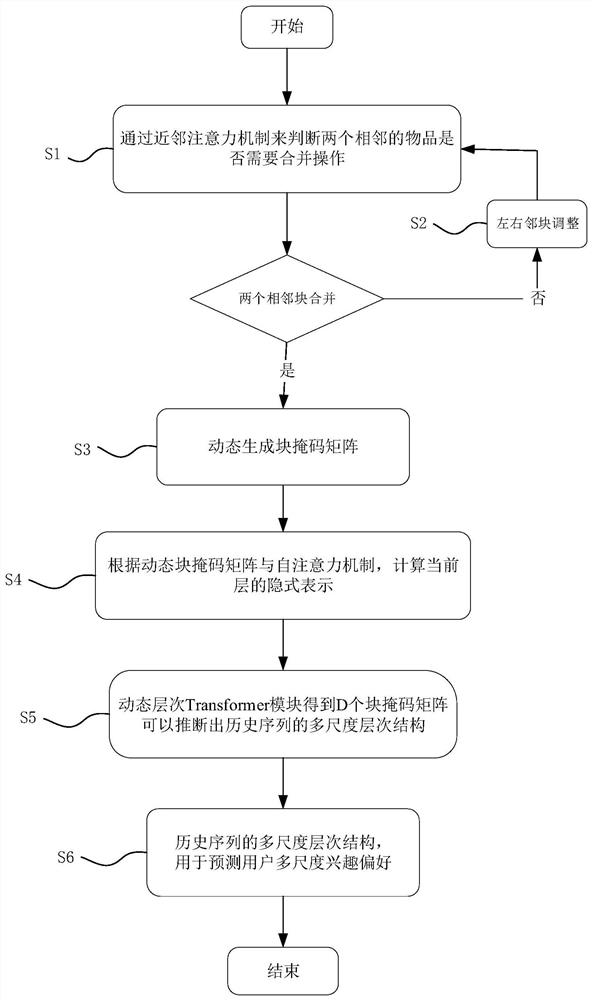

[0054] S2: Based on the distributed representations of the user and the items, merge neighboring blocks layer by layer from bottom to top using a neighbor attention mechanism, obtaining a multi-scale hierarchical representation of user interests for the user's historical behavior sequence;
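The bottom-up merging in step S2 can be pictured with a small sketch. The excerpt does not give the exact merging rule, so everything here is an assumption: at each level the most similar adjacent pair of blocks (by scaled dot product) is merged, and the merged block is a softmax-weighted average of the two. The names `merge_pair` and `build_hierarchy` are hypothetical.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def merge_pair(a, b):
    """Merge two neighbor blocks with attention weights.

    Assumption: the query is the mean of the two blocks; the patent's
    actual neighbor attention may be parameterized differently.
    """
    d = len(a)
    query = [(x + y) / 2 for x, y in zip(a, b)]
    w_a, w_b = softmax([dot(query, a) / math.sqrt(d), dot(query, b) / math.sqrt(d)])
    return [w_a * x + w_b * y for x, y in zip(a, b)]

def build_hierarchy(blocks):
    """Return every level of the hierarchy, from single items up to one block."""
    current = list(blocks)
    levels = [current]
    while len(current) > 1:
        d = len(current[0])
        # Score each adjacent pair and merge the most similar neighbors.
        scores = [dot(current[i], current[i + 1]) / math.sqrt(d)
                  for i in range(len(current) - 1)]
        i = max(range(len(scores)), key=scores.__getitem__)
        merged = merge_pair(current[i], current[i + 1])
        current = current[:i] + [merged] + current[i + 2:]
        levels.append(current)
    return levels

# Toy item vectors: two items near one direction, two near another.
items = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
levels = build_hierarchy(items)
```

Each entry of `levels` is one scale: the first holds the individual items, and each later level has one block fewer, so taken together they form a multi-scale view of the same sequence.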

[0055] S3: Using the self-attention mechanism of a Transformer layer, model the relationship between the user's interest at the next moment and the multi-scale hierarchical representation of user interests from the user's historical behavior sequence, obtaining a representation of the user's interest at the next moment;
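To make the attention step in S3 concrete, here is a minimal single-head scaled dot-product attention in which one query vector attends over the multi-scale interest vectors. It is a simplified sketch, not the patented layer: a full Transformer layer also has learned query/key/value projections, multiple heads, a feed-forward sublayer, residual connections, and normalization, all omitted here. The choice of query and the name `attend` are assumptions.

```python
import math

def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector.

    Returns the attention-weighted sum of values and the weights themselves.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    out = [sum(w * v[j] for w, v in zip(weights, values))
           for j in range(len(values[0]))]
    return out, weights

# Toy multi-scale interest vectors (e.g. blocks gathered from several levels).
multi_scale = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

# Assumption: the query stands for the position whose next interest is predicted.
query = [1.0, 0.0]
next_interest, weights = attend(query, multi_scale, multi_scale)
```

The weights show how much each scale contributes to the predicted interest; here the block aligned with the query receives the largest weight.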

[0056] S4: Concatenate the user interest...

Embodiment 2

[0108] Based on the same inventive concept, this embodiment provides a sequence recommendation device based on a multi-scale interest dynamic hierarchy Transformer, including:

[0109] An embedding module, configured to learn distributed representations of the user and the items from an input user historical behavior sequence that records the interactions between the user and items;

[0110] A dynamic hierarchical Transformer module, configured to merge neighboring blocks layer by layer from bottom to top through a neighbor-block attention mechanism, based on the distributed representations of the user and the items, to obtain a multi-scale hierarchical representation of user interests for the user's historical behavior sequence;

[0111] A Transformer module, configured to model, through the self-attention mechanism of a Transformer layer, the relationship between the user's interest at the next moment and the multi-scale hierarchical representation of user interests from the user's historical behavior sequence, and o...
