Character virtual clothes-changing method, terminal device and storage medium

A technology involving characters and clothing, applied in the field of computer vision, that addresses the poor transfer of clothing details and the high cost that hinders large-scale deployment and limits applications.


Examples

Embodiment 1

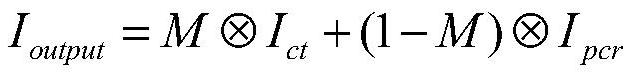

[0045] This embodiment of the present invention provides a character virtual clothes-changing method. Figure 1 is a flow chart of the method according to this embodiment. The method includes the following steps:

[0046] S1: An affine transformation is applied to the character picture $I_p$ to obtain the character affine-transformation picture $I_t$.
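Step S1 can be illustrated with a short Python sketch. This excerpt does not give the affine parameters or the interpolation used, so the 2x3 matrix `m`, the helper names `invert_affine` and `affine_warp`, and the nearest-neighbour sampling are assumptions chosen for illustration. Each output pixel of $I_t$ is looked up in $I_p$ through the inverse transform, which avoids holes in the output.

```python
import math

# Hypothetical sketch of step S1: warp a grayscale picture I_p (list of rows)
# with a 2x3 affine matrix to obtain I_t. The affine parameters and the
# nearest-neighbour sampling are assumptions, not taken from the patent.


def invert_affine(m):
    """Invert a 2x3 affine matrix [[a, b, tx], [c, d, ty]]."""
    (a, b, tx), (c, d, ty) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("affine matrix is singular")
    ia, ib = d / det, -b / det
    ic, id_ = -c / det, a / det
    # Inverse translation is -A^{-1} t.
    itx = -(ia * tx + ib * ty)
    ity = -(ic * tx + id_ * ty)
    return [[ia, ib, itx], [ic, id_, ity]]


def affine_warp(image, m, fill=0):
    """Return I_t with I_t(x, y) = I_p(M^{-1} [x, y, 1]^T), nearest-neighbour sampled."""
    h, w = len(image), len(image[0])
    inv = invert_affine(m)
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Map the output pixel back into the source picture.
            sx = inv[0][0] * x + inv[0][1] * y + inv[0][2]
            sy = inv[1][0] * x + inv[1][1] * y + inv[1][2]
            ix, iy = math.floor(sx + 0.5), math.floor(sy + 0.5)
            if 0 <= ix < w and 0 <= iy < h:
                out[y][x] = image[iy][ix]
    return out


# Usage: shift a 2x3 picture one pixel to the right.
i_p = [[1, 2, 3], [4, 5, 6]]
i_t = affine_warp(i_p, [[1, 0, 1], [0, 1, 0]])
```

Inverse mapping is the usual design choice for image warping; practical code would normally call a library routine such as OpenCV's `cv2.warpAffine` rather than a Python loop.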

[0047] S2: Features are extracted separately from the character picture $I_p$ and the character affine-transformation picture $I_t$, and, using different pooling mechanisms and a feature splicing (concatenation) mechanism, the features of the two pictures are converted into optimized features.
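The pooling and splicing in step S2 are not specified further in this excerpt. One hypothetical reading, sketched below in Python, pools each feature map of $I_p$ and $I_t$ with two different mechanisms (global average pooling and global max pooling) and splices (concatenates) the resulting per-channel descriptors into one vector. The function names and the choice of these two pooling types are assumptions, not the patented design.

```python
# Hypothetical sketch of step S2: fuse features of I_p and I_t with two
# pooling mechanisms followed by concatenation ("splicing").
# A feature map is a list of channels; each channel is a 2-D list (H x W).


def global_avg_pool(fmap):
    """Average each channel over its spatial positions: one value per channel."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in fmap]


def global_max_pool(fmap):
    """Take the maximum of each channel over its spatial positions."""
    return [max(max(row) for row in ch) for ch in fmap]


def fuse_features(feat_p, feat_t):
    """Concatenate avg- and max-pooled descriptors of both pictures."""
    fused = []
    for fmap in (feat_p, feat_t):
        fused += global_avg_pool(fmap) + global_max_pool(fmap)
    return fused


# Usage: one-channel 2x2 feature maps for I_p and I_t.
feat_p = [[[1, 2], [3, 4]]]
feat_t = [[[0, 0], [0, 8]]]
fused = fuse_features(feat_p, feat_t)  # [avg_p, max_p, avg_t, max_t]
```

Average pooling summarizes the overall response of a channel while max pooling keeps its strongest activation, so concatenating both retains complementary information about each picture.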

[0048] In this embodiment, feature extraction is performed with a U-Net network; other methods may be used in other embodiments and are not limited here. People picture $I_p$ and character aff...
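For readers unfamiliar with U-Net, the following Python sketch shows its characteristic data flow at a single resolution level: a contracting path (2x2 max pooling), an expanding path (2x upsampling), and a skip connection that concatenates encoder features with decoder features along the channel axis. Learned convolutions are replaced by a hypothetical ReLU placeholder, so this is a structural illustration only; the depth, channel counts and layers of the network used in this embodiment are not given in the excerpt.

```python
# Illustrative U-Net-style data flow (one level) on single-channel maps.
# Assumption: trainable convolutions are replaced by a fixed ReLU placeholder
# so that only the structure is shown:
# encoder -> 2x2 max pool -> bottleneck -> 2x upsample -> skip concatenation.


def relu(ch):
    """Placeholder for a learned convolution block."""
    return [[max(0, v) for v in row] for row in ch]


def max_pool2(ch):
    """2x2 max pooling with stride 2 (assumes even height and width)."""
    return [[max(ch[y][x], ch[y][x + 1], ch[y + 1][x], ch[y + 1][x + 1])
             for x in range(0, len(ch[0]), 2)]
            for y in range(0, len(ch), 2)]


def upsample2(ch):
    """Nearest-neighbour 2x upsampling."""
    out = []
    for row in ch:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def unet_one_level(image):
    """Return a 2-channel map: [encoder skip features, decoder features]."""
    enc = relu(image)                   # encoder stage
    bottleneck = relu(max_pool2(enc))   # contracting path
    dec = upsample2(bottleneck)         # expanding path
    return [enc, dec]                   # skip connection: channel concatenation


# Usage on a 2x4 single-channel picture.
features = unet_one_level([[1, -2, 3, 4], [5, 6, -7, 8]])
```

A real U-Net repeats this pattern over several levels and applies trainable convolutions at every stage; the skip connections are what let it preserve fine spatial detail, which matters for transferring clothing details.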

Embodiment 2

[0076] The present invention also provides a character virtual clothes-changing terminal device, including a memory, a processor, and a computer program stored in the memory and executable on the processor. When the processor executes the computer program, the steps of the method in Embodiment 1 above are implemented.
