A Navigation Method Based on Real 3D Map

A navigation method based on real-scene three-dimensional map technology, applied in the field of navigation. It addresses the problems that existing representations of a route are not easily made accurate and complete, and that existing navigation modes cannot provide an in-depth local experience or meet users' navigation needs. It achieves the effect of eliminating the time of the video.

Examples

Embodiment 1

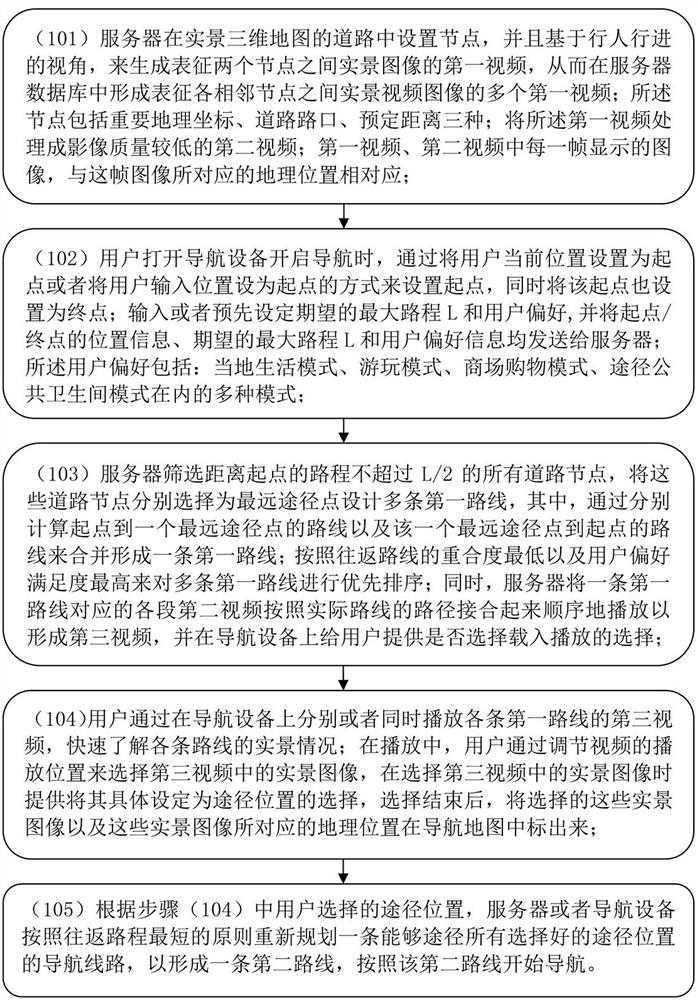

[0026] Embodiment 1: As shown in Figure 1, a navigation method includes the following steps:

[0027] (101) The server sets nodes on the roads of the real-scene 3D map and, from the perspective of a pedestrian, generates a first video representing the real-scene imagery between each pair of adjacent nodes, so that the server database holds a plurality of first videos covering the real scene between adjacent nodes. The nodes are of three kinds: important geographical coordinates, road intersections, and points set at predetermined distances. Each first video is processed into a second video with lower image quality. In both the first video and the second video, the image displayed in each frame corresponds to the geographic location that the frame depicts;

[0028] (102) When the user turns on the navigation device and starts navigation, the starting point is set either by using the user's current location as the starting point or by setting th...
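The data that step (101) asks the server to keep can be sketched as follows. This is only an illustrative sketch, not the patent's implementation: the names `NodeKind`, `Node`, `SegmentVideo`, `build_segment`, and `frame_at`, the planar metre coordinates, the placeholder URIs, and the assumption that frames are evenly spaced along a straight line between two nodes are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from math import hypot


class NodeKind(Enum):
    """The three kinds of node named in step (101)."""
    IMPORTANT_COORDINATE = "important geographical coordinate"
    INTERSECTION = "road intersection"
    PREDETERMINED_DISTANCE = "predetermined distance"


@dataclass(frozen=True)
class Node:
    node_id: str
    kind: NodeKind
    x: float  # assumed planar coordinate in metres (the source fixes no coordinate system)
    y: float


@dataclass
class SegmentVideo:
    """Videos for the road section between two adjacent nodes."""
    start: Node
    end: Node
    frame_positions: list[tuple[float, float]]  # one location per frame
    first_video_uri: str   # full-quality video (hypothetical storage key)
    second_video_uri: str  # lower-quality version of the same frames

    def frame_at(self, x: float, y: float) -> int:
        """Return the index of the frame whose location is closest to (x, y)."""
        return min(
            range(len(self.frame_positions)),
            key=lambda i: hypot(self.frame_positions[i][0] - x,
                                self.frame_positions[i][1] - y),
        )


def build_segment(start: Node, end: Node, frame_count: int) -> SegmentVideo:
    """Assign each frame a location, assuming even spacing on a straight road."""
    if frame_count < 2:
        raise ValueError("a segment video needs at least two frames")
    positions = [
        (start.x + (end.x - start.x) * i / (frame_count - 1),
         start.y + (end.y - start.y) * i / (frame_count - 1))
        for i in range(frame_count)
    ]
    key = f"{start.node_id}-{end.node_id}"
    return SegmentVideo(start, end, positions,
                        first_video_uri=f"first/{key}",
                        second_video_uri=f"second/{key}")


a = Node("a", NodeKind.INTERSECTION, 0.0, 0.0)
b = Node("b", NodeKind.PREDETERMINED_DISTANCE, 100.0, 0.0)
segment = build_segment(a, b, frame_count=11)
frame_index = segment.frame_at(42.0, 3.0)
```

Because the first and second videos share one list of frame locations, a frame index found for a given position applies to either quality level.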

Embodiment 2

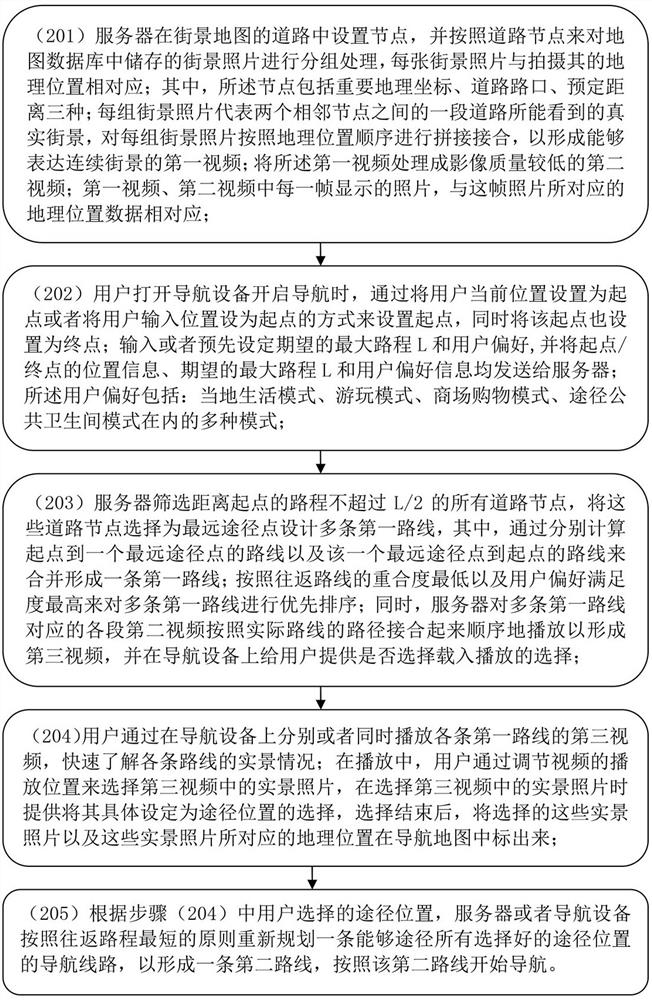

[0034] Embodiment 2: As shown in Figure 2, a navigation method includes the following steps:

[0035] (201) The server sets nodes on the roads of the street view map and groups the street view photos stored in the map database according to the road nodes; each street view photo corresponds to the geographic location where it was taken. The nodes include important geographical coordinates, road intersections, and points set at predetermined distances. Each group of street view photos represents the real street view visible along the section of road between two adjacent nodes, and the photos in each group are spliced together in order of geographic location to form a first video showing a continuous street scene. The first video is processed into a second video with lower image quality. The photos displayed in each frame of the first video and the second video, and geographic location data corresponding to this ...
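The grouping and ordering described in step (201) might look like the sketch below. It is a simplified illustration under stated assumptions, not the patent's procedure: positions are reduced to a single distance along one road in metres, and the names `Photo` and `group_photos_by_segment` are hypothetical. Splicing the ordered photos into an actual video is left out.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class Photo:
    photo_id: str
    distance_m: float  # assumed: where the photo was taken, measured along the road


def group_photos_by_segment(node_distances, photos):
    """Group photos by the road section between adjacent nodes.

    node_distances: ascending positions of the nodes along the road.
    Returns one list per section, each sorted by position so the photos
    can be joined in geographic order.
    """
    node_distances = list(node_distances)
    if len(node_distances) < 2 or node_distances != sorted(node_distances):
        raise ValueError("need at least two nodes in ascending order")

    groups = [[] for _ in range(len(node_distances) - 1)]
    for photo in photos:
        # Ignore photos taken outside the stretch covered by the nodes.
        if not node_distances[0] <= photo.distance_m <= node_distances[-1]:
            continue
        # A photo exactly on an interior node goes to the section that starts
        # there; a photo on the final node goes to the last section.
        index = min(bisect_right(node_distances, photo.distance_m) - 1,
                    len(groups) - 1)
        groups[index].append(photo)

    for group in groups:
        group.sort(key=lambda p: p.distance_m)
    return groups


photos = [
    Photo("p1", 70.0),
    Photo("p2", 10.0),
    Photo("p3", 45.0),
    Photo("p4", 120.0),
    Photo("p5", 200.0),  # beyond the last node, so it is skipped
]
groups = group_photos_by_segment([0.0, 50.0, 120.0], photos)
ordered_ids = [[p.photo_id for p in group] for group in groups]
```

Each inner list of `ordered_ids` is the frame order for one section's first video; the second, lower-quality video would reuse the same order.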

