Robot autonomous tracking method based on vision

An autonomous tracking technology for robots, applied in the fields of instruments, computer parts, and image data processing. It addresses the problem of tracking without path planning and achieves a long tracking distance and strong tracking ability.

Embodiment 1

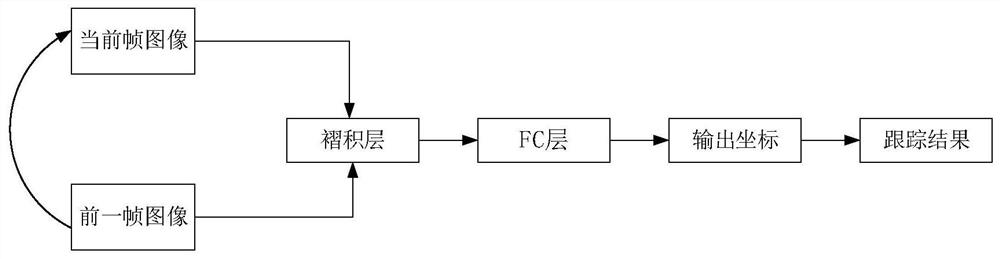

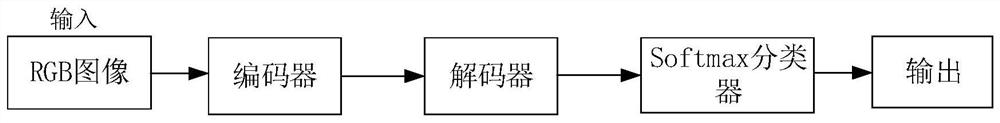

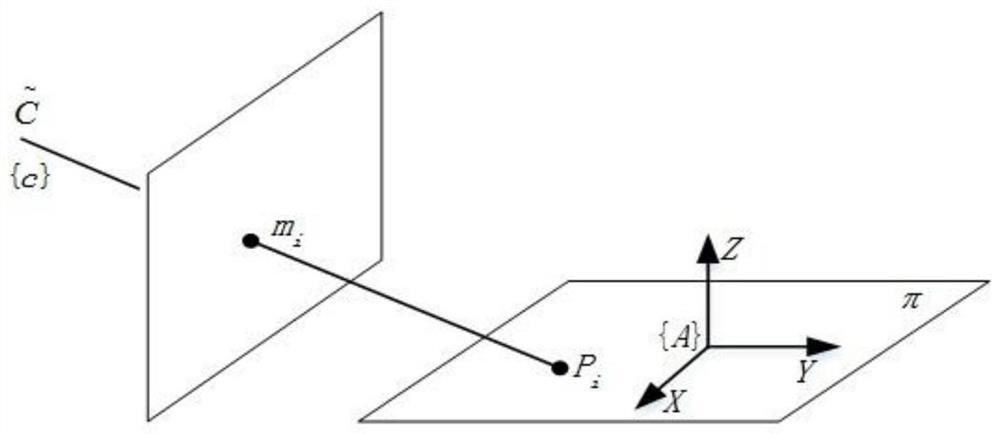

[0030] Embodiment 1: As shown in Figures 1-7, a vision-based robot autonomous tracking method proceeds as follows. First, the tracking target is selected manually, and the CNN input is set to the target together with its surrounding background area. Next, the environment is divided into categories according to the training data set. A local map in polar coordinates is then established with the robot at the center of the polar coordinate system; the conversion relationships among pixel coordinates, plane coordinates, and grid coordinates are established, and the pixels falling in each grid cell are counted to determine which cells are obstacles. The robot's starting grid coordinates are set, the target position is obtained from the target tracking algorithm, and finally the A* algorithm is used to complete the optimal path search. This application uses a tracking model similar to GOTURN: as shown in Figure 1, the CNN model extracts the basic features of the current frame and the previous frame...
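The map-building and path-search steps described above can be illustrated with a minimal Python sketch. It is not the patent's implementation. It assumes that obstacle points have already been converted from pixel coordinates to ground-plane coordinates (in metres, robot at the origin), and it covers only the plane-to-grid conversion and the A* search. The constants `R_STEP`, `N_SECTORS`, and `N_RINGS`, the function names, the 4-neighbor connectivity, and the Euclidean heuristic are all hypothetical choices made for illustration.

```python
import heapq
import math

# Assumed map resolution (hypothetical values, not taken from the patent).
R_STEP = 0.5      # radial width of one ring, in metres
N_SECTORS = 16    # number of angular sectors per ring
N_RINGS = 6       # number of rings in the local map


def plane_to_cell(x, y):
    """Convert a ground-plane point (robot at origin) to a (ring, sector) cell."""
    r = math.hypot(x, y)
    theta = math.atan2(y, x) % (2 * math.pi)
    ring = int(r / R_STEP)
    sector = int(theta / (2 * math.pi) * N_SECTORS) % N_SECTORS
    return ring, sector


def cell_center(cell):
    """Return the ground-plane coordinates of a cell's centre."""
    ring, sector = cell
    r = (ring + 0.5) * R_STEP
    theta = (sector + 0.5) * 2 * math.pi / N_SECTORS
    return r * math.cos(theta), r * math.sin(theta)


def dist(a, b):
    """Euclidean distance between two cell centres (step cost and heuristic)."""
    ax, ay = cell_center(a)
    bx, by = cell_center(b)
    return math.hypot(ax - bx, ay - by)


def neighbors(cell):
    """4-connected neighbors: adjacent sectors (wrapping) and adjacent rings."""
    ring, sector = cell
    out = [(ring, (sector + 1) % N_SECTORS), (ring, (sector - 1) % N_SECTORS)]
    if ring + 1 < N_RINGS:
        out.append((ring + 1, sector))
    if ring > 0:
        out.append((ring - 1, sector))
    return out


def a_star(start, goal, obstacles):
    """Return the list of cells from start to goal, or None if unreachable."""
    open_heap = [(dist(start, goal), 0.0, start)]  # entries are (f, g, cell)
    best_g = {start: 0.0}
    parent = {start: None}
    closed = set()
    while open_heap:
        _, g_cur, cur = heapq.heappop(open_heap)
        if cur in closed:
            continue  # stale heap entry
        if cur == goal:
            path = []
            while cur is not None:
                path.append(cur)
                cur = parent[cur]
            return path[::-1]
        closed.add(cur)
        for nb in neighbors(cur):
            if nb in obstacles or nb in closed:
                continue
            cand = g_cur + dist(cur, nb)
            if cand < best_g.get(nb, math.inf):
                best_g[nb] = cand
                parent[nb] = cur
                heapq.heappush(open_heap, (cand + dist(nb, goal), cand, nb))
    return None


# Example: robot at the origin, tracked target at (2.0, 1.0) m, two obstacle cells.
start = plane_to_cell(0.0, 0.0)
goal = plane_to_cell(2.0, 1.0)
obstacles = {(1, 0), (2, 0)}
path = a_star(start, goal, obstacles)
```

Because the step cost and the heuristic are both straight-line distances between cell centres, the heuristic never overestimates the remaining cost, so the returned path is the shortest one on this cell graph. In a full system the `obstacles` set would come from the per-cell pixel statistics and `goal` from the tracker's output; both are supplied by hand here.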
