Stacking-based no-reference super-resolution image quality evaluation method

A technology for super-resolution image quality evaluation, applied in fields such as complex mathematical operations, instruments, and character and pattern recognition. It addresses the difficulty of evaluating the quality of super-resolution images accurately and effectively, and improves prediction accuracy.

Embodiment Construction

[0038] The present invention will be described in detail below in conjunction with the accompanying drawings and specific embodiments.

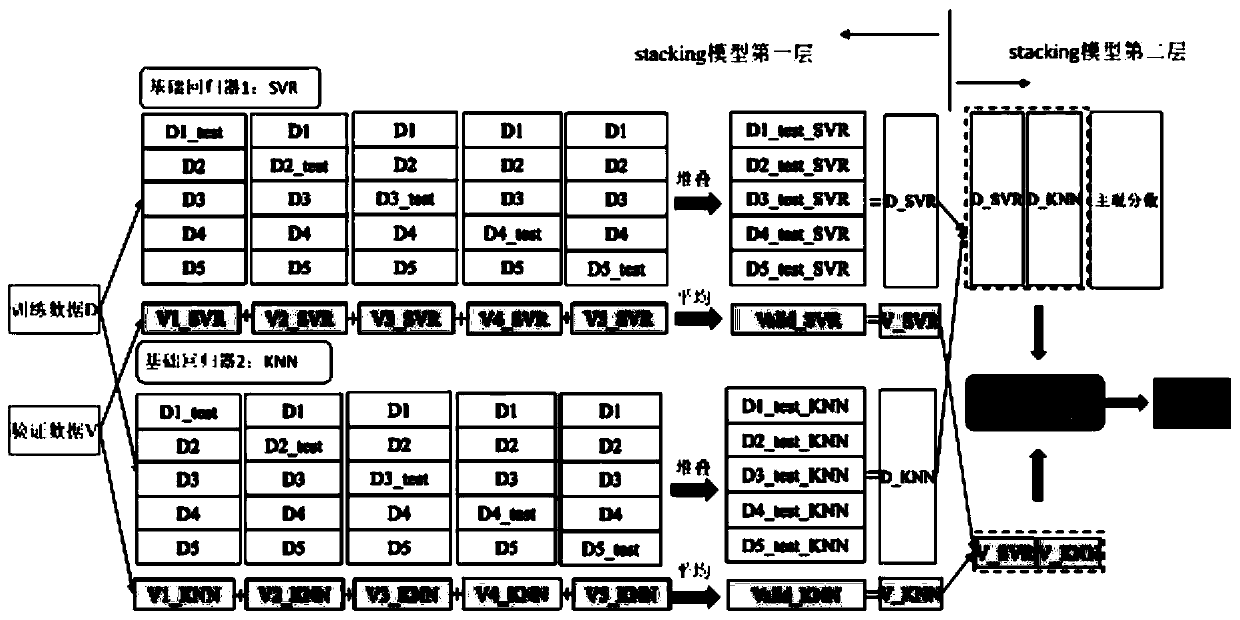

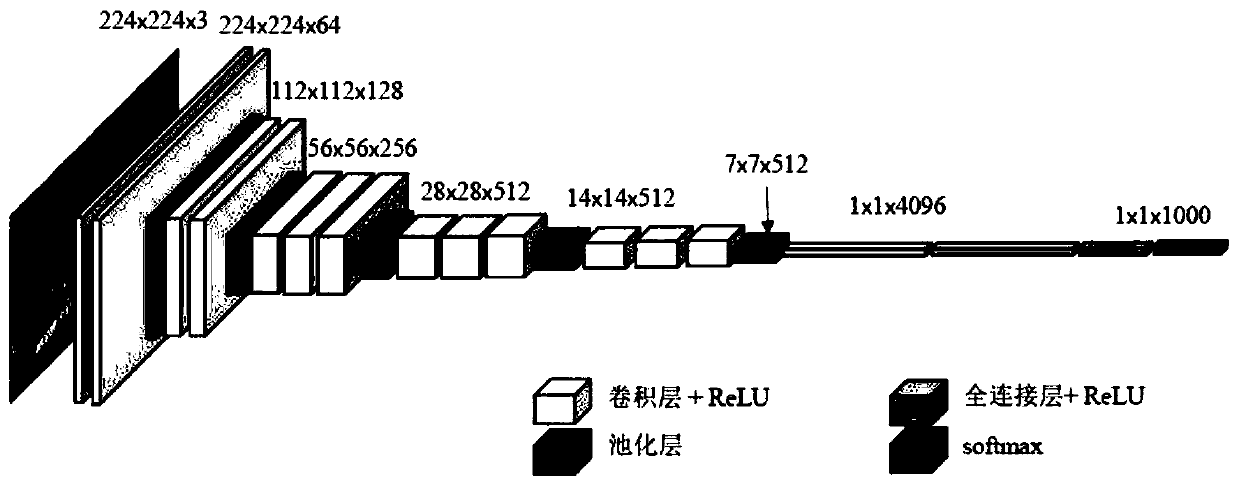

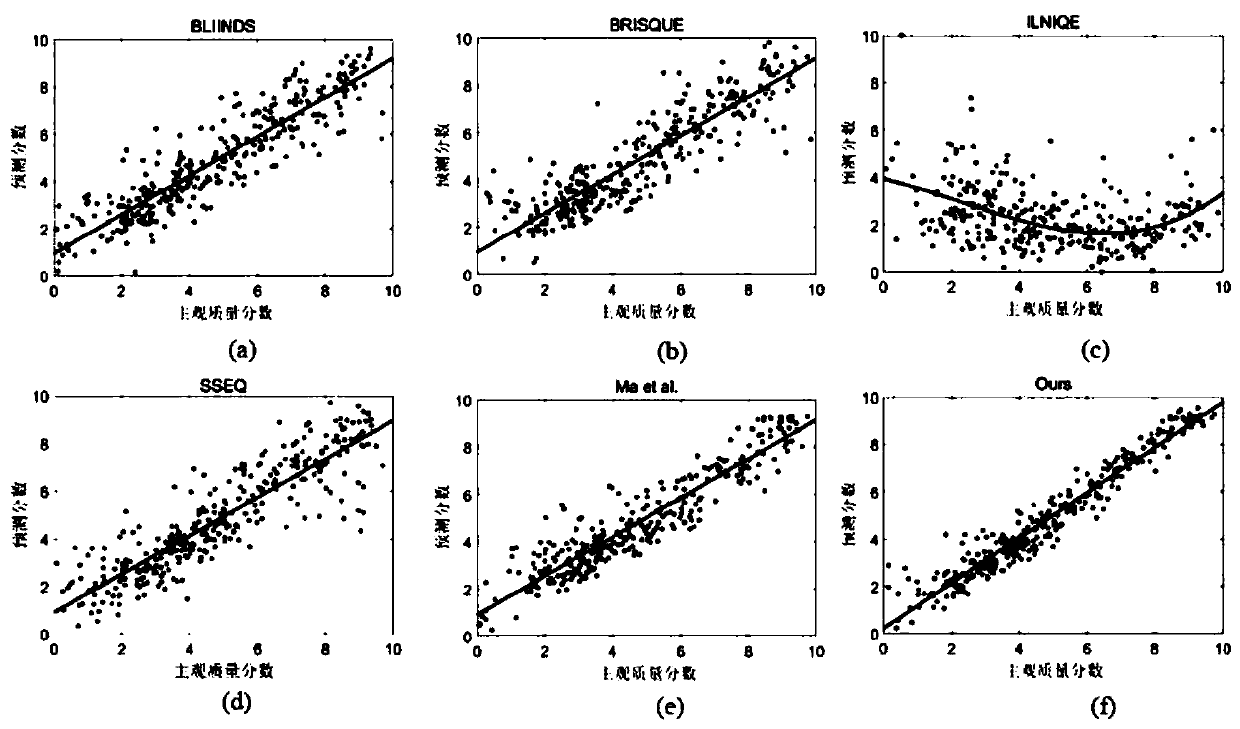

[0039] The stacking-based no-reference super-resolution image quality evaluation method of the present invention, as shown in Figures 1 and 2, comprises two main stages: a deep feature extraction stage and a training stage for a two-layer stacking regression model. The steps are as follows. First, deep features of the super-resolution image are extracted with an existing pre-trained VGGnet model; these features quantify the degradation of the super-resolution image. Next, a stacking regression algorithm comprising the SVR algorithm and the k-NN algorithm serves as the first-layer regression model, constructing a mapping from the deep features extracted by the VGGnet model to predicted quality scores. A linear regression algorithm is then used to obtain the second layer r...
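The two-layer stacking regression described above could be sketched as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: it uses scikit-learn's `StackingRegressor`, and the helper name `build_stacking_regressor`, the hyperparameters (`C`, `n_neighbors`, `cv`), the feature scaling step, and the 64-dimensional feature size are all hypothetical. Random vectors stand in for the VGGnet deep features, and synthetic values stand in for subjective quality scores.

```python
import numpy as np
from sklearn.ensemble import StackingRegressor
from sklearn.linear_model import LinearRegression
from sklearn.neighbors import KNeighborsRegressor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR


def build_stacking_regressor(n_neighbors=5, cv=5):
    """Two-layer stacking: SVR and k-NN as first-layer regressors,
    linear regression as the second-layer (meta) regressor.
    Hyperparameters and the scaling step are illustrative assumptions."""
    first_layer = [
        ("svr", make_pipeline(StandardScaler(), SVR(kernel="rbf", C=1.0))),
        ("knn", make_pipeline(StandardScaler(),
                              KNeighborsRegressor(n_neighbors=n_neighbors))),
    ]
    # The meta-regressor is trained on cross-validated predictions of the
    # first-layer models, which is how StackingRegressor avoids leakage.
    return StackingRegressor(
        estimators=first_layer,
        final_estimator=LinearRegression(),
        cv=cv,
    )


# Synthetic stand-ins (assumption): 200 "images", 64-dimensional "deep features".
rng = np.random.default_rng(0)
features = rng.normal(size=(200, 64))
weights = rng.normal(size=64)
scores = features @ weights + 0.1 * rng.normal(size=200)

model = build_stacking_regressor()
model.fit(features[:160], scores[:160])      # training stage
predicted = model.predict(features[160:])    # predicted quality scores
```

In practice the rows of `features` would come from a pre-trained VGGnet applied to each super-resolution image, and `scores` from subjective quality ratings; neither is reproduced here.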
