Unsupervised learning scene feature rapid extraction method fusing semantic information

A method that combines unsupervised learning with semantic information, applied to the rapid extraction of scene features. It addresses problems such as insufficient discrimination of complex scenes and interference with scene matching when binary feature descriptors are used.

Embodiment Construction

[0034] The present invention will be described in detail below in conjunction with the accompanying drawings and specific embodiments.

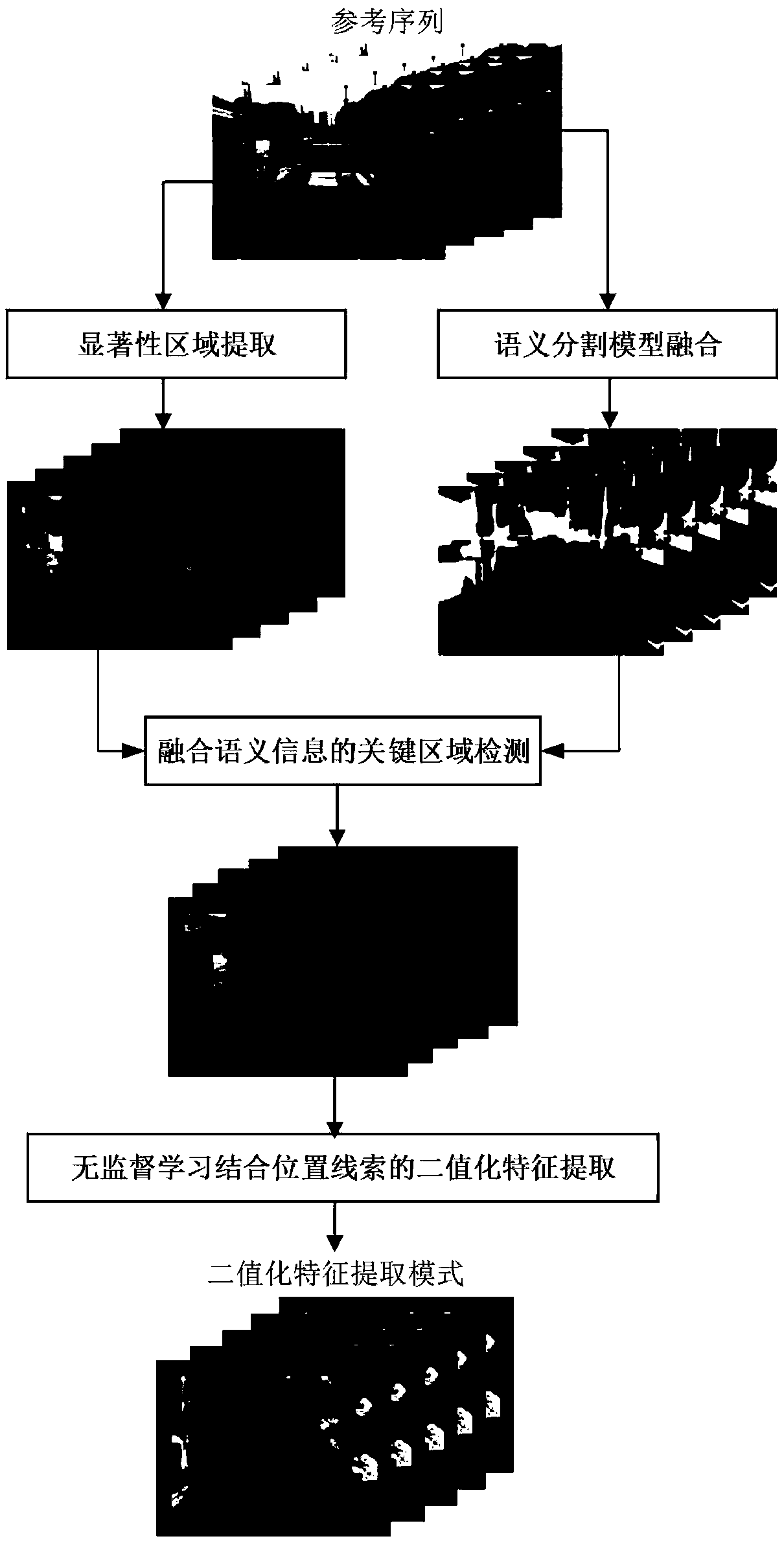

[0035] To achieve high-precision, highly robust extraction of global and local image features while improving the efficiency of scene matching, the present invention considers the guiding role of semantic features in extracting salient regions of the scene, together with the high computational efficiency of binarized feature descriptors, and discloses a fast extraction method of unsupervised learning scene features fused with semantic information. The process is shown in Figure 1 and proceeds through the following steps:

[0036] Step 1: Scene salient region extraction

[0037] First, the video frame is preprocessed to remove blurred and distorted areas. The frame is then scanned row by row with a sliding window to compute the saliency score $S(p(x, y, f_t))$ for each pixel in the image.

[0038]

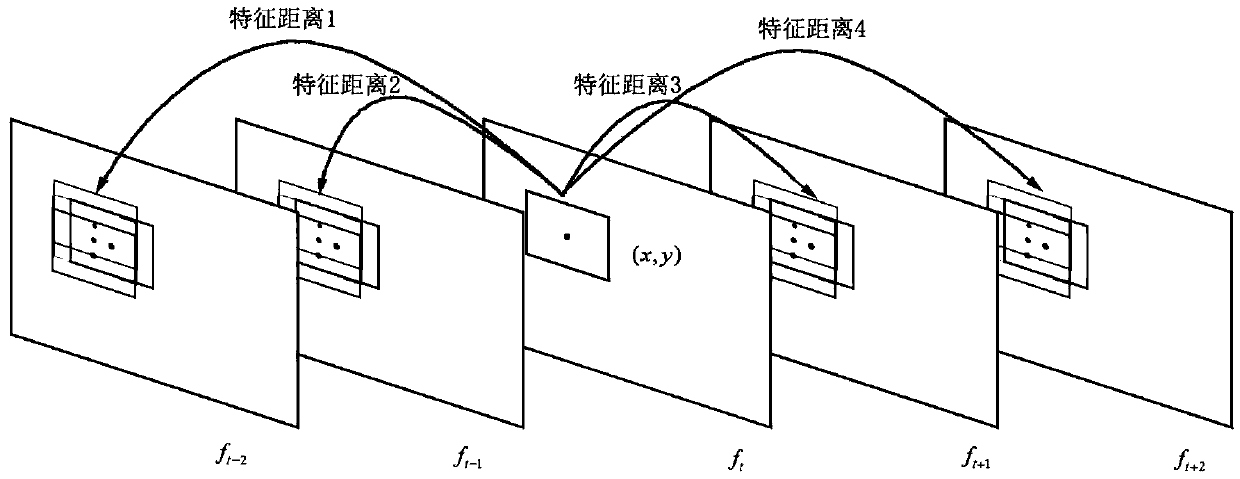
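The row-by-row sliding-window scan described in Step 1 can be sketched as follows. This is only an illustration: the saliency formula itself is not reproduced in this text, so the sketch substitutes a simple local-contrast measure (the absolute difference between a pixel and the mean of its window) as a placeholder. The names `saliency_map`, `Frame`, and the `window` parameter are hypothetical and do not come from the patent.

```python
from typing import List

# A grayscale frame f_t, stored as rows of pixel intensities (assumed layout).
Frame = List[List[float]]


def saliency_map(frame: Frame, window: int = 3) -> Frame:
    """Return a per-pixel score map using a PLACEHOLDER saliency measure.

    Assumption: saliency is approximated by local contrast,
    |pixel - mean of the surrounding window|. The patent's own
    formula for S(p(x, y, f_t)) is not available here.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    height, width = len(frame), len(frame[0])
    half = window // 2
    scores = [[0.0] * width for _ in range(height)]
    for y in range(height):  # scan the frame row by row
        for x in range(width):
            # Clip the window where it would extend past the frame border.
            y0, y1 = max(0, y - half), min(height, y + half + 1)
            x0, x1 = max(0, x - half), min(width, x + half + 1)
            total = 0.0
            for yy in range(y0, y1):
                total += sum(frame[yy][x0:x1])
            mean = total / ((y1 - y0) * (x1 - x0))
            scores[y][x] = abs(frame[y][x] - mean)
    return scores


if __name__ == "__main__":
    # A dark 5x5 frame with one bright pixel in the centre.
    frame = [[0.0] * 5 for _ in range(5)]
    frame[2][2] = 9.0
    for row in saliency_map(frame, window=3):
        print(row)
```

In this toy frame the bright centre pixel receives the highest score, and pixels whose window contains no bright pixel score zero. A real implementation would replace the placeholder measure with the patent's saliency formula and would likely use a vectorized image library rather than nested Python loops.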

[0039] As shown in Figure 2...
