Method for grabbing object through man-machine cooperation in VR scene

A technology in the field of virtual reality, concerning objects and scenes, that addresses two problems: users cannot operate on 3D virtual objects accurately and efficiently, and the objects may be too far away to reach.


Embodiment Construction

[0051] The present invention will be described in further detail below in conjunction with the accompanying drawings:

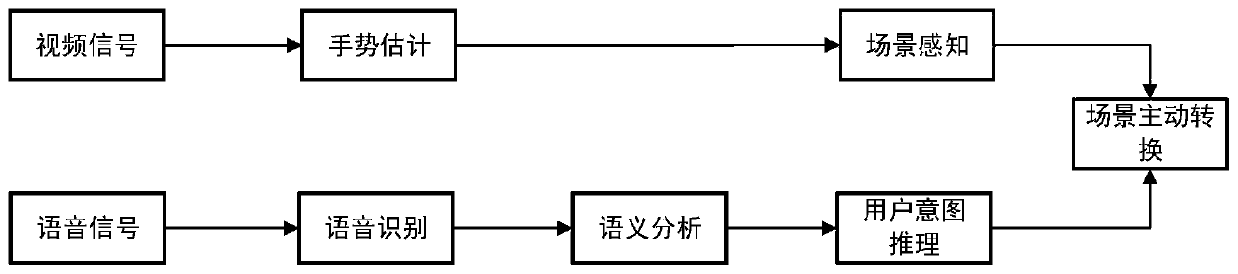

[0052] The present invention uses Intel RealSense to estimate gestures and drive a virtual human hand, and uses the iFLYTEK speech recognition SDK to recognize the user's voice input, namely the audio signal. The recognized speech is then segmented grammatically to obtain the user's operation intention. Situational awareness lets the scene change actively to assist the user in completing the operation. As shown in Figure 1, the steps of the method of the present invention include:

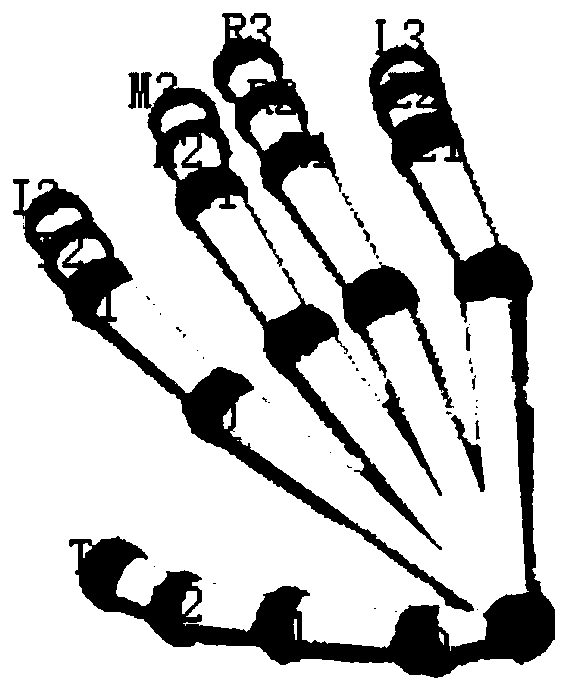

[0053] Gesture recognition and speech recognition are performed at the same time. Gesture recognition collects the video signal of the human hand, drives the movement of the virtual hand in the virtual scene according to that signal, and obtains the gesture recognition result, as shown in Figure 1. Speech recognition collects the user's audio signal, identi...
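The steps in [0052] and [0053] can be sketched as a minimal Python pipeline under stated assumptions; this is not the patent's implementation. The recognizers are hypothetical stand-ins (no Intel RealSense or iFLYTEK SDK calls are made), the keyword vocabularies and the keyword-based `parse_intent` segmentation are invented for illustration, and the "pull the object within reach" rule in `assist_reach` is only one possible reading of the scene actively changing to assist the user. The names `recognize_gesture`, `recognize_speech`, `parse_intent`, `assist_reach`, `step`, and the 0.6 reach threshold are all hypothetical.

```python
import math
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical vocabularies standing in for the patent's (unpublished) grammar.
ACTION_WORDS = {"grab": "grab", "take": "grab", "pick": "grab", "move": "move"}
OBJECT_WORDS = {"cup", "book", "box", "ball"}


@dataclass
class GestureResult:
    hand_pos: Vec3
    label: str  # "grasp" or "open"; returned for downstream use


@dataclass
class Intent:
    action: Optional[str]
    target: Optional[str]


def recognize_gesture(frames: List[dict]) -> GestureResult:
    # Hypothetical stand-in for a RealSense hand tracker: each frame is assumed
    # to already carry a palm position and a pinch flag; report the latest one.
    last = frames[-1]
    return GestureResult(tuple(last["palm"]), "grasp" if last["pinch"] else "open")


def recognize_speech(audio: str) -> str:
    # Hypothetical stand-in for the speech SDK: the "audio" is already a transcript.
    return audio.strip().lower()


def parse_intent(text: str) -> Intent:
    # Keyword-based segmentation into (action, target); an assumption, not the
    # patent's grammatical segmentation.
    action = target = None
    for token in text.lower().replace(",", " ").split():
        if action is None and token in ACTION_WORDS:
            action = ACTION_WORDS[token]
        elif target is None and token in OBJECT_WORDS:
            target = token
    return Intent(action, target)


def assist_reach(obj_pos: Vec3, hand_pos: Vec3, reach: float = 0.6) -> Vec3:
    # Hypothetical scene assistance: if the object is farther than `reach`
    # (scene units) from the hand, move it along the hand-object line until it
    # is exactly `reach` away; otherwise leave it where it is.
    d = [o - h for o, h in zip(obj_pos, hand_pos)]
    dist = math.sqrt(sum(c * c for c in d))
    if dist <= reach:
        return obj_pos
    s = reach / dist
    return (hand_pos[0] + d[0] * s, hand_pos[1] + d[1] * s, hand_pos[2] + d[2] * s)


def step(frames: List[dict], audio: str, scene: dict) -> Tuple[GestureResult, Intent]:
    # Run gesture and speech recognition concurrently, then fuse the results.
    with ThreadPoolExecutor(max_workers=2) as pool:
        g_future = pool.submit(recognize_gesture, frames)
        s_future = pool.submit(recognize_speech, audio)
        gesture, text = g_future.result(), s_future.result()
    intent = parse_intent(text)
    if intent.action == "grab" and intent.target in scene:
        scene[intent.target] = assist_reach(scene[intent.target], gesture.hand_pos)
    return gesture, intent
```

For example, with `scene = {"cup": (0.0, 0.0, 3.0)}` and a single frame whose palm is at the origin, `step(frames, "Please grab the red cup", scene)` parses the intent as `Intent("grab", "cup")` and moves the cup to roughly `(0.0, 0.0, 0.6)`. Submitting both recognizers to a thread pool mirrors the statement that gesture and speech recognition run at the same time; with real SDKs each would be a continuous stream rather than a single call.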
