IPT simulation training gesture recognition method based on binocular vision

A gesture recognition technology based on binocular vision, applied in the field of computer vision and human-computer interaction. It addresses problems such as differences from real interactive operations, reduced recognition accuracy, and loss of the target gesture, with the effect of reducing training costs, improving operation training efficiency, and improving the user experience.

Embodiment Construction

[0066] To make the above-mentioned purposes and advantages of the present invention clearer, specific embodiments of the present invention are described in detail below with reference to the accompanying drawings:

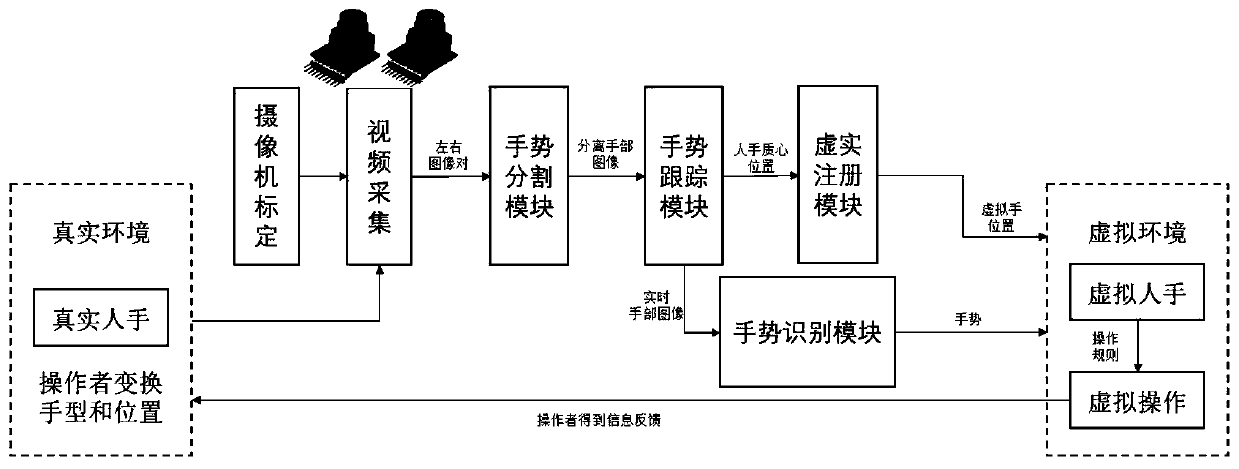

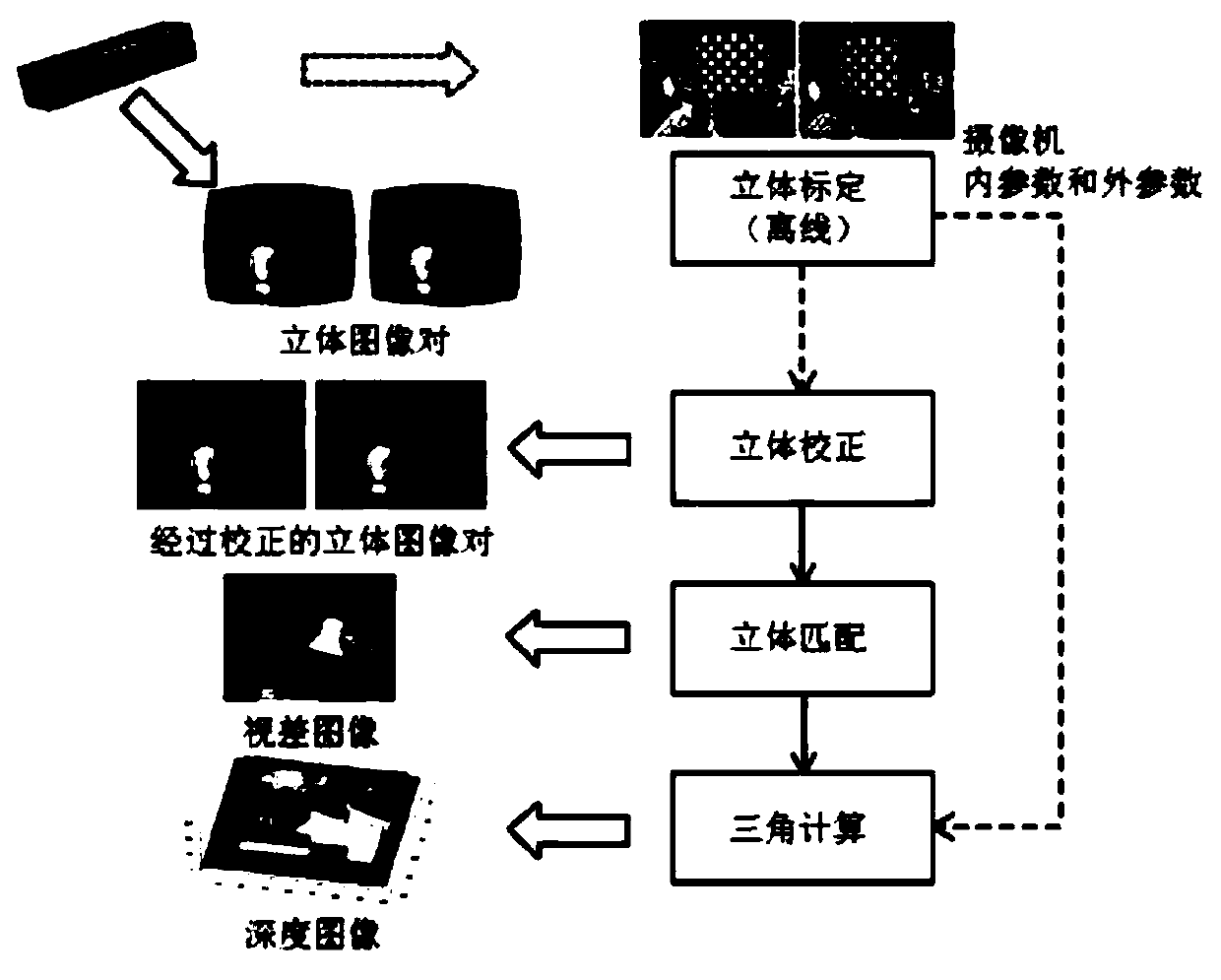

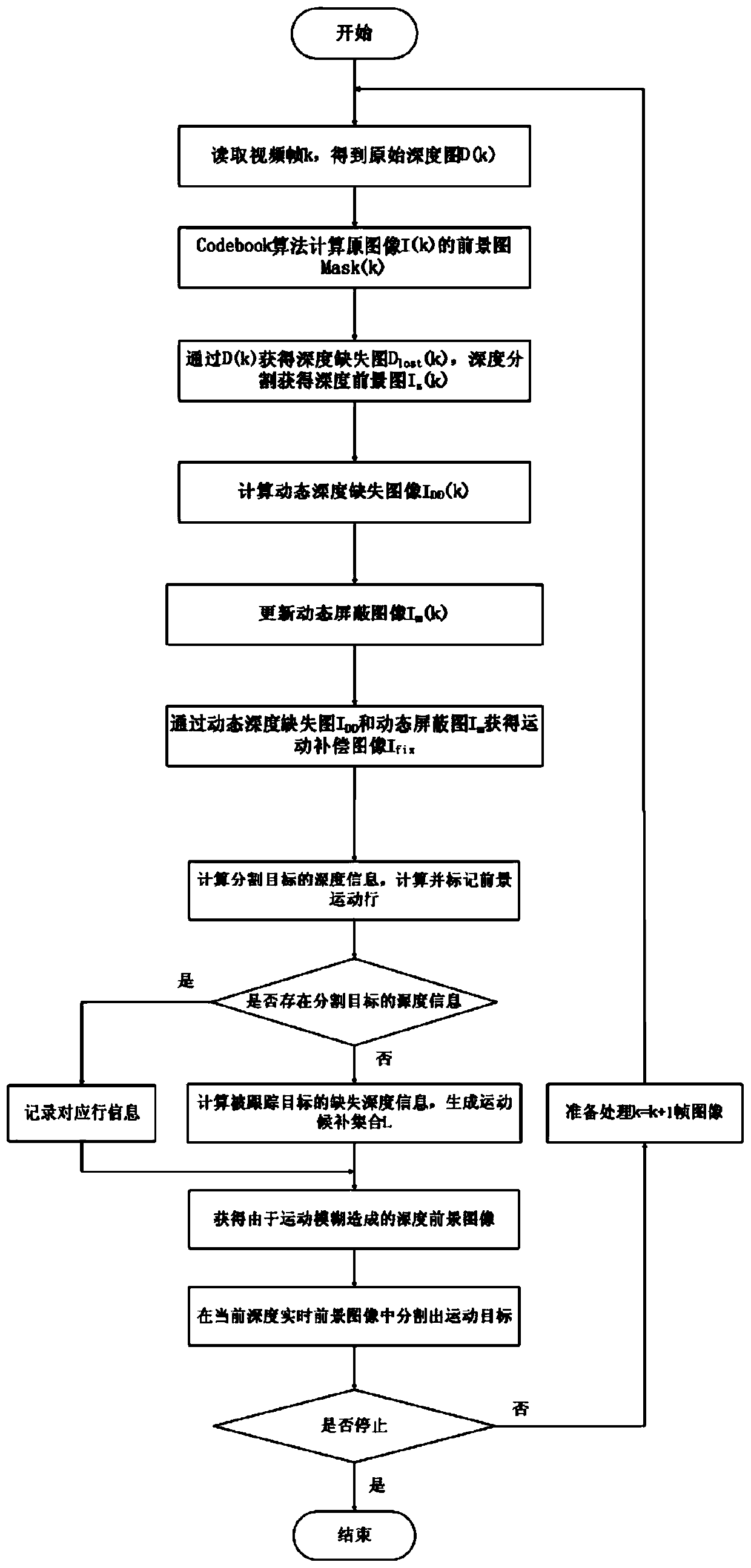

[0067] This embodiment proposes a binocular vision-based gesture recognition method for IPT simulation training. It uses binocular vision-based recognition technology and makes improvements to the three stages of visual gesture recognition: gesture segmentation, gesture tracking, and gesture recognition. For these stages it proposes, respectively, a gesture segmentation method based on depth images and background modeling, a Level Sets gesture tracking method based on depth features, and a hand shape classification method based on extended Haar-like features and improved Adaboost. Figure 1 shows the functional block diagram of the gesture recognition method described in this embodiment.
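As a rough illustration of the first stage named above (gesture segmentation from depth images with background modeling), the following is a minimal sketch, not the method claimed in the patent, whose details are not given in this excerpt. It assumes a running-average background model and a simple depth threshold; the function names `update_background` and `segment_hand`, the parameters `alpha`, `min_diff`, and `max_depth`, and the convention that a depth of 0 means "no reading" are all hypothetical.

```python
from typing import List

# Depth frame in millimetres; 0 is assumed to mean "no reading" (hypothetical convention).
Frame = List[List[float]]


def update_background(background: Frame, frame: Frame, alpha: float = 0.05) -> Frame:
    """Blend a new depth frame into a running-average background model.

    Pixels with no depth reading leave the background value unchanged.
    """
    return [
        [(1 - alpha) * b + alpha * d if d > 0 else b
         for b, d in zip(b_row, d_row)]
        for b_row, d_row in zip(background, frame)
    ]


def segment_hand(frame: Frame, background: Frame,
                 min_diff: float = 50.0, max_depth: float = 800.0) -> List[List[int]]:
    """Return a binary mask of candidate hand pixels.

    A pixel is foreground if it has a valid reading, lies within max_depth of
    the camera, and is at least min_diff closer than the background model.
    """
    return [
        [1 if 0 < d <= max_depth and (b - d) >= min_diff else 0
         for b, d in zip(b_row, d_row)]
        for b_row, d_row in zip(background, frame)
    ]


# Toy example: a flat background at 1500 mm with a near object in the middle row.
background = [[1500.0] * 4 for _ in range(3)]
frame = [
    [1500.0, 1500.0, 1500.0, 1500.0],
    [1500.0, 600.0, 620.0, 1500.0],
    [1500.0, 0.0, 1200.0, 1500.0],
]
mask = segment_hand(frame, background)
for row in mask:
    print(row)
```

In this toy frame only the two near pixels in the middle row are marked as foreground; the invalid pixel (depth 0) and the pixel at 1200 mm, which is beyond the assumed `max_depth`, are rejected. A real system would derive the depth frame from the stereo pair and would typically clean the mask before tracking.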

[0068] Step...
